March 11, 2023 • 5 min read

by Simon Meng, mp.weixin.qq.com • See original

In November 2022, I had the honor of being invited to create a promotional video using AI for the limited international edition smartphone Xiaomi 12T PRO, in collaboration with the famous American artist Daniel Arsham. Now, I'm sharing the creative process and the AI technologies used!

▶ Original video link: Xiaomi Twitter

AI Tools Used:

Stable Diffusion web UI (AUTOMATIC1111) + SD 1.5 model / DreamBooth / Stable Diffusion Studio / frame interpolation + Real-ESRGAN + manual post-production (download links at the end)

Creative Steps:

- Design Script + Generate Concept Images:

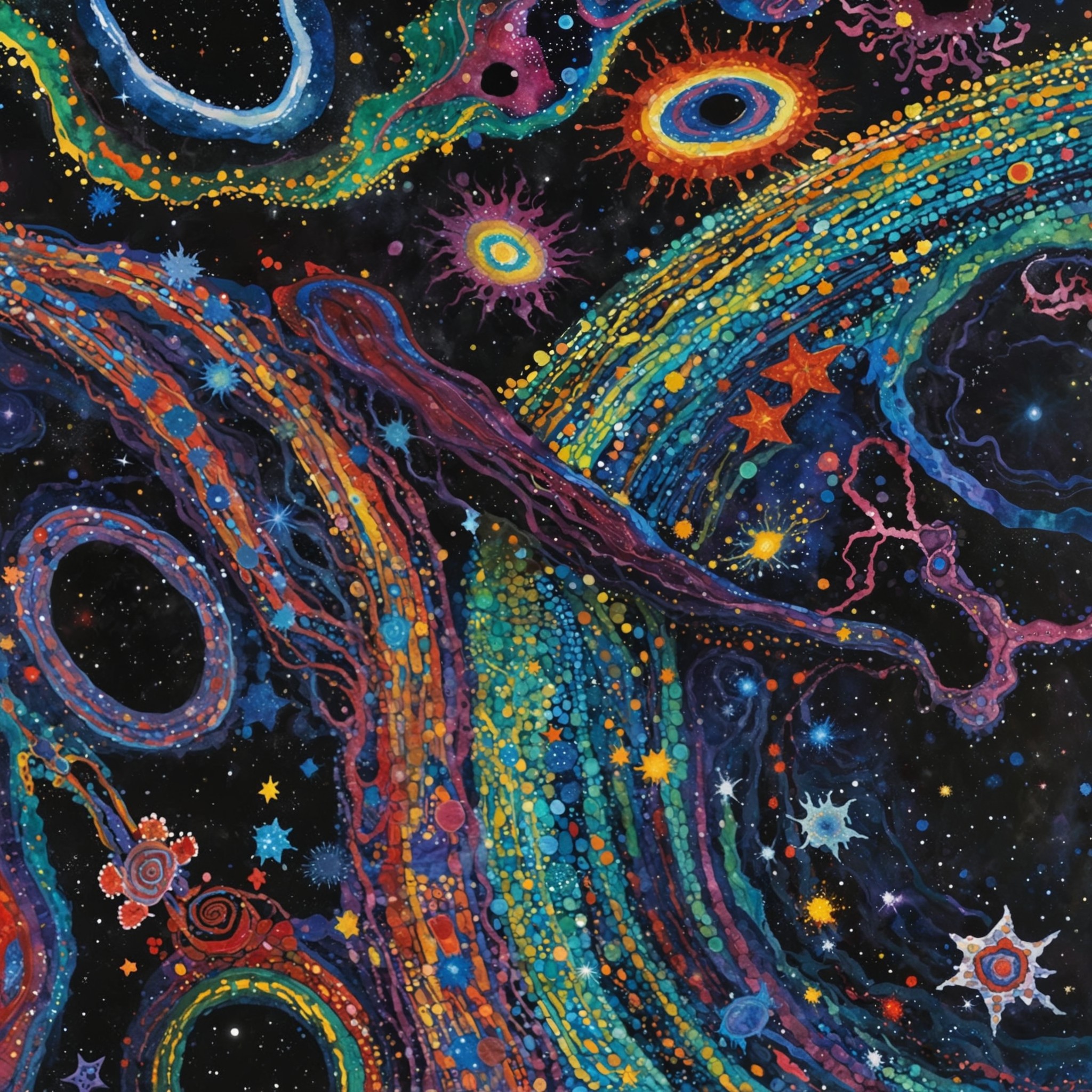

The theme of the phone is "give it time," which can be understood in Chinese as "timeless" or "everlasting." Based on this theme, I designed three scenes: From the Past / For the Present / Back to the Future.

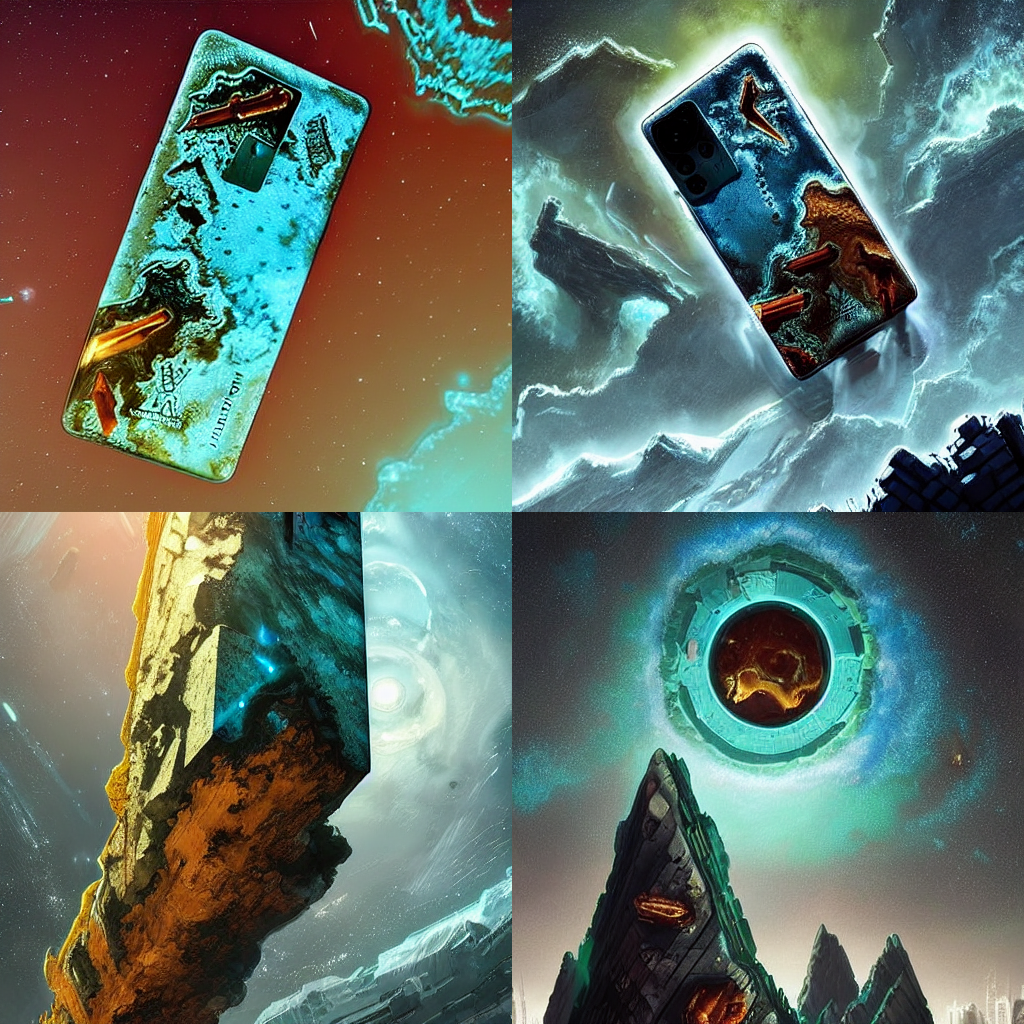

Each scene has its own timeline, so multiple prompts are needed for each, generating a large number of reference images. Based on client feedback, keywords and parameters were continually modified. Below are the foundational texts for each scene along with some process images.

▶ From the Past — Over the long years, through the changes of time, a resilient and mysterious square stone tablet was forged among all things, eventually transforming into a beautifully crafted phone packaging.

▶ For the Present — The phone interface/screen is likened to a time portal, traveling from the future to the bustling modern city, capturing the attention and admiration of people.

▶ Back to the Future — This phone, now a piece of art, has become a symbol of eternity. After years of refinement, it still shines brightly, like an everlasting star in the universe, landing in the city of future civilization.
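
One way to keep track of each scene's timeline is a small prompt schedule that maps a starting frame to a prompt. The sketch below is only an illustration: the prompt strings loosely paraphrase the scene texts above, and the frame count and the `build_schedule` helper are assumptions, not the settings used in the project.

```python
# Hypothetical prompts, paraphrased from the scene descriptions (not the real ones).
SCENES = {
    "From the Past": [
        "ancient landscape weathered by time",
        "mysterious square stone tablet among rocks",
        "beautifully crafted phone packaging",
    ],
    "For the Present": [
        "glowing time portal shaped like a phone screen",
        "bustling modern city, crowd looking up in admiration",
    ],
    "Back to the Future": [
        "phone as a timeless piece of art, shining like a star",
        "city of a future civilization",
    ],
}


def build_schedule(prompts, total_frames):
    """Spread prompts evenly over a scene: returns {start_frame: prompt}."""
    if not prompts:
        return {}
    if total_frames < len(prompts):
        raise ValueError("need at least one frame per prompt")
    step = total_frames // len(prompts)
    return {i * step: prompt for i, prompt in enumerate(prompts)}


if __name__ == "__main__":
    for name, prompts in SCENES.items():
        print(name, build_schedule(prompts, total_frames=120))
```

Keeping the schedule as plain data makes it easy to revise keywords after each round of client feedback without touching the rest of the pipeline.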

- Finetune Model

Because the final animation had to show the defining features of the phone and its packaging, I had to fine-tune the model. I first took dozens of photos of the phone's front, back, and packaging against various backgrounds and under different lighting conditions, then used DreamBooth to fine-tune the SD 1.5 model until identifier words in the prompt reliably produced a clear rendering of the phone. (By the way, the design and final presentation of this phone case and packaging are among the most beautiful I have ever seen.)

- Keyframe Determination

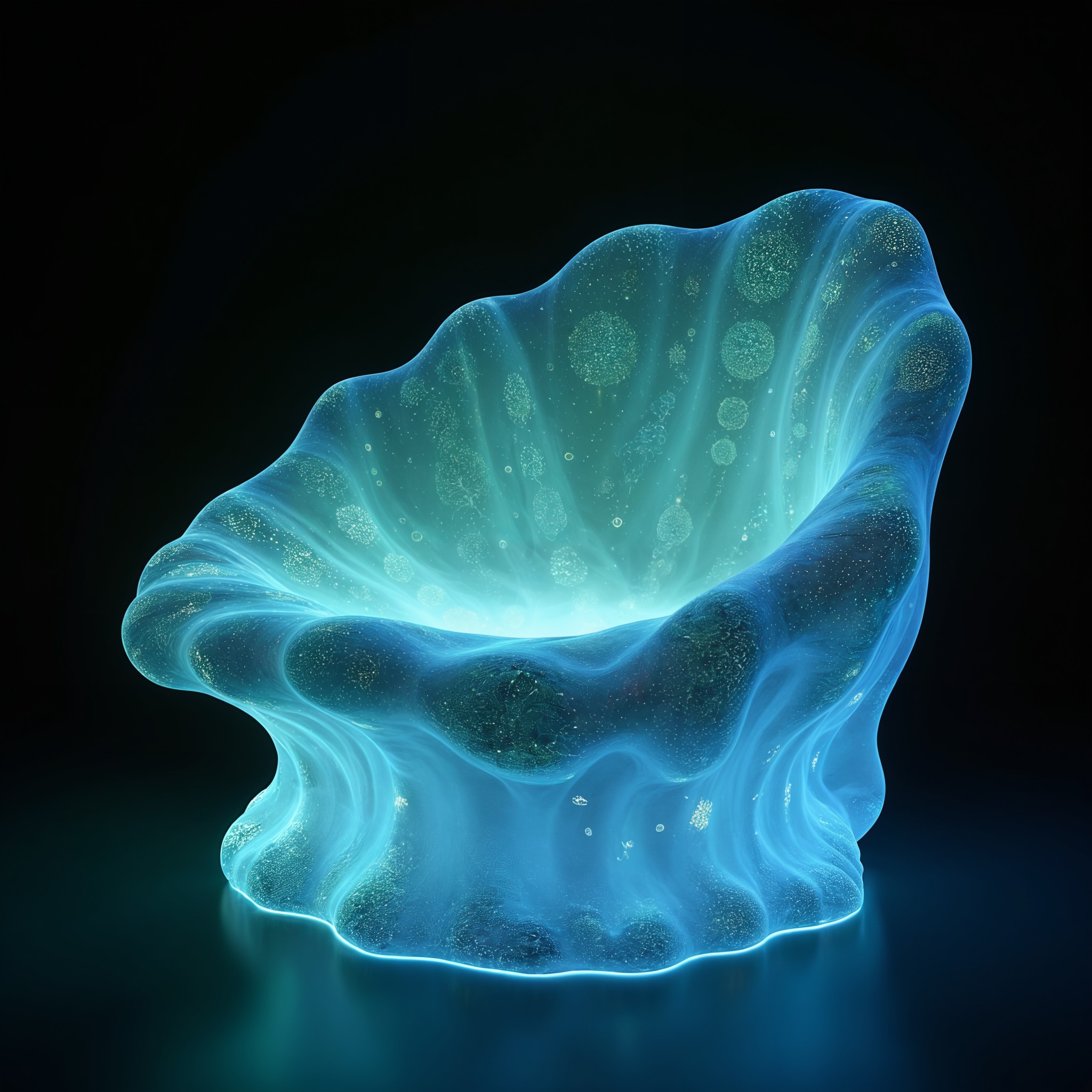

Using the trained model, I combined the identifier words with the previously tested scene prompts to generate a large number of images showing the product's features. I made adjustments based on client feedback and finally selected the keyframes that matched each scene's storyline.
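
Combining identifier words with scene prompts can be sketched as a small batch builder. Everything specific here is hypothetical: the identifier `sks phone`, the seeds, and the `product_prompts` helper are placeholders, since the article does not give the real identifier words or generation settings.

```python
from itertools import product

# Hypothetical identifier; the one actually trained with DreamBooth is not stated.
IDENTIFIER = "sks phone"


def product_prompts(scene_prompts, identifier=IDENTIFIER, seeds=(1, 2, 3)):
    """Pair every scene prompt with the identifier and each seed."""
    return [
        {"prompt": f"{identifier}, {scene}", "seed": seed}
        for scene, seed in product(scene_prompts, seeds)
    ]


if __name__ == "__main__":
    batch = product_prompts(["stone tablet in a desert", "neon city street"])
    for job in batch:
        print(job)
```

Each dictionary describes one candidate image, so a batch like this yields several variations per scene prompt to choose keyframes from.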

- Animation Generation

I connected these keyframes with Stable Diffusion Studio to create smooth transitions (it may now be possible to do this directly with the guided-images feature of the Deforum extension for the Stable Diffusion web UI). I then generated a video and adjusted the settings based on the results.
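
The article does not describe how Stable Diffusion Studio blends keyframes internally. A technique commonly used for this kind of transition is spherical linear interpolation (slerp) between the latent vectors of two keyframes. The pure-Python sketch below shows the idea on plain lists of floats, which stand in for real latents; it is an assumption about the general approach, not the tool's actual code.

```python
import math


def slerp(a, b, t):
    """Spherically interpolate between non-zero vectors a and b, t in [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding errors just outside [-1, 1].
    cos_omega = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if sin_omega < 1e-6:
        # (Anti)parallel vectors: fall back to plain linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


def transition(a, b, steps):
    """Return `steps` in-between vectors, excluding the two endpoints."""
    return [slerp(a, b, i / (steps + 1)) for i in range(1, steps + 1)]


if __name__ == "__main__":
    for vec in transition([1.0, 0.0], [0.0, 1.0], steps=3):
        print([round(v, 3) for v in vec])
```

In a real pipeline each in-between latent would be decoded into an image, giving the frames between two keyframes.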

- Manual Frame Editing

The generated frames were exported, with some flawed frames manually deleted or modified. Physical photos of the phone/packaging were manually inserted into the last frame of each video (the final frame needed to focus on the product, as the AI-generated phone images weren't detailed or realistic enough).
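
Deleting flawed frames leaves gaps in the frame numbering, which many video tools do not accept. The sketch below plans a renumbering of the frames that are kept. The file-name pattern and the `renumber_plan` helper are assumptions for illustration; the editing itself, including inserting the product photos, was done by hand.

```python
def renumber_plan(frames, flawed):
    """Drop flawed frames and give the rest contiguous names.

    Assumes zero-padded names, so sorting by name is sorting by time.
    Returns a list of (old_name, new_name) pairs for the kept frames.
    """
    kept = [name for name in sorted(frames) if name not in flawed]
    return [(old, f"clean_{i:05d}.png") for i, old in enumerate(kept)]


if __name__ == "__main__":
    exported = [f"frame_{i:05d}.png" for i in range(6)]
    rejected = {"frame_00002.png", "frame_00003.png"}  # hypothetical rejects
    for old, new in renumber_plan(exported, rejected):
        print(old, "->", new)
```

The plan only lists renames, so it can be reviewed before any file is actually moved.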

- AI Upscaling + Frame Interpolation

Using Real-ESRGAN, I upscaled the frames to 2K, applied frame interpolation to smooth out any rough spots, and manually adjusted the video's sharpness, saturation, and contrast. Mission accomplished! Yeah!
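
The arithmetic behind this step is simple to check. The sketch below assumes 512-pixel-wide source frames (the native resolution of SD 1.5), treats "2K" as 2048 pixels wide, and assumes the interpolation runs in 2x passes; none of these numbers are stated in the article, and both helper functions are hypothetical.

```python
def upscale_factor(src_width, target_width=2048, available=(2, 4)):
    """Smallest available scale factor that reaches the target width."""
    for scale in sorted(available):
        if src_width * scale >= target_width:
            return scale
    raise ValueError("no available scale reaches the target width")


def interpolated_frame_count(n_frames, passes):
    """Each 2x pass inserts one new frame between every adjacent pair."""
    return (n_frames - 1) * 2 ** passes + 1


if __name__ == "__main__":
    print(upscale_factor(512))               # scale needed for 512 -> 2048
    print(interpolated_frame_count(120, 1))  # frame count after one 2x pass
```

Under these assumptions a single 4x upscale takes a 512-pixel frame to 2048 pixels, and each interpolation pass roughly doubles the frame count.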

Download Links for Tools:

▶ Stable Diffusion web UI (AUTOMATIC1111): GitHub

▶ DreamBooth (Colab version; you can also use SD web UI plugins or try LoRA as a substitute): GitHub

▶ Stable Diffusion Studio (used in conjunction with SD webUI, though it seems to no longer be compatible with the latest SD version): GitHub

▶ Frame Interpolation: GitHub

▶ Real-ESRGAN: GitHub

- Author: Simon Shengyu Meng

- Link: https://simonsy.net/article/AI4xiaomi-en

- License: This article is licensed under CC BY-NC-SA 4.0. Please credit the source when republishing.
