date

Feb 8, 2022

type

Post

status

Published

slug

AI-archi-leifeng-en

summary

This is a super hardcore long article about AI + architecture + creation. I would like to thank Hua Jie, Xiao Gang, Zhang Wanlin, and other global knowledge-sharing friends for their efforts. Through the enlightening Q&A, I have learned a lot myself (resulting in a hardcore and systematic article).

tags

展览

资讯

建筑

艺术

Report

Art

Project

Architecture

category

Knowledge

icon

password

URL


February 8, 2022 • 72 min read

by Simon's Daydream, mp.weixin.qq.com • See original

This is a super hardcore long article. Thanks to Hua Jie, Xiao Gang, Zhang Wanlin, and the other friends at Global Knowledge Lei Feng for their efforts. I learned a lot from the enlightening Q&A, which produced an article that is both hardcore and systematic. PS: The interview took place more than three months ago, and more interesting models have emerged since then. For more information, scan the code at the end of the article to join the discussion group.

How does a PhD in Architecture "conquer challenges" with AI?

Can artificial intelligence replace humans in artistic creation?

What are the breakthrough points for creation using AI?

What AI trends should architectural practitioners pay attention to?

Answers loading...

Supernova Explosion

Meng Shengyu Simon

Interdisciplinary researcher in architecture, artificial intelligence, and biology

and artistic creator

PhD candidate in architecture at the University of Innsbruck (UIBK)

Master of Architecture from University College London (UCL)

Teaching assistant and invited review guest for technical courses at UIBK and UCL

WeChat Official Account, WeChat Channels, Xiaohongshu, Bilibili, and Weibo: Simon's Daydream

"Can machines think?"

This question was raised by Alan Turing in 1950,

and it is the theoretical foundation of artificial intelligence.

Today, artificial intelligence is widely applied in areas such as autonomous driving, facial recognition, unmanned stores, voice recognition, and healthcare. In this episode, our supernova Simon uses artificial intelligence to create stunning architecture, cities, and alien life forms...

"Switching to coding" seems to be a high-frequency phrase among architecture students when they discuss the future. Two roads appear at the fork: keep grinding away at architecture, or escape it completely?

Simon is trying to forge an uncharted third path between these two roads. He actively picks up cutting-edge AI algorithms in this increasingly digital world while applying his years of accumulated architectural knowledge in new creative contexts.


Interview

Q: Lian Xiaogang

Founder of the children's education brand "Bai Zao Xue Tang," graduated from Tsinghua University's architecture department, a behind-the-scenes figure of Little Lei Feng

A: Meng Shengyu

Interdisciplinary researcher in architecture, artificial intelligence, and biology, and artistic creator, PhD candidate in architecture at the University of Innsbruck

Organized by: Zhang Wanlin

Editor-in-chief of the "Supernova Explosion" column at Global Knowledge Lei Feng

Interview Framework

- Initial exposure to AI

- How to cross industry thresholds: positive feedback and opportunities

- Personal qualities and talents: the ability to connect dots

- Biology: drawing on complex systems to expand human limitations

- AI evolution and instincts

- Is AI based on algorithms or data?

- Challenges in AI creation: yield rate and industrial standards

- AI directions worth noting for architects

- Similarities between AI learning and children's cognition based on algorithms/logics

- Does AI help with a lack of creative content?

- Image quality, generalization, speed

- Works featuring alien life: AI's perception of different objects

- Beginner's guide, tutorial recommendations

- Appreciated creators

- AI workshop design

- Hardware requirements: not high

- Using different tools in architectural workflows: Grasshopper, Blender, AI...


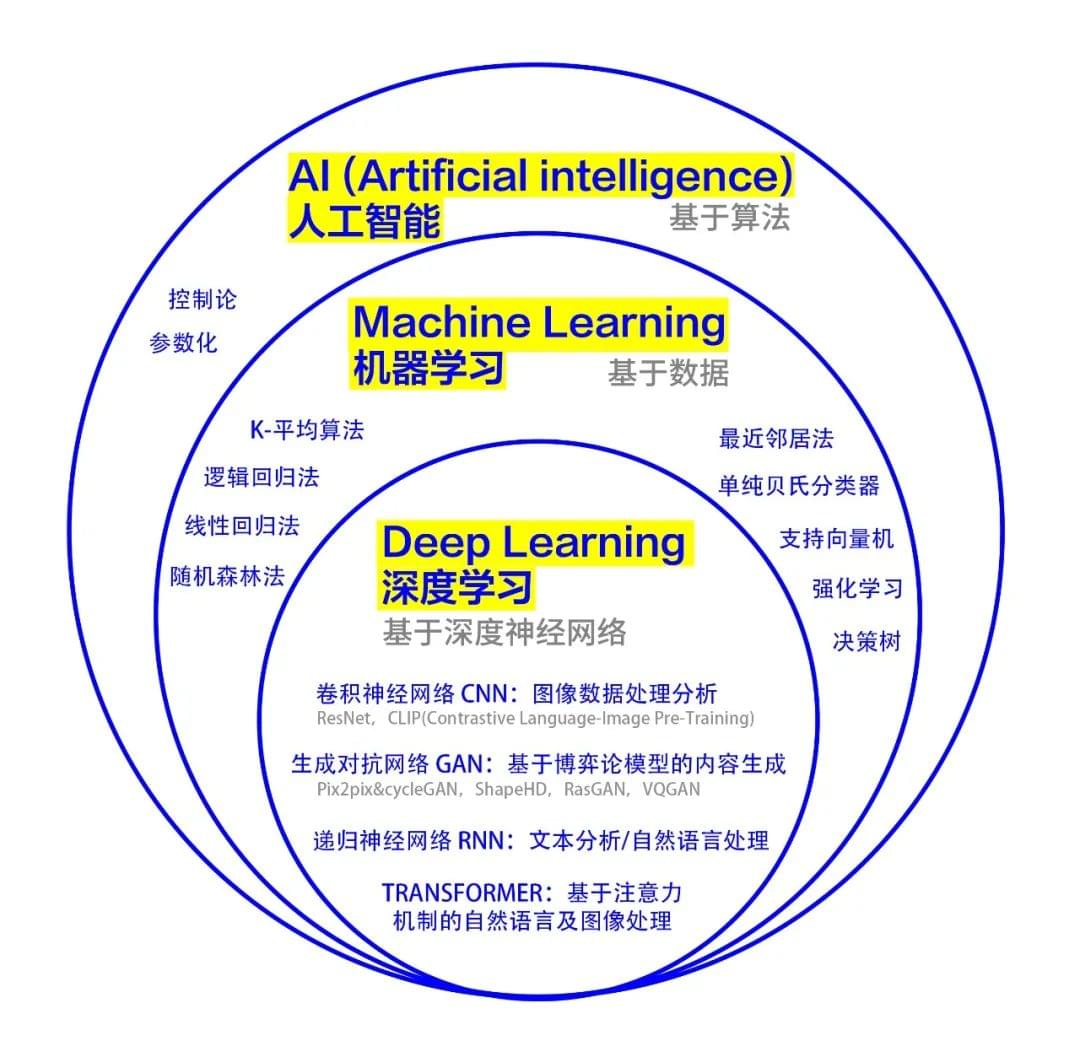

AI Concept Knowledge Card

Technical terms from the AI field that appear frequently in the text

Readers who are new to AI may want to read the following sections first

Artificial Intelligence (AI) is a branch of computer science that attempts to create machines that can respond in ways similar to human intelligence.


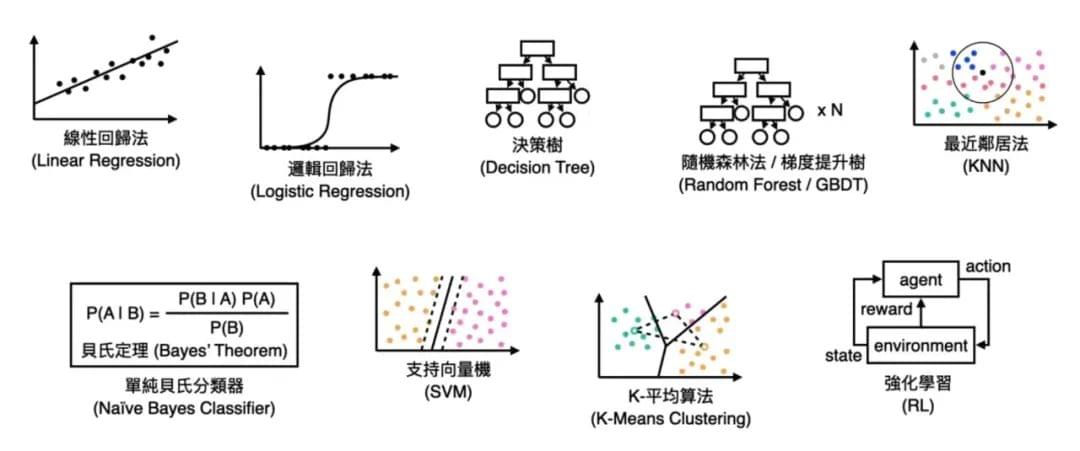

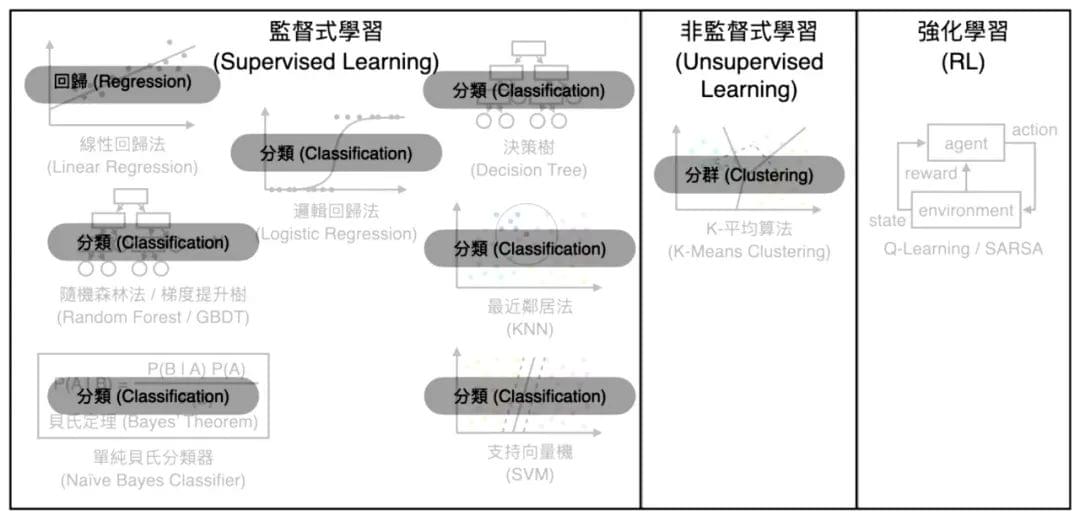

Machine Learning is a method of achieving artificial intelligence. The most basic practice of machine learning is to use algorithms to analyze data based on statistics and probabilities, learning the patterns of data distribution, and then making decisions and predictions about events in the real world. Unlike traditional software programs that solve specific tasks through hard coding, machine learning is trained using large amounts of data, learning how to complete tasks through various algorithms. The main research directions of traditional machine learning include decision trees, random forests, Bayesian learning, etc.

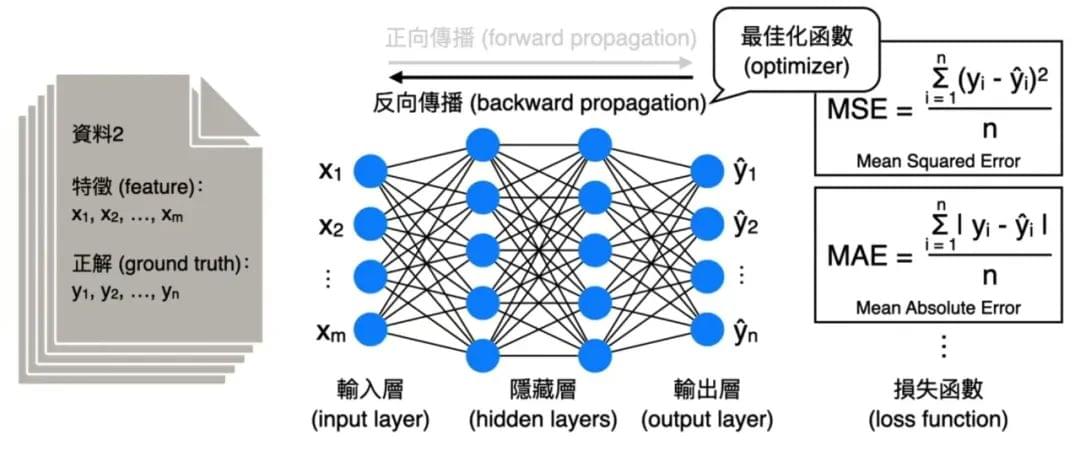

Deep Learning is a new research direction in the field of machine learning. It was introduced to bring machine learning closer to its original goal—artificial intelligence.

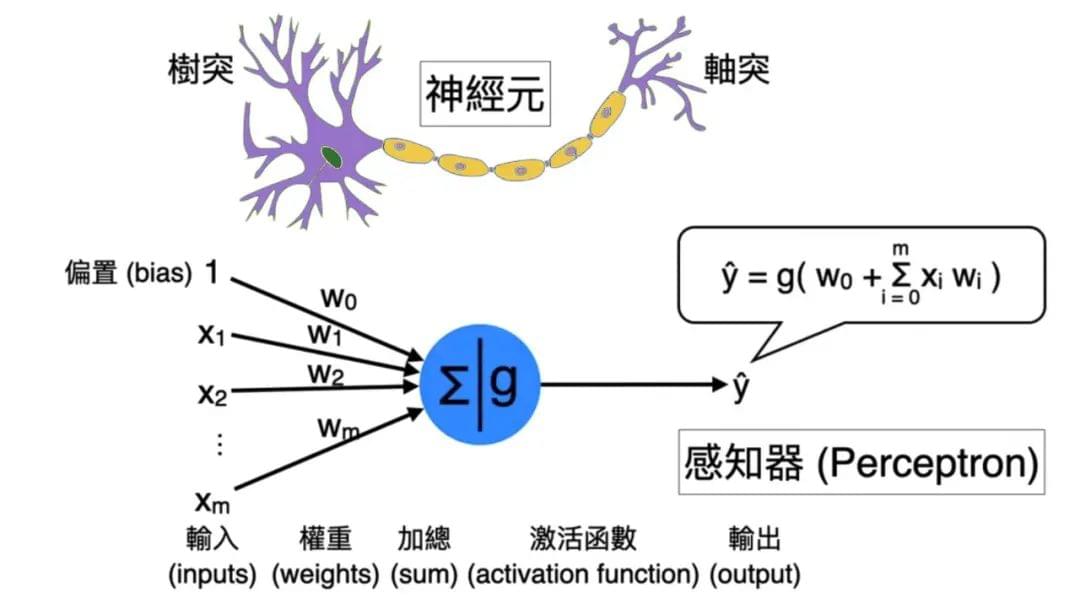

How can machines think and make judgments as easily as humans? Artificial Neural Networks are models that loosely imitate the human brain in order to learn from data. They consist of interconnected units (artificial neurons), each of which takes numerical inputs and produces a numerical output, typically computed from a linear combination of its inputs. The network must first learn according to a learning criterion before it can perform its task. When the network makes an incorrect judgment, learning adjusts it to reduce the likelihood of repeating the same mistake. Deep learning mimics the brain's mechanisms to interpret data such as images, sounds, and text.
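To make the idea of "learning from incorrect judgments" concrete, here is a minimal sketch of a single artificial neuron trained with the classic perceptron rule. The toy task (logical AND), the learning rate, and the function names are assumptions chosen for illustration; real deep learning models stack many such units and train them with gradient-based methods.

```python
def train_perceptron(samples, epochs=20, lr=1):
    """Train one artificial neuron on (inputs, label) pairs with the perceptron rule."""
    n_inputs = len(samples[0][0])
    weights = [0] * n_inputs
    bias = 0
    for _ in range(epochs):
        for inputs, label in samples:
            # Weighted (linear) combination of the inputs, then a hard threshold.
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = label - prediction  # 0 when correct, +1 or -1 when wrong
            # Only a wrong judgment changes the weights.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Apply the trained neuron to one input vector."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# Hypothetical toy data: the neuron should output 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
for inputs, label in AND_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "expected", label)
```

Each mistake nudges the weights in the direction that would have made the answer right, which is the "learning criterion" in its simplest form.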

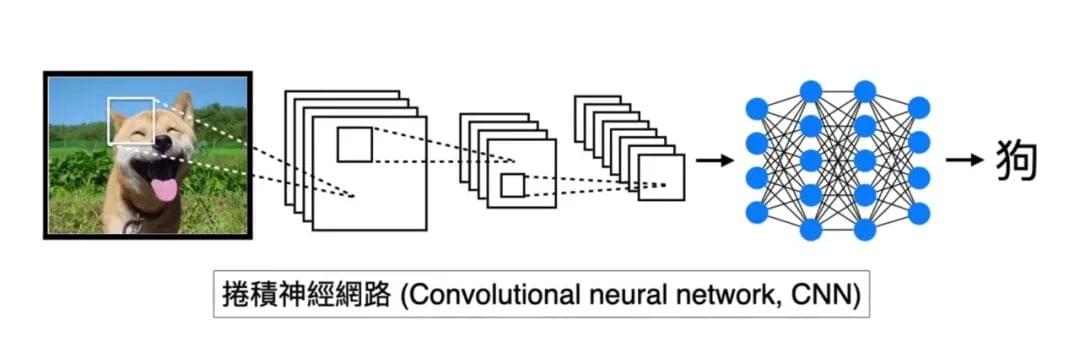

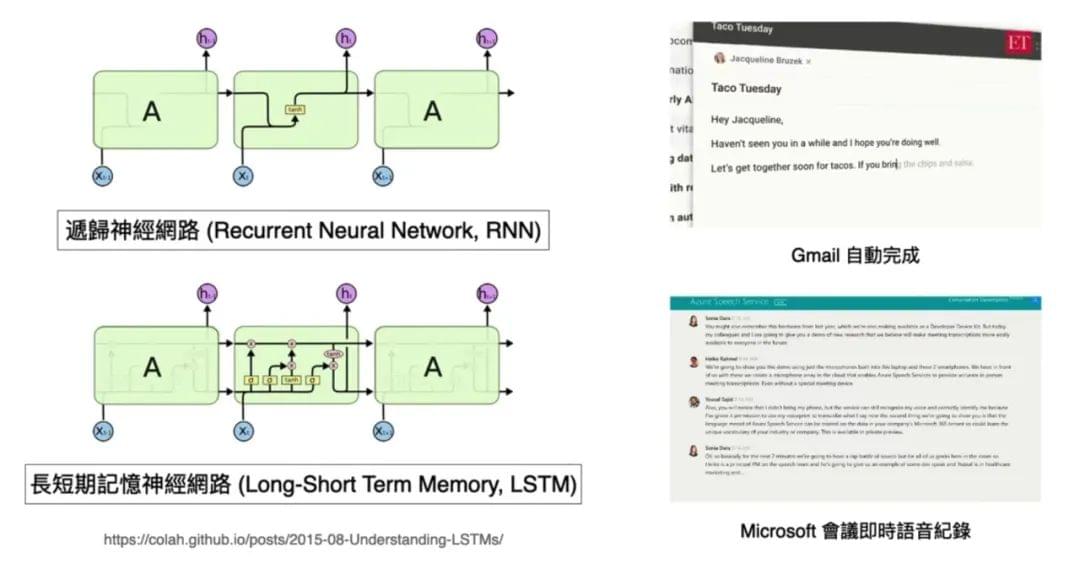

Typical deep learning models include Convolutional Neural Networks (CNN), Generative Adversarial Networks (GAN), and Recurrent Neural Networks (RNN).

Source:

Wikipedia

1.

Initial Exposure to AI

/ / / / / /

Xiao Gang:

When did you start to get involved in the field of artificial intelligence? What was your experience back then?

Simon:

Are you referring to AI in the narrow sense, or can we include computational generation in a broader sense?

Xiao Gang:

You can include computational generation.

Simon:

In that case, it's very early. It should be around 2010. I was an architecture undergraduate starting in 2008, and I began exploring parametric design not long after I started learning architecture. The term "parametric" has evolved a bit since then, but that was when I started using tools like Grasshopper.

I have always been interested in using tools. In high school, I began making personal websites and learning Photoshop. Before encountering parametric design, I never thought tools could be so powerful. Previously, tools merely extended your capabilities, requiring you to execute every step accurately; they only saved you a little effort. But afterward, you only needed to plant a seed, and it could grow into a tree—now, AI can grow a large tree, while before it could only grow a sapling, which was already enough to attract me. So, my exposure to computational generation has been synchronous with my architectural studies.

My exposure to AI in the narrow sense largely depended on the development of AI tools. Earlier deep learning frameworks were challenging to use, like the early Torch and Chainer, which were very hard to handle, and back then, there was no Google Colab or Anaconda. In short, the entry barrier was very high.

In 2015, when I graduated from UCL and returned to China, many things happened and created opportunities. For instance, AlphaGo defeated Lee Sedol and Ke Jie, making the world recognize the potential of AI. Of course, my interest also stemmed from a personal liking for this area.

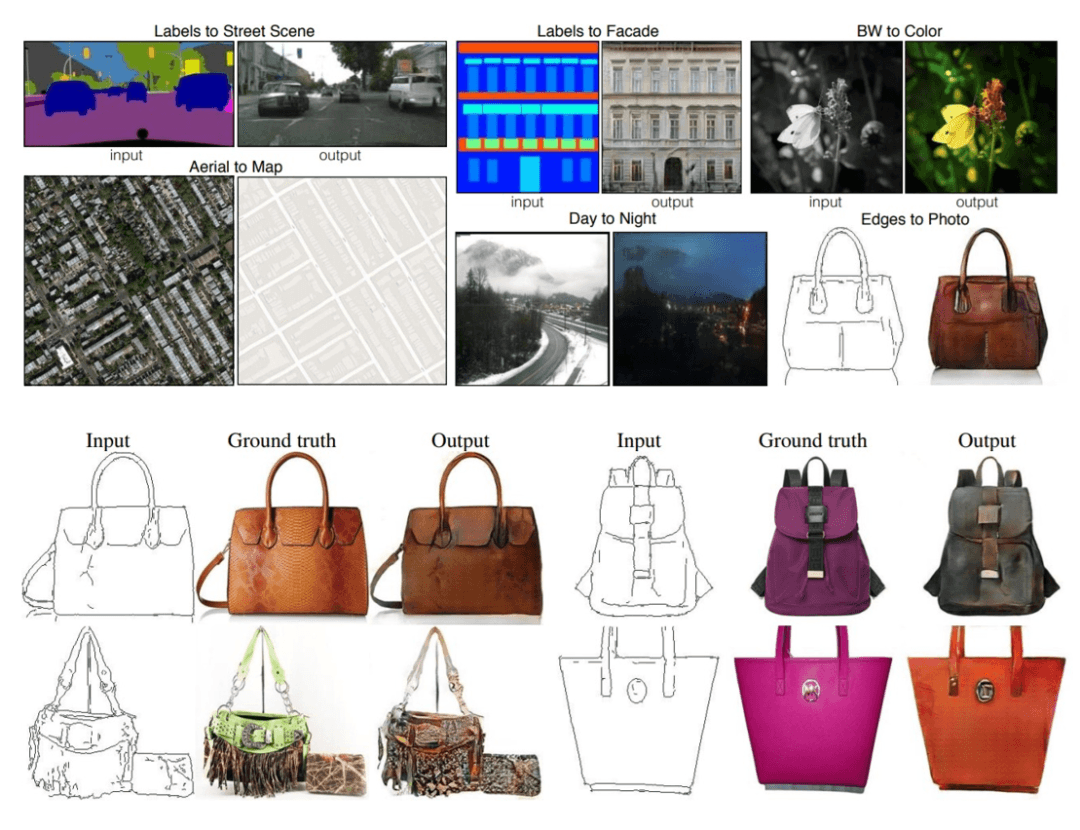

On the other hand, many algorithms emerged that could be applied in our workflows. For example, around 2016, Pix2pix and CycleGAN appeared, enabling many kinds of image-to-image translation. Image translation closely resembles tasks in architectural work, where design often moves from a sketch to a rendered image or from a sketch to technical drawings. By that time, there were already relatively convenient tools. I'm not sure whether Anaconda was available, but I had installed an Ubuntu environment myself. In summary, the operational barriers had become relatively low. Even if you weren't an AI expert, you could use open-source frameworks to enter this field.

So, there was an opportunity, and the technology had developed to a level suitable for those not majoring in computer science but with some exposure, and then I embarked on a... I wouldn't call it a path of trials, but rather a fantastic journey.

2.

How to Cross Industry Thresholds:

Positive Feedback and Opportunities

/ / / / / /

Xiao Gang:

Let me ask more specifically. It sounds like the industry is providing some possible tools, and you're also familiarizing yourself with these tools, from Photoshop to Grasshopper to some advanced tools and methods that I might not understand. This process is certainly not linear. Were there any significant hurdles for you, or where do you think the barriers in this industry lie for an average architectural background? How did you overcome them?

Simon:

Although traditional Chinese culture often says, "Where there is a will, there is a way," and "Nothing in the world is difficult for one who sets their mind to it," I tend to be less steadfast when facing difficulties. So when I genuinely want to accomplish something, I need a lot of continuous, timely positive feedback. I now habitually create positive feedback for myself, and I would give the same advice to anyone aspiring to enter this challenging research field.

Even though the tools had become relatively simple, if you’re fighting alone and groping in the dark, it’s still quite difficult; you need encouragement and some companions.

There were a few key moments: one was at the beginning of 2017 when a colleague from UCL returned to Beijing and was working at a well-known company called "Deck Wisdom," which created many interactive gardens. I wonder if you’ve visited Haidian Park, where you can use telescopes to view plants and get relevant information, and it can also provide interactive scene information during movement—it's very interesting.

At that time, their founder, Li Changlin, was quite enthusiastic when I mentioned the technologies I was focusing on. He then actually hired me and provided equipment that I could access remotely to work on what we wanted. Our idea was that as long as we gave the AI a line drawing, a deep learning model could map it directly into a complete rendered image, without anyone needing to add textures or lighting.

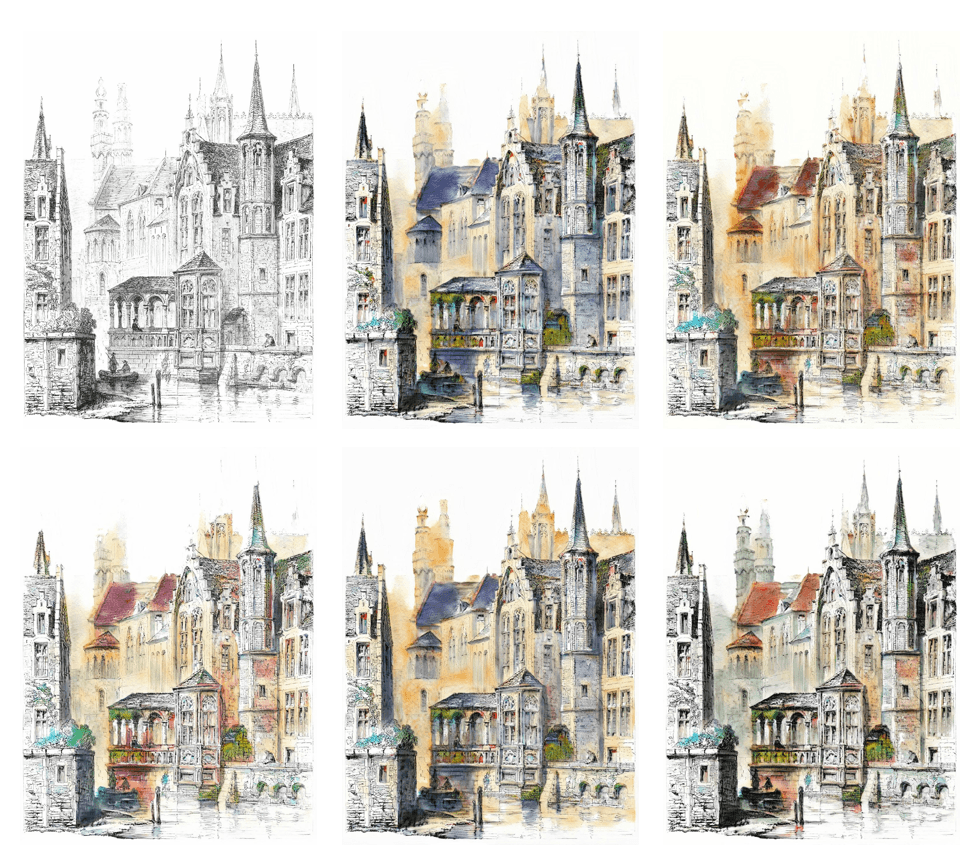

Line drawing to rendered image

Pix2pix & CycleGAN: image generation with generative adversarial networks (Pix2pix is trained with supervision on paired images; CycleGAN works with unpaired image sets)

Source: Berkeley AI Research (BAIR) Laboratory

Left: Direct output of rendered image, Right: Original screenshot from SketchUp (SU)

However, due to our capabilities and the limitations of the algorithms at the time, this project was not fully realized. But it left a "legacy": the realization that the yield rate of AI as a tool is relatively low. You might generate 100 images and find only two to five of them usable, which makes it subpar as a tool.

Line drawing to rendered image

Testing AI generation on architectural sketches from outside the training dataset

But if my role is that of an artist, I can accept this tool. So the collection of images we generated was entered in the first global AI art competition, jointly held by Tsinghua University and the Art Evaluation Network, where we won a third prize and a potential newcomer award. Of course, these aren't particularly prestigious awards, but for a newcomer entering this field, they were sufficient acknowledgment to keep me motivated to move forward.

So, from this opportunity, I felt I had enough motivation to continue.

3.

Personal Qualities, Talents:

The Ability to Connect the Dots

/ / / / / /

Xiao Gang:

From my understanding, you are one of the pioneers in the field of AI-based architectural creation. What qualities do you think you possess, or what serendipitous or inevitable factors have led you to this point?

Simon:

First, let me clarify that I'm not particularly technically skilled or a pioneer. I just found a niche where there are relatively few competitors, so I may have stumbled upon a clever opportunity. Especially in AI, I only understand some basic principles and can use the tools and make some modifications.

If I had to mention a trait, from another perspective, my flaw is that I tend to only scratch the surface of many things and don't delve deeply; I learn a bit about everything. Conversely, this gives me the ability to connect the dots, as I excel in linking seemingly unrelated elements, including models within AI and even outside architecture—this capability of connecting dots is quite valuable in this era.

On one hand, human technological development has expanded significantly, and it's no longer possible to say, "I spent ten years in isolation," to master several disciplines; it’s hard to even master one discipline nowadays. On the other hand, information spreads very quickly, and the resources available to you are abundant, allowing you to maximize the utility of the information you can access through your ability to connect the dots.

Of course, I'm not comparing myself to Steve Jobs, but I think of this example: Jobs didn’t invent anything on the iPhone—touchscreens, operating systems, interaction modes, cameras, etc., were all existing technologies. But he composed them into a new masterpiece—something a person who has honed the ability to connect the dots to the extreme can create. I have a bit of that talent in this regard.

4.

Biology:

Drawing on Complex Systems to Expand Human Limitations

/ / / / / /

Xiao Gang:

In your introduction, you describe yourself as a cross-disciplinary researcher in architecture, artificial intelligence, and biology. Could you elaborate on the third field?

Simon:

As for biology: during my master's at UCL, I studied under a supervisor named Claudia Pasquero, who focuses on crossover research between architecture and biology. Now that I'm doing my PhD under her, I certainly need to delve into this area; otherwise, I won't graduate (laughs).

I was more interested in AI and didn't have much enthusiasm for biology at first; it was mainly my supervisor's direction that drew me in. Now I think this work is quite significant. For example, my supervisor designed membrane structures for a building that incorporate living algae. By controlling the growth of the algae, the system could generate electricity and turn the building into something akin to an ordinary tree, capable of carbon fixation and other basic plant functions.

When I first started studying, I didn’t understand this concept and thought it was redundant; wouldn’t it be better to just build a house and plant a couple of trees? But now I find this fascinating—she is attempting to break the boundaries between architecture, human society, constructed environments, and nature. For example, our cities face many issues, one of the roots being that our urban and architectural systems are too simplistic. What does "too simplistic" mean?

In biology, there are numerous ecological niches, and many levels exist within them, with each ecological niche possibly housing multiple species. If a certain species within an ecological niche dies out, other species can fill the gap. If a particular species within an ecological niche goes extinct, species from other niches may mutate to occupy that niche.

From the perspective of human livability, ecosystems appear unstable. However, from the ecological perspective, they are quite stable.

So, looking back at her work, she introduces existing natural elements to complicate our constructed systems. Once they become complex, they gain two capabilities: first, they can better integrate into the Earth’s ecological system. Currently, urban systems are isolated from the Earth’s ecosystem. For instance, we must build a power station specifically to provide energy to the city; they cannot self-sustain energy. Of course, some renewable energies like wind and solar power offer some passive generation, which is somewhat better. If we don’t manage our cities, they gradually deteriorate and decline, unable to remain revitalized like an old tree; they are fragile systems that require continuous external maintenance.

Therefore, she aims to enhance the resilience of our cities, incorporating them more deeply into ecological systems, elevating the level beyond "simple carbon neutrality."

On the other hand, humans have limits. The knowledge we've accumulated over the past few thousand years since writing emerged is what we possess. However, there are alternative research systems, such as directly using slime molds to create biological computers by setting different connection points to guide slime molds in generating urban layouts; or how we might control bees to construct buildings.

Sometimes, outsiders may not comprehend this and think it lacks significance. The reason for such attempts is that different species have evolved over millions to billions of years, accumulating wisdom that is unimaginable.

No matter how we design, we use only our known wisdom or experience. Introducing biology into design allows us to borrow from their evolution, at least offering a possibility to break some of our human limitations. So, I find this quite intriguing.

5.

AI Evolution and Instincts

/ / / / / /

Xiao Gang:

I want to jump to a more philosophical part and discuss your views on AI. Earlier, we talked about evolution and complexity, and I’m particularly interested in the AI generation process. Some compare it to rapid evolution, while I find it at times close to instinctual responses lacking self-awareness, simulating some instinctual reactions with computers. These instinctual responses may sometimes seem related to human instincts while at other times feel unfamiliar. What do you think? Have you considered whether your creations simulate evolution or biological instincts, which are currently unpredictable?

Simon:

Let me first separate evolution and instincts. For evolution, the simplest example is the genetic algorithm, which simulates an evolutionary process and approaches an optimal target through mutation and selection. The way gradient descent searches for an optimum (ideally the global one) in AI models somewhat mirrors that evolutionary process, borrowing wisdom from biological evolution.
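As a rough illustration of the mutation-and-selection loop, here is a toy genetic algorithm that evolves bit strings toward a target pattern. Everything in it (the target, the population size, the mutation rate, keeping the fitter half as unchanged parents) is an assumption made for the example, not a description of any tool mentioned in the interview.

```python
import random


def fitness(genome, target):
    """Higher is better: the number of positions that match the target."""
    return sum(g == t for g, t in zip(genome, target))


def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(target, pop_size=30, generations=60, rate=0.05, seed=0):
    """Run a simple genetic algorithm; return the best genome and the fitness history."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in target] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Selection: rank by fitness and keep the fitter half as parents.
        population.sort(key=lambda g: fitness(g, target), reverse=True)
        history.append(fitness(population[0], target))
        parents = population[: pop_size // 2]
        # Mutation: refill the population with mutated copies of random parents.
        children = [mutate(rng.choice(parents), rate, rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    best = max(population, key=lambda g: fitness(g, target))
    return best, history


TARGET = [1, 0] * 10  # hypothetical 20-bit "ideal design"
best, history = evolve(TARGET)
print("best fitness per generation:", history[0], "->", fitness(best, TARGET))
```

Because the parents survive unchanged, the best fitness never gets worse from one generation to the next; the mutations supply the variation that selection then filters.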

Instincts can be understood as the primal impulses of neurons. For example, if you poke me with a needle, my neurons drive me to pull my hand back; that is an instinct.

The earliest neural networks mimicked the visual cells of frogs, creating a visual neural network that indeed simulated primal neural impulses and evolution. This relates to the earlier point that in the initial stages of some disciplines, much experience must be drawn from organisms that have evolved over millions of years, and this is where it gets interesting.

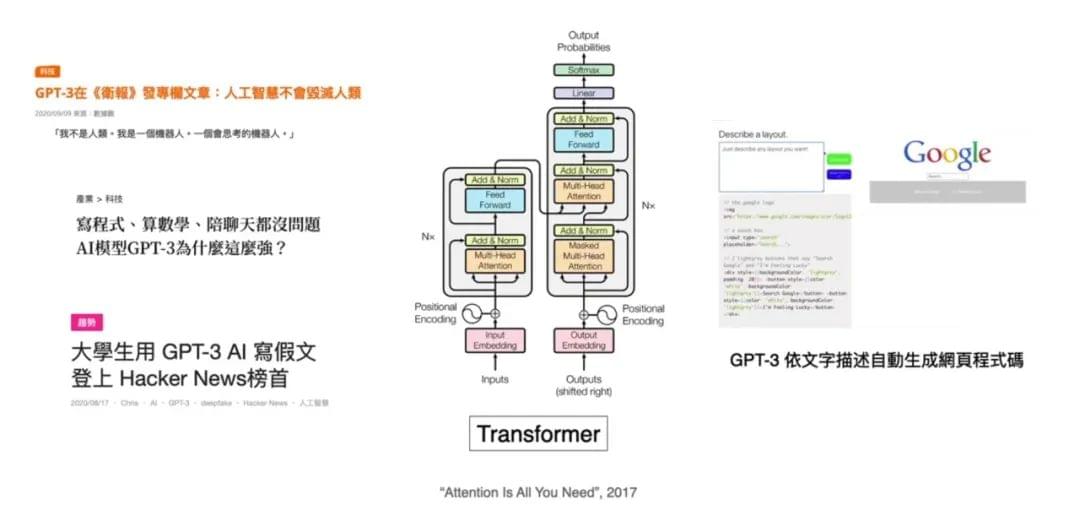

However, now some newer neural networks, like Transformers and ResNet, might not resemble evolution and biological neural networks as closely.

- Popular science:

①Transformer: http://www.uml.org.cn/ai/201910233.asp

Since this question is somewhat vague, I can only say that the field did start by borrowing from these mechanisms. While the models we currently use still carry some shadows of evolution and instinctual impulses, they differ significantly from biological evolution and instincts, for two reasons. The first is that biology and computers are fundamentally different structural systems, which we can refer to as "carbon-based life" and "silicon-based life." When forging silicon-based life, we might borrow seeds from carbon-based life, but ultimately, we will develop a set of algorithms more suitable for silicon-based life.

The second reason is that the terms "instinct" and "evolution" are generally used for conscious beings. Since we currently believe AI lacks consciousness, the definitions differ, so we can only say it borrows some principles and algorithms from them.

6.

Is AI Based on Algorithms or Data?

/ / / / / /

Xiao Gang:

Earlier, you mentioned that AI technology has two paths: one is oriented towards imitating biological instincts, while the other approaches from rational logic. We previously saw some AI using vast amounts of random data, letting it grow through a black box. Others opposed this method, arguing that true AI should still mimic the intelligence and logic that humans currently possess. What are your thoughts on this? Have you encountered forked paths in your creations?

Simon:

The two paths you mentioned are actually a single path; a model can simultaneously draw from both genetic algorithms and neural networks, or rather, these two have inherent similarities. A neural network is a structure where each node can be understood as a neuron. When it's constructed, its responsibilities, weights, and activation functions are not predetermined.

It requires a process similar to genetic algorithms to assign tasks to each neuron. Like a child at two or three years old, when the number of neurons peaks, they are not the smartest; only later, as unused neurons are pruned, does intelligence develop.

AI functions similarly; it starts with a framework in which each point's responsibilities are undetermined. Whether it's a decision tree in machine learning or a deep neural network in deep learning, learning is an optimization process, and for neural networks that is usually gradient descent. In short, it requires an algorithm that can update the network based on learning samples to settle the functions and weights of each point in the entire framework.
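The update-from-samples idea can be sketched with the smallest possible "network": one weight and one bias fitted by gradient descent. The data (points on the line y = 2x + 1), the learning rate, and the step count are assumptions picked for the example.

```python
def train_linear(samples, lr=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # the "responsibilities" start out undetermined
    n = len(samples)
    losses = []
    for _ in range(steps):
        grad_w = grad_b = loss = 0.0
        for x, y in samples:
            err = (w * x + b) - y
            loss += err * err / n
            grad_w += 2 * err * x / n  # d(loss)/dw
            grad_b += 2 * err / n      # d(loss)/db
        losses.append(loss)
        # Step against the gradient to reduce the error on the samples.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


# Hypothetical learning samples drawn from y = 2x + 1.
SAMPLES = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]
w, b, losses = train_linear(SAMPLES)
print(f"w = {w:.3f}, b = {b:.3f}, first loss = {losses[0]:.3f}, last loss = {losses[-1]:.2e}")
```

A deep network does the same thing with millions of weights at once, with the gradients computed automatically by backpropagation.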

Eventually, when training is completed, some points will have larger weights and more responsibilities, while others will serve different functions, and some might even become redundant.

Thus, in industry, deploying a model can be achieved through model distillation and pruning, eliminating redundant neurons to optimize the model and lighten its load—so it is quite similar to humans.
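One simple way to picture pruning is magnitude pruning: weights close to zero contribute little, so they are set to zero and can be skipped at inference time. The sketch below assumes this heuristic and uses made-up weights; it does not cover distillation, and real deployments prune whole layers or channels with framework tooling.

```python
def prune_by_magnitude(weights, keep_fraction):
    """Zero out all but the largest-magnitude weights."""
    keep = max(1, round(len(weights) * keep_fraction))
    # Rank weight indices from largest to smallest absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(ranked[:keep])
    return [w if i in kept else 0.0 for i, w in enumerate(weights)]


# Hypothetical trained weights: three matter, three are nearly redundant.
trained = [0.9, -0.02, 0.4, 0.01, -0.7, 0.03]
pruned = prune_by_magnitude(trained, keep_fraction=0.5)
print(pruned)
```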

Your second question is, Is AI based on data or algorithms? Generally, earlier AI was algorithm-based. The early AI was referred to as "artificial decision trees," representing a flowchart where each branch has a node; a decision flows to this point, and depending on conditions, it moves in various directions. This was prevalent in architecture during the 1960s and 70s when control theory was popular, but later, it became clear that this approach had limitations and could only address simpler tasks.

Now, mainstream AI largely relies on data for training, but the networks are not constructed at random; they are built on the collective experience of designing different network frameworks for different tasks. For instance, convolutional neural networks are generally used for image processing, while long short-term memory recurrent neural networks (LSTM) and Transformers are applied in language processing. Different network architectures are required for different tasks, and you must feed the network task-related data to align it with the relevant knowledge.

The mainstream approach evolved from artificial decision trees, which involved predefining algorithms. However, over time, it was realized that conditions in decision trees could also be autonomously learned through data feeding. Now, decision trees have become a type of machine learning algorithm.
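The shift from hand-written conditions to learned ones can be shown with a one-node decision tree (a "decision stump"). In the sketch below, the room-area task, the 20 m² rule, and the labelled samples are all hypothetical; the point is only that the second function derives its threshold from data instead of from a person.

```python
def handwritten_rule(room_area_m2):
    """An 'artificial decision tree': a person chose the threshold in advance."""
    return "large" if room_area_m2 >= 20 else "small"


def learn_threshold(samples):
    """Pick the split point that misclassifies the fewest labelled samples."""
    values = sorted({x for x, _ in samples})
    # Candidate thresholds sit halfway between neighbouring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        return sum((x >= threshold) != (label == "large") for x, label in samples)

    return min(candidates, key=errors)


# Hypothetical labelled data: room areas in square metres.
SAMPLES = [(8, "small"), (12, "small"), (15, "small"), (22, "large"), (30, "large")]
threshold = learn_threshold(SAMPLES)
print("hand-written threshold: 20, learned threshold:", threshold)
```

Library decision trees repeat this kind of split search recursively over many features, usually with a purity measure such as Gini impurity in place of the raw error count.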

In summary, very few AI systems now involve predefining all rules, as their performance cannot match that of those trained with data. However, in fields involving human life safety, such as autonomous driving, many systems still rely on reinforcement learning, driven by data. There has been ongoing debate in the industry because, despite the emergence of many analytical tools, AI remains somewhat of a black box, making it difficult to pinpoint errors in training when incidents occur. Hence, a viewpoint has emerged: In any domain related to life safety, where fault tolerance is particularly low, human-written algorithms should be used because we can clearly understand every step taken.

However, from what I know, mainstream autonomous driving companies still rely on data, because hand-writing the rules for an autonomous system is incredibly challenging except for very simple tasks. For instance, I recall Alstom, a French company whose situation resembled Huawei's: the US detained its executives, and the company was subsequently acquired and dismantled. Huawei, however, has not reached that point.

In the past, Alstom built an automated light rail system in France. If I recall correctly, it involved either driverless light rail or the signaling system, and it was not based on artificial intelligence; it even ran on analog rather than digital signals. This was quite remarkable, but it could only be achieved because the task was relatively simple. On a light rail line, every vehicle's schedule is known and there is enough buffer distance, so its complexity cannot be compared with actual road conditions. Real road situations are highly complex, making it nearly impossible to handle them through purely hand-written algorithms.

7.

Challenges in AI Creation:

Yield Rate and Industrial Standards

/ / / / / /

Xiao Gang:

Some individuals are developing AI applications for construction drawing optimization, which seems to lean more towards the algorithmic path. However, they struggle to create aesthetically pleasing architectural images, unlike what you produce.

Simon:

I haven't specifically looked into the algorithms behind construction drawing AI, but based on my limited knowledge, I suspect they are not data-trained; they likely employ artificial decision trees or pre-written algorithms. There are indeed clear conditions—what constitutes a violation in construction drawings can be described algorithmically.

Audience A:

That is indeed programmed directly. I have a friend working at iFlytek in this area, and he collaborates closely with seasoned draftsmen. After they complete tasks like strong loading or parking lots, they can quickly bring newcomers up to speed, making them feel like they have two years of experience in an instant.

Xiao Gang:

You recently released an AI-generated spatial piece. In the creation process, what do you find to be the main challenges of AI-generated spaces?

Simon:

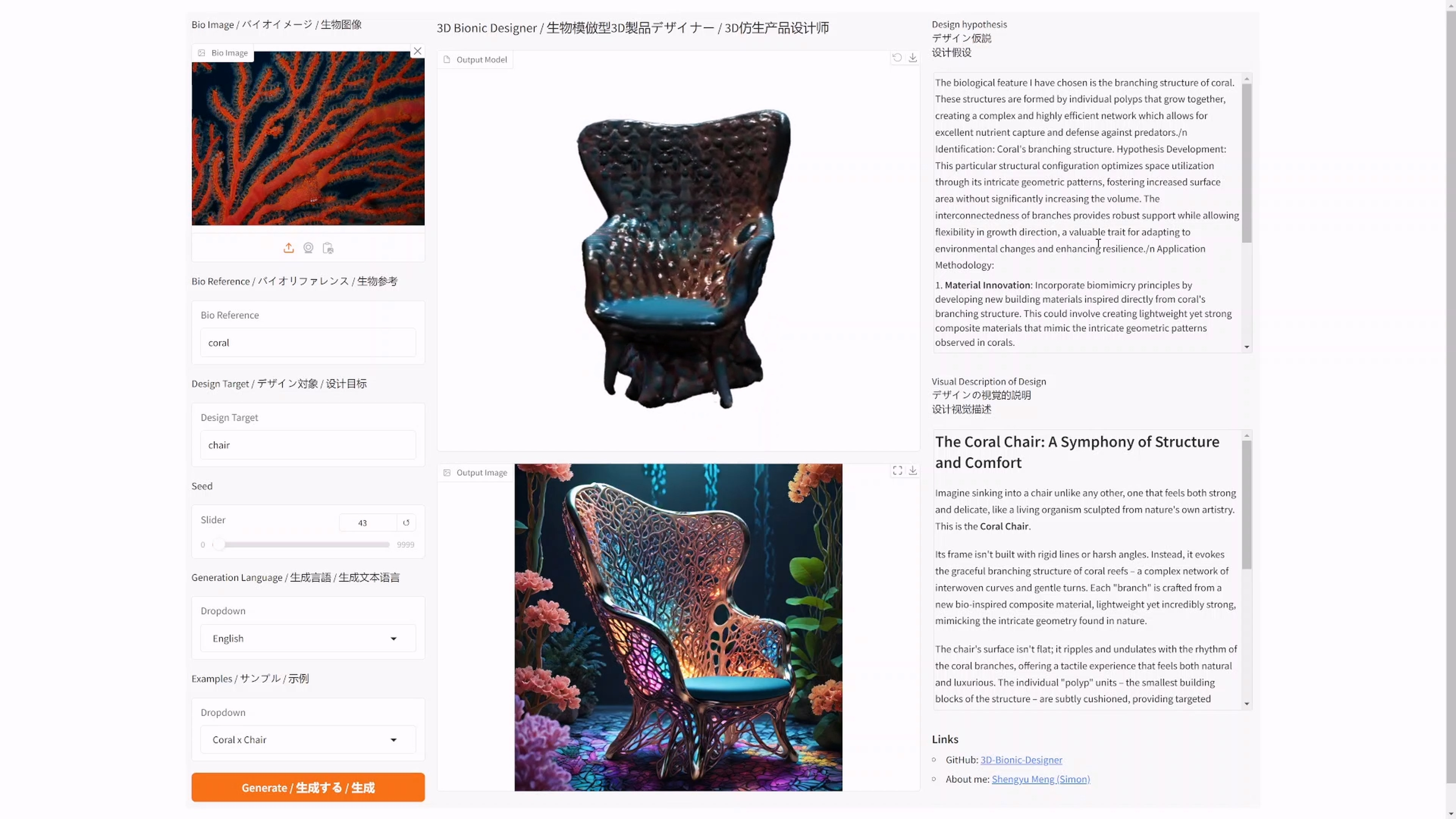

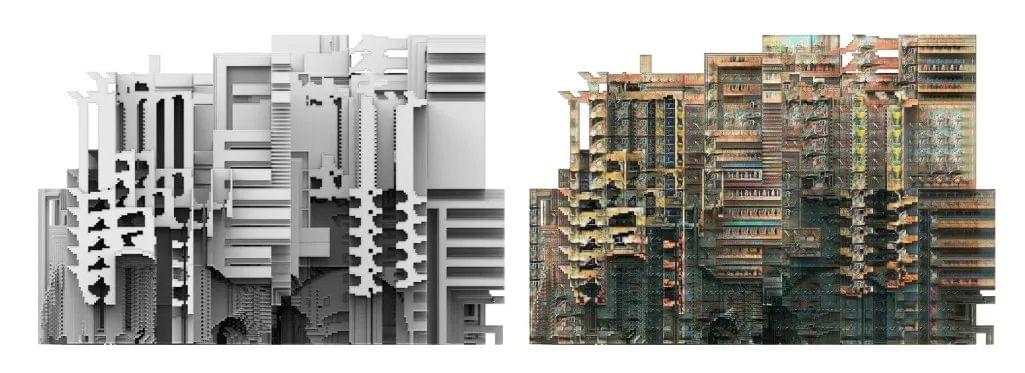

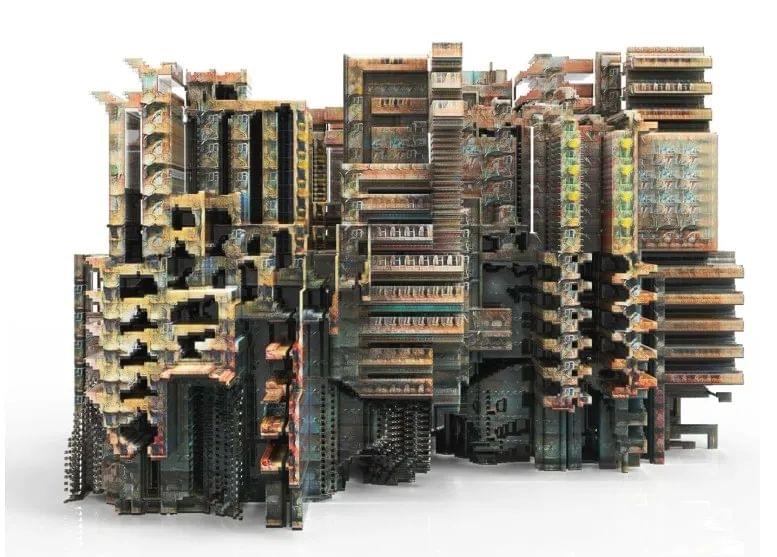

First, let me clarify that the recent work you saw is technically not AI; it’s generated using fractal algorithms, producing 3D outputs directly. It does utilize some AI technology, where you set parameters and use a genetic algorithm tool to allow the work to mutate, and then you can select a preferred option. So, it may involve 5% AI.

Regarding AI-generated content, I believe there are currently two main challenges: yield rate and industrial standards. I previously mentioned yield rate; the tools we used in 2016 and 2017 were black boxes, making it difficult to direct them toward desired outcomes. When you attempt to adjust them, they may cause issues elsewhere, leading to a low yield rate, which is a significant challenge.

The second major challenge lies in industrial standards. For example, suppose we need a high-resolution image suitable for printing, say 5000×5000. No existing model can directly produce such an image, so you have to upscale it algorithmically, which can result in blurriness and inferior print quality. That is a straightforward industrial standard.

More complex industrial standards come up in the work of people like Hu Hu, who create game spaces, where modeling must meet many requirements. The model must not only look good but also satisfy constraints on polygon count, material variety, layering, and so on. AI-generated three-dimensional objects often struggle to meet such standards.

Currently, I think the most significant difficulties in content generation are these two aspects.

Hu Hu:

I’ll quickly respond since it’s somewhat off-topic. Indeed, industrial standards present a significant challenge for AI-generated work in the gaming industry. Game development resembles construction, where a building blueprint must be executed on-site. The flat plans drawn by students and architects are not usable for contractors, which aligns with what was just mentioned. Regardless of whether AI generates content or an original artist creates models, all must ultimately be realized by dedicated personnel.

8.

AI Directions Worth Noting for Architects: Transformer, GAN, Zero-shot Learning

/ / / / / /

Xiao Gang:

During our last conversation, I realized that you focus on the evolution of algorithms, especially some open-source ones. Could you discuss the main directions of technological evolution in recent years, particularly those worth noting for architects?

Simon:

First, I want to say that there are three types of artificial intelligence algorithms worth noting for architects: one is the content generation I am currently involved in; another is building performance optimization, whether for load analysis or for optimizing energy and daylighting; the third is content generation directly connected to production processes. The generated material is certainly valuable in the industry, but it may not be as visually intuitive; personally, I tend to pursue more visually appealing content.

In the realm of content generation, I believe the most valuable developments over the past two years stem from Generative Adversarial Networks (GAN). One notable example is Pix2pix and CycleGAN, which can translate one set of images into another. Another significant system is StyleGAN, an unsupervised model that, once fed a set of similar images, can generate new images that are similar yet distinct, as in face generation.

In the three-dimensional realm, there are models like ShapeHD and RasGAN that can learn a plethora of voxel models, such as chairs, and then generate new ones. These GAN-based models, both two-dimensional and three-dimensional, are worth paying attention to.

In the last two years, two newer developments have emerged. One is based on Transformers. Transformers were originally models for language processing, notable for their ability to connect content that sits far apart in a long context, such as a lengthy narrative. Earlier AI models had a problem: fed a 1000-word article, they would forget the beginning by the time they reached the end. Transformers can bridge such distances and create meaningful links between widely separated pieces of content.
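The mechanism behind this long-range linking is attention: every position is compared with every other position directly, so distance in the sequence does not matter. The sketch below implements scaled dot-product attention for a single query in plain Python. The 1000-position toy sequence and its vectors are invented for illustration; real Transformers learn the query, key, and value projections and use many attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Compare the query with every position, however far away it is.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights


# Toy sequence of 1000 positions: only the very first one is relevant to the query.
keys = [[4.0, 0.0]] + [[0.0, 1.0]] * 999
values = [[1.0]] + [[0.0]] * 999
query = [4.0, 0.0]  # imagine this is asked at the end of the sequence

output, weights = attention(query, keys, values)
print(f"weight on position 0: {weights[0]:.3f}, output: {output[0]:.3f}")
```

Even with 999 positions in between, almost all of the attention weight lands on the first position, which is the "not forgetting the beginning" behaviour described above.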

This methodology has also been applied to image generation models; each image has semantic meaning, and you can describe a picture with language. Essentially, this allows AI to understand the relationships between elements within the generated image. For example, when generating a street scene where it’s raining, AI can predict that people in the scene may be holding umbrellas because it has learned the relationship between umbrellas and rain. This ability makes the generated images more realistic. Recently, many high-performance image models have emerged due to integrating Transformers.

The other development is an application of zero-shot learning: CLIP. The recent models that generate images from text combine CLIP with VQGAN.

- As of February 8, 2022, diffusion models for image generation are also worth noting, as is NeRF, a model based on neural differentiable rendering that is currently attracting attention.

- Popular science:

VQGAN is a model to which you can feed a large number of images, and it will generate similar content. However, unlike StyleGAN, it doesn't require those images to be homogeneous; it can mix all kinds of images together and still generate highly realistic outputs. The advantage is that you don't need to train a model specifically for a particular task, but extracting specific items from the vast space of possible results can be challenging.

At this juncture, CLIP comes into play as it can understand the relationship between images and text; when you give it a piece of text, it can determine how closely the generated images align with that text, guiding VQGAN to produce what it deems the most relevant image. This allows for text-to-image generation.
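The guidance loop described above can be sketched in a few lines. This is only a toy under loud assumptions: `toy_generator` stands in for VQGAN, the cosine score stands in for CLIP's image-text similarity, the embedding values are invented, and random hill climbing replaces the gradient-based optimization that real CLIP + VQGAN notebooks typically use.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def toy_generator(latent):
    """Stand-in for VQGAN: maps a latent vector to an 'image embedding'."""
    return [math.tanh(v) for v in latent]

def guided_search(text_embedding, steps=500, step_size=0.1, seed=0):
    """Nudge the latent so the generated output scores higher against the text."""
    rng = random.Random(seed)
    latent = [rng.uniform(-1, 1) for _ in text_embedding]
    start = best = cosine(toy_generator(latent), text_embedding)
    for _ in range(steps):
        candidate = [v + rng.gauss(0, step_size) for v in latent]
        score = cosine(toy_generator(candidate), text_embedding)
        if score > best:  # keep only changes the "CLIP" score prefers
            latent, best = candidate, score
    return start, best

# Hypothetical embedding of a text prompt.
start, best = guided_search([0.2, -0.5, 0.9, 0.1])
print(f"similarity before guidance: {start:.3f}, after: {best:.3f}")
```

The generator never sees the text; it is only steered by the score, which is why the same scorer can be paired with different generators such as VQGAN or a diffusion model.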

So why can CLIP achieve this? There’s a concept called zero-shot learning, which allows experiences from one thing to be seamlessly transferred to another.

For instance, if we describe a panda to a child who has never seen one, we assume that the child knows what a bear is and understands the concepts of black and white. If we say, "A panda has black circles around its eyes and ears, with black feet, while the rest is mostly white," they should be able to recognize the panda upon seeing one at the zoo.

During training, CLIP learns not from simple category labels but from images paired with descriptive language, like "this is a yellow banana" or "this is a bicycle with two wheels." With this extra information, even if you describe something the model hasn't seen before, it can identify the concept based on similarities with known items. Zero-shot learning endows AI with a semblance of intelligence, enabling many generative models to better integrate into creative workflows.

Thus, I believe that Transformers, GANs, and zero-shot learning are three areas that will have considerable influence in the coming two to three years.
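The panda example can be written as a toy zero-shot classifier. Everything here is hypothetical: the attribute names and the hand-written descriptions are invented for illustration, and CLIP itself learns a shared embedding from image-text pairs rather than matching explicit attribute sets.

```python
def jaccard(a, b):
    """Overlap between two attribute sets, from 0 (disjoint) to 1 (identical)."""
    return len(a & b) / len(a | b)

# Verbal descriptions built from concepts the "child" already knows.
# "panda" has never been seen; it is only described.
descriptions = {
    "brown bear": {"bear-shaped", "brown"},
    "zebra": {"horse-shaped", "black", "white", "striped"},
    "panda": {"bear-shaped", "black", "white", "black eye patches"},
}

def zero_shot_classify(observed, descriptions):
    """Pick the description that best matches the observed attributes."""
    return max(descriptions, key=lambda name: jaccard(observed, descriptions[name]))

# What the child notices at the zoo (hypothetical detector output).
observed_at_zoo = {"bear-shaped", "black", "white", "black eye patches"}
print(zero_shot_classify(observed_at_zoo, descriptions))
```

No panda example was needed to recognize the panda; the known concepts of "bear", "black", and "white" carry over, which is the transfer the text describes.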

9.

Similarities Between AI Learning and Children’s Cognition:

Based on Algorithms/Logic

/ / / / / /

Xiao Gang:

Actually, drawing parallels between children's learning processes and AI is quite interesting. For instance, in a child's learning journey, there's semantic learning—like recognizing the characteristics of a panda—and then there's image-based cognition. AI also recognizes relationships through isomorphism in graphic features. There's also behavioral learning, which may be less common in AI. What do you think of these two aspects? You mentioned that a child might have around 200 billion neurons at the age of two, and these neurons need time to connect, isn't that similar to the growth process of algorithms?

Simon:

I can't precisely state how similar they are. First, the initial idea for artificial neural networks indeed derived from biological neurons, and the methods for seeking optimal solutions drew from evolutionary methods, so there are undoubtedly similarities. However, it’s challenging to determine how closely they align since algorithms are diverse, and each varies significantly.

Additionally, some network structures might already diverge significantly from human neurons. Nevertheless, intuitively, I believe there are some similarities, possibly related to fundamental issues. I previously heard a talk by Zheng Haoxiong*, who mentioned an enlightening point, distinguishing algorithmic generation into two types: one based on data and the other on logic.

As you mentioned, one is programmed, while the other is data-driven; originally, few wanted to delve into data-based methods. Logic-based algorithms are more intuitive, allowing you to grasp what is occurring.

A second, practical concern is that data-driven methods require substantial hardware resources, since they must process vast amounts of data in parallel. Thus, after about 2010, as Moore’s Law began to plateau and transistor counts soared, the performance of GPUs and CPUs exploded, accommodating larger models. In fact, earlier neural network models couldn’t compete with manually coded algorithms because the GPUs and processors available then couldn't process such volumes of data at once. Today, programmed algorithms still tend to be more cost-effective: code is simpler to maintain, whereas collecting and cleaning data and then training on it demands considerable effort and hardware. However, it seems that only data-driven approaches can come close to handling tasks in real-world environments.

- I suspect that on the path toward intelligence, wisdom cannot be directly coded; it must be acquired through learning, accumulation, evolution, and mutations influenced by the environment to reach a state close to intelligence. This is fundamentally why we perceive parallels between AI evolution, training, and children's growth and learning processes.

10.

Does AI Help with a Lack of Creative Content?

/ / / / / /

Xiao Gang:

In your creative process using AI, have there been moments when you felt that something could not possibly have been produced by AI, or instances that particularly surprised you?

Simon:

Indeed, there have been many such moments. Almost every time I use a new model or algorithm, I am initially astonished. As I create more, I gradually understand what it can do, and that sense of surprise diminishes. This drives me to seek new algorithms or make novel connections with existing ones. Overall, there isn't a specific work that surprised me; however, at the beginning of each series of works, I usually feel quite surprised.

Xiao Gang:

From a macro perspective, as art and architecture evolve, they seem to be becoming increasingly scarce. Regardless of the maturity or reality of AI creation, do you think its unexpectedness can offer insights or assistance to the dwindling realms of art and architecture?

Simon:

- I believe AI serves as an amplifier, enabling those with innate creativity or ideas to increase their output or enhance the surface quality of their works. However, it may not directly transform those fields.

This relates to how we define scarcity. If it refers to a lack of groundbreaking ideas that can profoundly reshape academia or industry, I don't think AI will produce that.

- Because such concepts emerge from the creator’s deep insights into society, historical phases, or a given industry. The masters who previously led architectural revolutions had profound insights into society, which AI cannot replicate.

However, if scarcity is not about altering the course of history but about generating medium-to-high-quality works or consumer products, I believe AI can indeed play a role. Currently, there is a significant scarcity of quality content on the market. Take games, for instance; this is something I heard at a gaming forum I attended. A great game, like Grand Theft Auto or Breath of the Wild, takes hundreds of developers and engineers years to create, yet players can complete that 10GB game in just a few days, consuming the content almost instantly.

But if we are to transition into the metaverse, we must have enough—not necessarily classic works but at least high-quality and non-repetitive, personalized pieces to provide abundant material. Producing such content through traditional development cycles is incredibly challenging due to the slow pace and high costs.

- This is why self-media has gained significant popularity, as self-media thrives on easily generated content. The least expensive way to produce diverse consumer content is to document and share surrounding experiences. People generally enjoy watching and engaging with shared everyday occurrences, which also incur low costs; this is the current trend.

- If generative AI becomes widely accessible, the quantity of medium-to-high-quality works will increase, enabling creators of these works to access a multiplier for their output. They might have only managed one piece in a month, but with AI tools, they could create twenty. Therefore, AI can somewhat alleviate scarcity in this tier of content.

Xiao Gang:

Indeed, AI serves as a tool for creators to boost their productivity.

Simon:

Currently, that's true. After all, the essence of a work is its core spirit: what is its main idea? Who is the audience? What problems does it aim to solve? Until AI reaches the point of strong or general artificial intelligence, it cannot help you answer these questions. And once you have answered them, the essence of the piece is already largely determined. Therefore, it still relies on human effort.

11.

Image Quality, Generalization, Speed

/ / / / / /

Xiao Gang:

Recently, there has been a surge of NFT avatars that appear to be generated by AI, many of which utilize recognizable creative methods. Do you believe that’s the case?

Simon:

Those avatars can indeed be AI-generated, but as far as I know, they can also be created without AI. A simple script can assemble pre-defined components into fixed slots: prepare a set of assets for each part and randomly piece them together. This approach is more controllable and cost-effective than AI, which often introduces uncertainty and uncontrollable elements, and scripted compositions can yield higher quality.
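A minimal sketch of such a script is below. The layer names and options are hypothetical placeholders; a real pipeline would composite pre-drawn image layers for each chosen part instead of returning a dictionary.

```python
import random

# Hypothetical asset library: one list of pre-drawn options per layer.
LAYERS = {
    "background": ["blue", "orange", "grey"],
    "body": ["robot", "ape", "cat"],
    "eyes": ["sleepy", "laser", "sunglasses"],
    "hat": ["none", "crown", "beanie"],
}

def generate_avatars(count, seed=0):
    """Return `count` distinct avatars, each a dict mapping layer -> chosen part."""
    total = 1
    for options in LAYERS.values():
        total *= len(options)
    if count > total:
        raise ValueError(f"only {total} unique combinations exist")
    rng = random.Random(seed)
    seen, avatars = set(), []
    while len(avatars) < count:
        choice = tuple(rng.choice(options) for options in LAYERS.values())
        if choice not in seen:  # skip duplicates so every avatar is unique
            seen.add(choice)
            avatars.append(dict(zip(LAYERS, choice)))
    return avatars

for avatar in generate_avatars(3):
    print(avatar)
```

Four layers with three options each already give 81 unique combinations, and every output is guaranteed to be a clean combination of hand-made parts, which is the controllability advantage mentioned above.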

Xiao Gang:

You’ve mentioned "blurriness" multiple times. Transitioning from an unclear initial work to a structured, clearer piece—such as the background image behind you—does that step present challenges? Is it feasible for AI to achieve precision in creation within a short timeframe?

Simon:

It's not particularly difficult; for example, StyleGAN can already produce many hyper-realistic images that are nearly indistinguishable from real ones. A competition called the Deepfake Detection Challenge has even been launched to identify generated fake faces, because human eyes often cannot distinguish them and machines are needed for recognition.

What I refer to as "blurriness" appears in certain contexts. If we picture AI's capabilities as a triangle of image quality, generalization, and speed, the three are in tension, and you can typically achieve only two of them at once. For instance, if I want AI to produce a realistic face, whether African, Caucasian, or Asian, male or female, old or young, it can deliver an accurate representation. However, if I ask it to create a face that falls between an alien and a human, the result may become blurry because of the challenge of generalization. Achieving both generalization and realism often requires sacrificing speed, since it takes a prolonged search within the model to arrive at a clearer image. So it's not inherently difficult; it depends on various conditions, and sometimes clarity can be achieved.

Audience B:

I have a question. Is "generalization" referring to generating images that were not included in the training set?

Simon:

Yes, it refers to generating images that significantly deviate from the training set.

Audience B:

Also, moving from blurriness to clarity—does that process involve super-resolution techniques?

Simon:

If something is inherently blurry, applying super-resolution might sharpen its edges, but you'll still feel there should be additional information. It merely enhances sharpness. Utilizing CLIP aids in achieving clarity; for example, if you generate an image with VQGAN, not every image it produces will be clear; it could be a mix of blurry and clear outputs. However, the images used during CLIP training were all sharp, so when you guide it, CLIP will seek images that are not only content-relevant but also as clear as possible. I’ve noticed that using CLIP with diffusion often results in clearer images, likely due to diffusion’s inherent capabilities, although it typically requires longer iterations.

12.

Alien Creatures:

AI's Recognition of Different Objects

/ / / / / /

Xiao Gang:

You created two works featuring alien creatures; their boundaries appear clearer than those of architectural or spatial images. What differences do you encounter during the operational process for these two types?

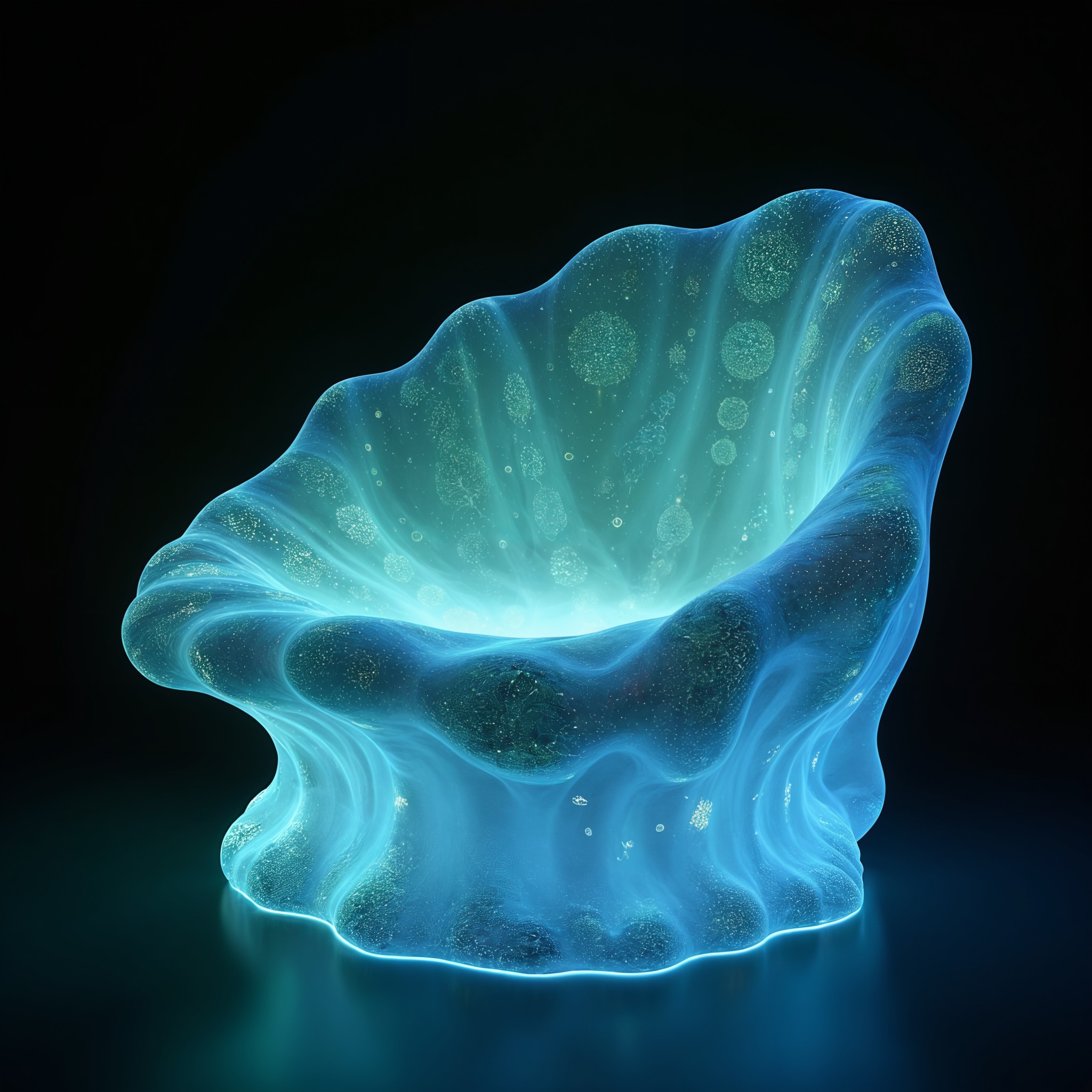

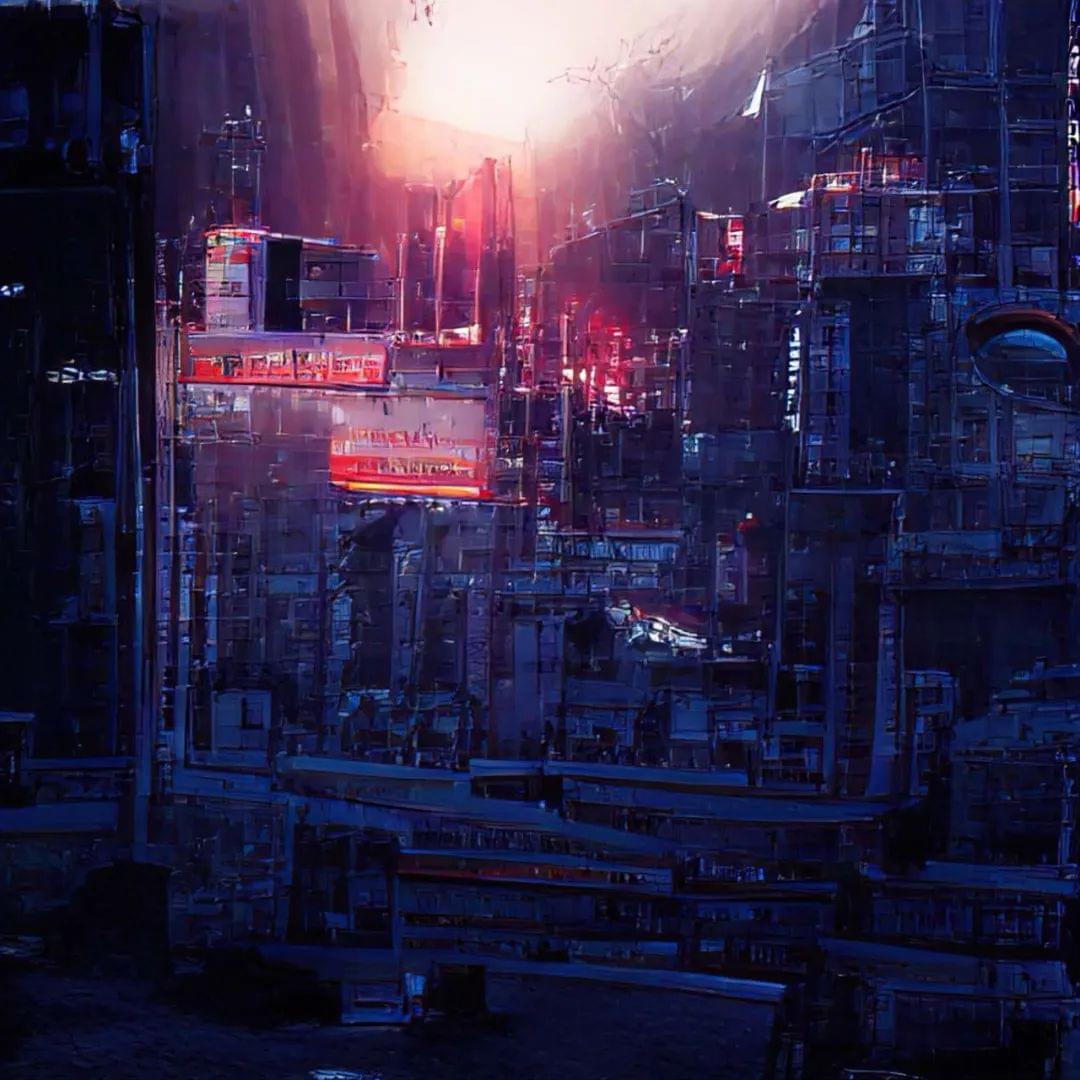

AI-generated apocalyptic creatures

Generated using CLIP + VQGAN/diffusion Model

Based on the text: cyborg jellyfish survive in nuclear war

Simon:

In those cases, there isn’t much difference; they were generated using text with a diffusion model. In fact, from AI's perspective, biological forms may be simpler than architecture. Architectural scenarios are inherently complex, and features like doors and windows can be as complex as a living organism in AI's perception.

Additionally, the diversity in architectural facades adds to the uncertainty when requesting AI to generate a structure. Even if you limit it to Gothic architecture, there will still be significant variations, leading to blurred boundaries.

Conversely, for AI, generating an image of a dog or a cat tends to be less variable; the differences between cats are minimal compared to those among various Gothic structures, which gives AI a stronger sense of certainty in this context. Hence, I feel that generating biological entities may be somewhat easier for those entering the realm of AI creation.

13.

Beginner’s Guide and Recommended Tutorials

/ / / / / /

Xiao Gang:

For an ordinary architect interested in AI, besides tutorials on Bilibili, what beginner-friendly resources would you recommend? How should a novice take their first step?

Simon:

There are three aspects. First, you need to find some cases to run; you should see the results to grasp what it can do. Second, you still need to understand the underlying principles. Since it is a black box, not knowing the principles makes it harder to control effectively. Third, you must be mentally prepared for a lengthy learning process; the learning curve is quite steep, so prepare some positive incentives for yourself.

Self-Learning Guide

Step 1

Public accounts: Quantum Bit, CSDN, Machine Heart, Paper Weekly

In terms of the first step, you can follow some Chinese public accounts, such as: Quantum Bit, CSDN, Machine Heart, and Paper Weekly. Some of these accounts will introduce interesting new AI algorithms, and when you find them appealing, you can look up corresponding source code on GitHub. At this stage, you need basic skills to comprehend the material. You should also set up the environment required to run the programs, whether it’s installing Anaconda on your computer or setting up Ubuntu, or utilizing Google Colab's virtual environment; in short, you need to be able to get it running.

Step 2

Courses: Andrew Ng - Deep Learning (Official website deeplearning.ai, the Chinese version on NetEase OpenCourse does have subtitles but seems to lack accompanying assignments); Bilibili @Learning AI with Li Mu, @Tongji Zihao Brother

For the second step, if you genuinely want to learn AI, I would recommend taking Andrew Ng's course. I spent two or three years gradually digesting it. During the deep learning specialization courses, you'll find yourself lacking in foundational knowledge; for example, you might realize you don’t know linear algebra or advanced calculus, prompting you to delve into the "Four Great Heavenly Books" of calculus. You may also discover that you don't know Python, requiring you to learn Python through other resources.

Step 3

Community (with the same name as the public account): Infinite Community Mixlab

Lastly, for positive reinforcement, I suggest finding like-minded partners and joining communities where you can ask questions and feel everyone progressing together. On the other hand, maintain your self-media presence; it doesn’t have to be formal; even sharing what you’ve created on social media will garner likes from people around you who haven’t encountered such content before, providing you with motivation to continue.

14.

Influential Creators

/ / / / / /

Xiao Gang:

Who do you consider the most influential creator in your life? It could be an architect, artist, or writer—anyone.

Simon:

It’s challenging to pinpoint a single individual as the most influential; I often find that I remember where I saw something but forget who created it.

To elaborate, there are certainly some masters who have profoundly influenced me, but when asked this question, my first instinct is not to mention those names because they shine like brilliant stars in the distance; while they’re bright, they don’t illuminate the path beneath our feet. The pace of change is so rapid now that we might need figures who act like streetlights, guiding us step by step.

In this sense, I think those actively creating and sharing their thoughts or tutorials online have influenced me more in practice. Their continuous work allows you to sense and uncover their creative pathways or techniques.

Thus, I would advise people to focus more on individuals who are not so far removed from you—those engaged in similar pursuits but perhaps doing them better. By sharing your creations and establishing connections with them, even directly interacting online, I believe this will be of greater help.

15.

AI Workshop Design

/ / / / / /

Xiao Gang:

A practical question—can we design a series of workshops that enable those interested in AI to easily enter the field?

Simon:

I believe it's possible to create workshops that allow beginners to quickly utilize these tools, but clearly explaining the underlying principles may prove challenging.

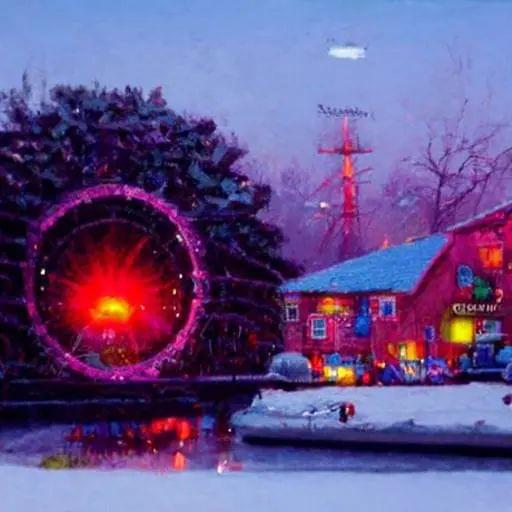

For example, the background image you see now was generated during a parametric training course I attended at Tsinghua University with Professor Xu Weiguo in 2019. The program lasted about seven days, during which my mentor Casey Rehm taught parts of processing and AI. However, he only demonstrated how to get the model running without delving into the principles.

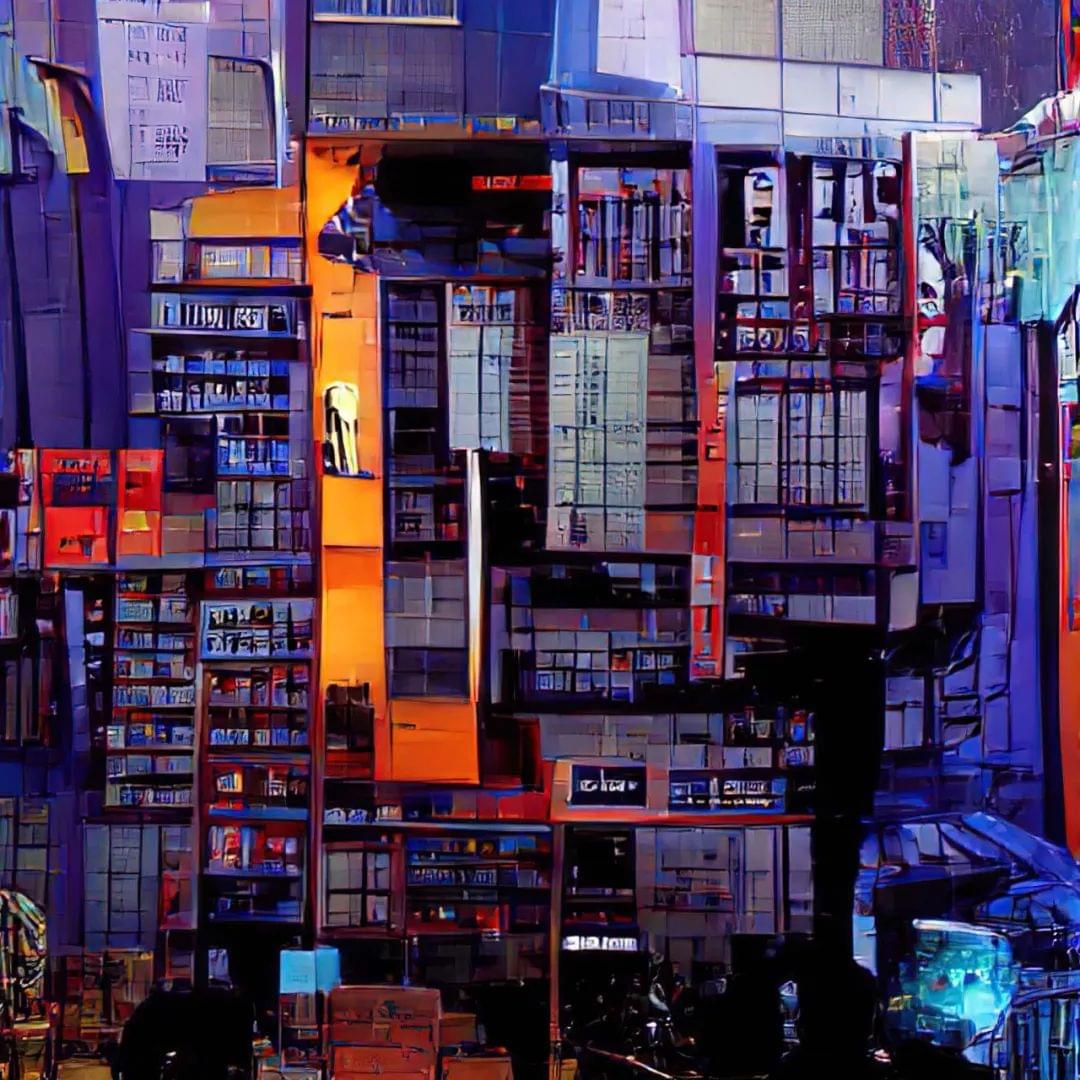

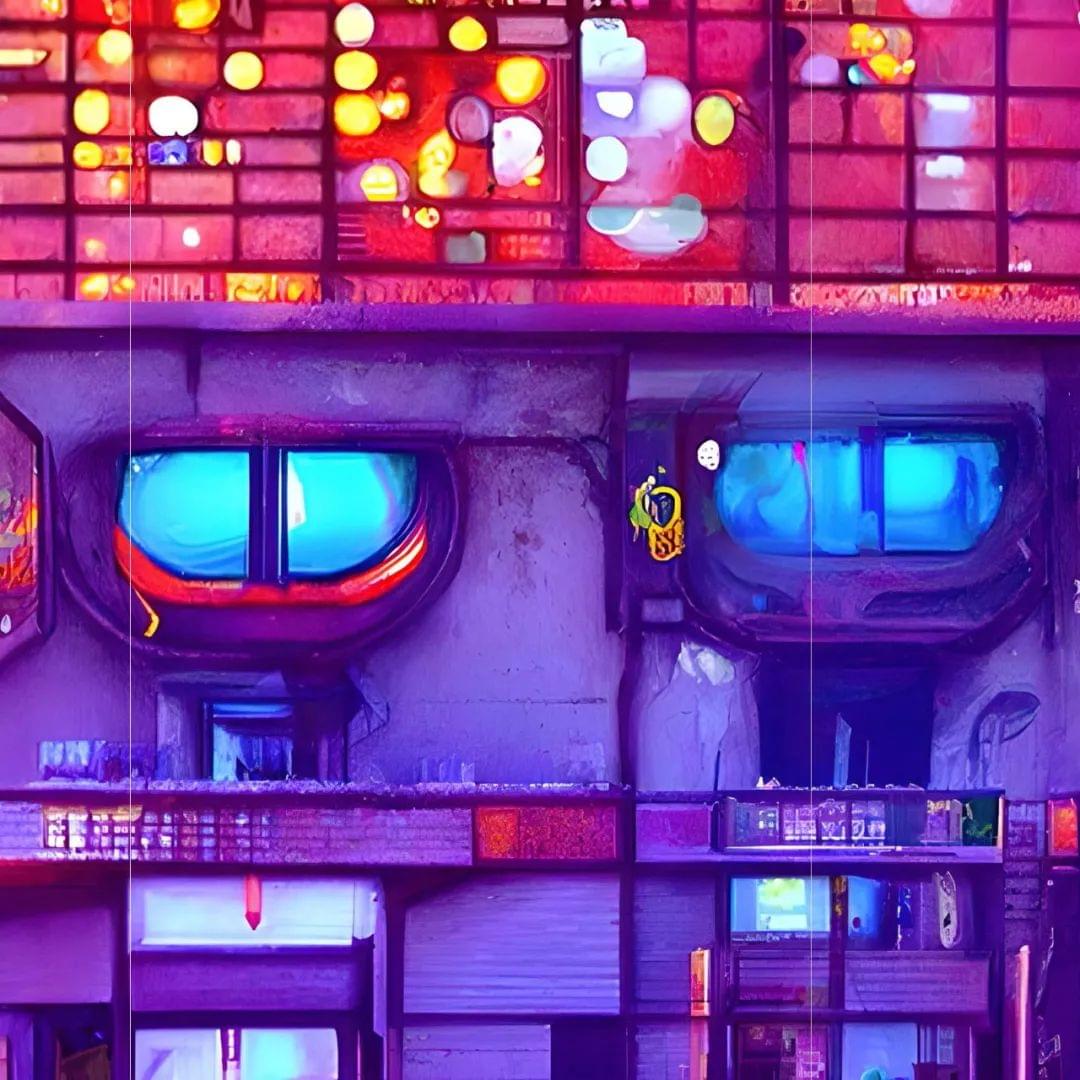

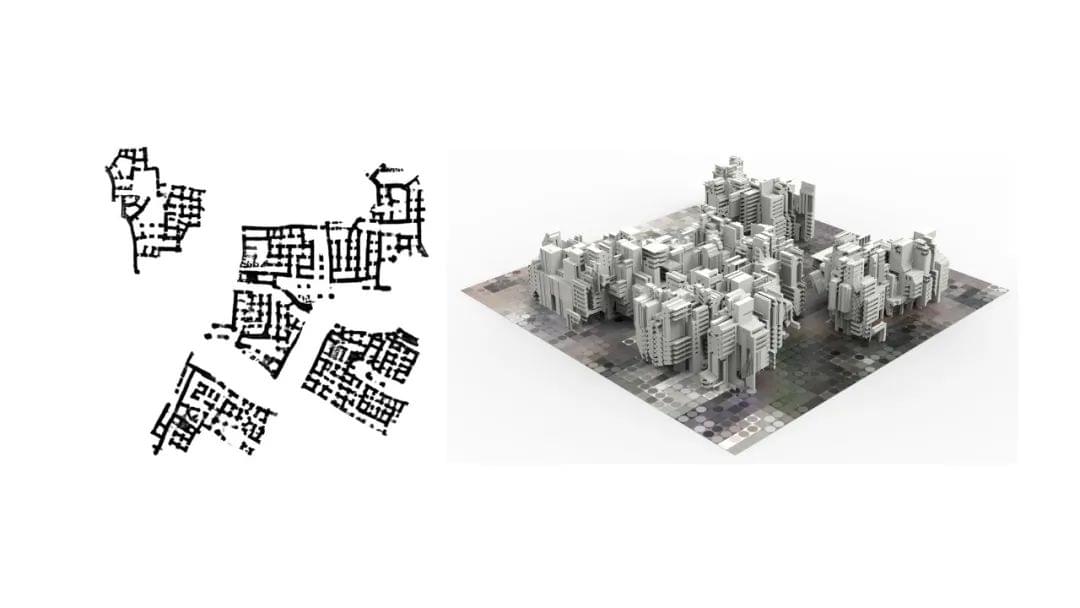

AI-Generated City

Left: Satellite image of desert regions; right: Satellite image of Los Angeles.

Site floor plan—overall plan—building volume—textured model.

By combining image segmentation and splicing based on generative adversarial networks (GAN), high-resolution single images can be transformed.

After the course, I observed that the participants who continued using those programs were those with some foundational knowledge beforehand. Those who lacked that background likely didn’t learn as effectively or simply forgot after some time.

Currently, many tools are accessible, making it relatively easy to operate them. The barrier to entry has indeed decreased. However, the understanding barrier hasn't significantly lowered. While deep learning frameworks have become slightly easier and more excellent tutorials have emerged, the fundamental principles remain unchanged. Regardless of the era, the "Four Great Heavenly Books" of calculus still retain their complexity. However, in the future, it might be that people won’t need to understand so much foundational knowledge to utilize these tools effectively—this trend is slowly emerging.

I think it’s worth trying; you can approach it as a black box. If you lack the time to learn thoroughly, intuitively using it may lead to discovering your own path. I haven’t personally pursued that route, but I believe it’s feasible.

16.

Hardware Requirements: Not High

/ / / / / /

Xiao Gang:

Let’s discuss this further. If we aim to realize a black box model like the one you provided links to, I’ve found that response times have significantly slowed compared to the videos on Bilibili, likely due to lengthy queues. To achieve a level of complexity with adequate clarity while accommodating numerous users, can this be accomplished on standard home computers, or do we require high-performance cloud devices?

Simon:

It's possible on home computers, but generally a good graphics card is necessary. Simply put, a card in the RTX 3070 or 3080 class is needed, preferably a variant with 12GB of video memory. However, such a node might only support one user at a time, so costs can still be high.

Actually, the web-based model I showed you earlier is open source; you can directly retrieve the code along with the front end and run it on your server. As long as you have a properly configured server, you can create multiple nodes for others to use, but again, costs can be high. A single 3080 graphics card can cost thousands of yuan, and it only serves as one node. Therefore, while the costs remain elevated, if you're conducting a workshop for twenty or thirty people, you might only need four or five such nodes to manage the demand effectively without requiring all users to access them simultaneously.
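The workshop sizing above can be checked with a rough back-of-the-envelope estimate. The 15 to 20 percent concurrency figures are assumptions chosen for illustration, not numbers from the interview; the one-user-per-node limit follows the description above.

```python
def nodes_needed(participants, active_percent, users_per_node=1):
    """Estimate GPU nodes for a workshop where only some users generate at once.

    active_percent is the assumed share of participants running a job
    at the same moment (a hypothetical planning figure).
    """
    # Integer ceiling division avoids floating-point rounding surprises.
    concurrent = -(-participants * active_percent // 100)
    return -(-concurrent // users_per_node)

for people, percent in [(20, 20), (25, 20), (30, 15)]:
    print(people, "participants ->", nodes_needed(people, percent), "nodes")
```

Under these assumptions a group of twenty to thirty people lands at four or five nodes, in line with the estimate in the text; a public service with unpredictable load has no such bound, hence the queues.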

However, if you want to make it publicly available for anyone to use, it’s inevitable that it will become like a website on Hugging Face, where users will have to queue for access, which may be unavoidable.

Xiao Gang:

The questions I had have been addressed. Others are free to engage with the three speakers.

17.

Using Different Tools in Architectural Workflows: Grasshopper, Blender, AI...

/ / / / / /

Audience B:

Simon hopes that AI can become an amplifier for architects or designers, enhancing output. I think this is a valid point. Have you envisioned how AI and architects might collaborate in the future? For example, with the current integration of CLIP and VQGAN, where designers input language to guide AI in design, are there other collaborative methods between AI and designers?

Simon:

I haven’t considered it in detail, but your comment reminds me of something. Many people mistakenly view AI creation as simplistic; there are two prevalent misconceptions. One, including many undergraduate students studying parametric design, is that using AI or even simple tools like Grasshopper allows them to create something slightly different from others, leading them to think they are exceptionally skilled, which is certainly a misconception.

The second misconception arises when others learn that your creation involved parametric design or AI; they often conclude, “Isn’t that just using a machine to draw? That’s not your skill.” This too is a misconception. Therefore, I think we need to correct our attitudes: how do architects merge with tools? This isn’t something I or a few others can dictate; it should be a design exploration.

Just as with musical instruments or painting tools, these tools start out without fixed conventions; the conventions are gradually established by artists as they learn to use them. Currently, designers lack both a serious attitude toward examining these new tools and the habit and environment for genuinely investing effort in applying and developing them.

People try them out, find them entertaining, and if they can’t create their desired outcomes, they abandon them. But, for instance, with a pencil, to learn sketching, we practice drawing eggs, apples, and plaster casts for many days before being able to produce a respectable sketch. We dedicate substantial time to mastering the capabilities of a pencil.

The inherent capabilities of AI tools far exceed those of a pencil (though perhaps they are less flexible). If we approached them with a similar level of seriousness, shouldn't they yield even more exciting results?

What I mean is that designers should embrace these tools, and through continuous use, they can either refine them, master them more effectively, or better integrate various tools to create something new. I believe this is a question that designers must spend time answering.

Audience A:

In your opinion, what is the greatest advantage of AI compared to self-driven design? Does AI exhibit distinct aesthetic features, or is the only novelty a result of using AI, which leads to new styles suitable for next-generation digital architecture? I’d like to hear your thoughts because if AI does not yield distinct results compared to previous methods, it might seem redundant.

A follow-up question: since both you and Xiao Gang are fathers, do you perceive AI as a somewhat unintelligent child? It seems less intelligent now because we have over-taught it. Would it perhaps be more suitable for it to focus on a singular task with an approach of deeper reinforcement learning?

Simon:

I think both your questions are easy to address. First, don’t view AI as a singular tool; see it as a toolkit. For instance, some individuals prefer creating by hand, others may prefer direct modeling, while others might start with CAD for drafting. You wouldn’t perceive limitations there, would you? Hand-drawers might sketch with pencils initially, then refine their sketches with markers, and later use watercolors for rendering—some experts even use oil paints; they rely on various tools to tackle different tasks. Similarly, computer users might employ SketchUp for rapid modeling, Rhino for specifics, and BIM for actual construction.

So, AI operates similarly. If you perceive it as bound to a certain style, or feel that its results are not distinctly "AI-like," it may be because your toolkit is still somewhat limited. Once you become familiar with enough AI tools, those constraints should fade. For one thing, I mean AI in the broad sense: not solely deep learning models, since all kinds of algorithmic processes can be classified as AI. If you understand a variety of these algorithms, the continuous emergence of new ones broadens your options, so you no longer feel constrained and can select methods that suit your task. For another, there is no need to worry much; just think of it as another toolkit, akin to software, that expands the boundaries of what you can do.

- For instance, I might model using Grasshopper; later, if I aim for a more streamlined and organic form, I could use Blender. If I need conceptual visuals, I might turn to AI for inspiration with CLIP and VQGAN; when storytelling, I could utilize img2text; and for problem-solving, I could resort to decision trees. If I require certain fixed forms, I can apply fractal algorithms. It’s essentially a large toolkit.

Audience A:

We don’t possess your ability to choose different tools based on content or desired outcomes. While we acknowledge the prowess of these tools, we lack clarity on their practical effects. If it’s a short-term workshop, could you guide students towards building an awareness and capacity for selecting appropriate tools based on specific tasks?

Simon:

- The limitations of tools need to be addressed through your own efforts, whether with other tools or with your own methods.

END

Contact Simon for Collaboration:

Public account, video account, Xiaohongshu, Bilibili, Weibo: Simon's Daydream

All images are copyrighted to the author and may not be used for commercial purposes without permission.

The Supernova Column Group is Continuously Recruiting New Members.

Friends who love interviews and writing are welcome to join!

Recommendations for the "Supernova" column can be sent to the chief editor, Wanlin.

Chief editor: Zhang Wanlin WeChat: bo711WL

Supernova Explosions

Each issue introduces a young scholar possessing intellectual charm, sharp thinking, and creative talent, along with representative works.

- Author: Simon Shengyu Meng

- Link: https://simonsy.net/article/AI-archi-leifeng-en

- License: This article is licensed under CC BY-NC-SA 4.0; please credit the source when reposting.
