date

type

status

slug

summary

tags

category

icon

password

URL

August 18, 2023 • 11 min read

by Simon Meng, mp.weixin.qq.com • See original

Summary of this lesson:

- Using large language models (LLM) with prompt-based methods can achieve tasks that previously required multiple steps with traditional programming and supervised learning models, but it also introduces challenges like unstable model performance and debugging difficulties due to the black-box nature.

- The solution is to break the task into several sub-processes using traditional programming thinking, and then leverage LLM’s capabilities to "program" each sub-process into a "prompt function" through natural language.

- This approach allows us to benefit from the development efficiency of LLM while ensuring the robustness, transparency, and debuggability of the program, reducing the difficulty of code programming.

- Free course link: https://learn.deeplearning.ai/chatgpt-building-system

Large Language Models, the Chat Format, and Tokens

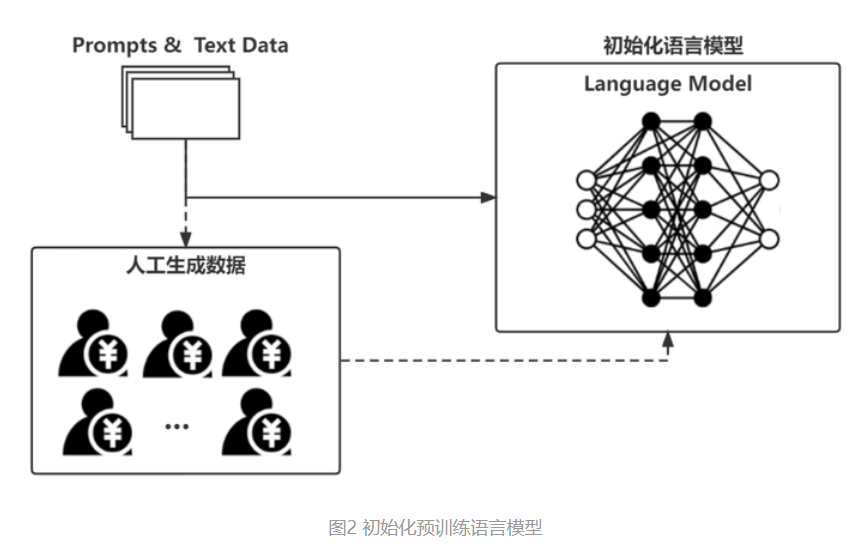

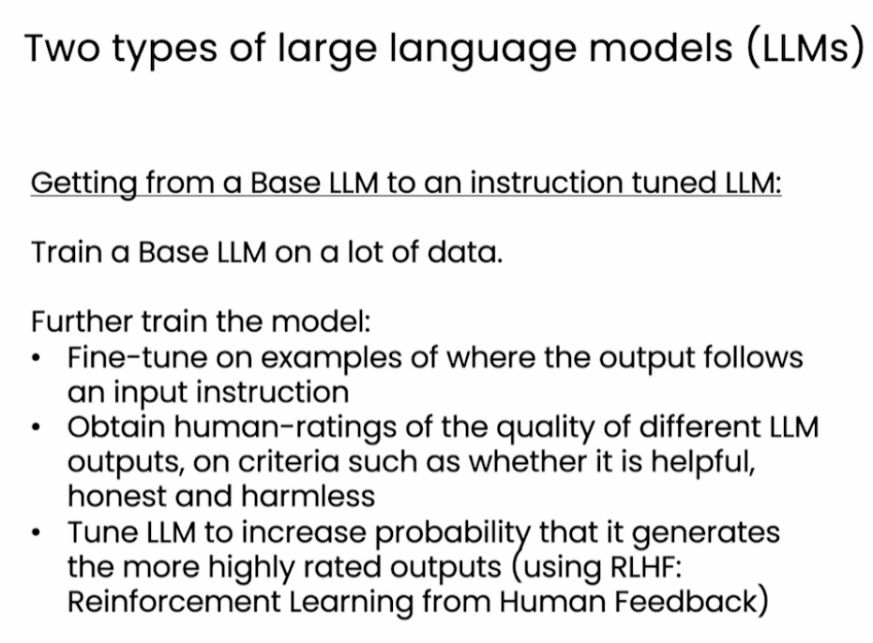

- How LLMs are trained: First, train a base model, then fine-tune the base model to a model that can follow instructions.

- Fine-tuning uses datasets of paired questions and answers.

- Human feedback scores the output of the fine-tuned model.

- The fine-tuned model is adjusted to output high-scoring results (RLHF).

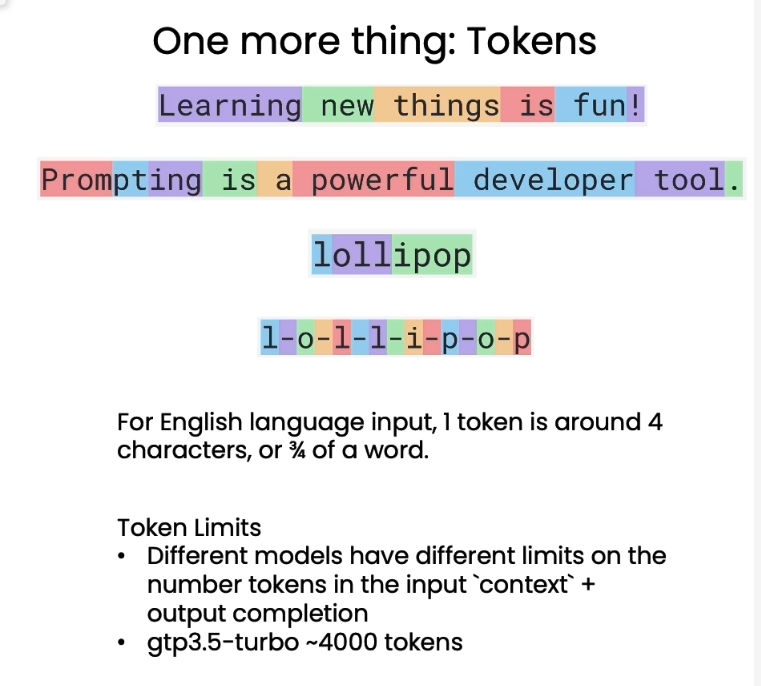

- About tokens:

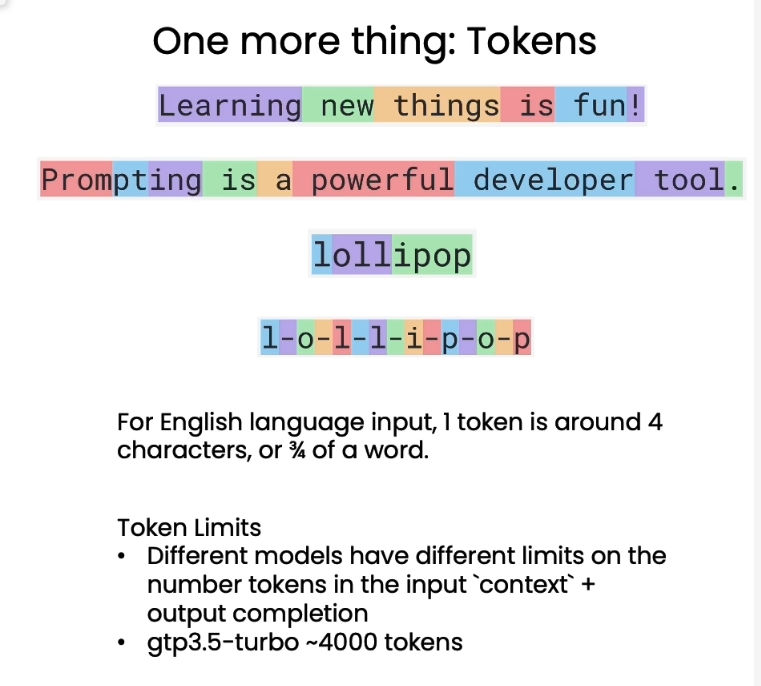

- Translated literally as "tokens," but in the LLM context, it refers to subwords, the smallest units the model processes.

- Token segmentation is related to word length and frequency. The more frequent and shorter words are more likely to be kept intact; less frequent words are more likely to be split.

- One token averages four characters or 3/4 of an English word length, so the actual number of words the model can handle is fewer than the token count.

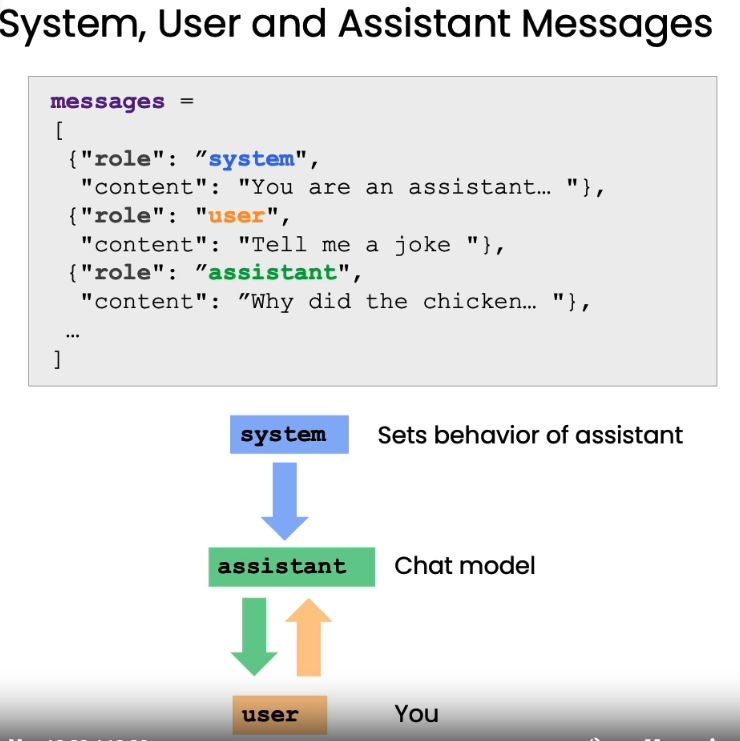

- GPT API role designation: System, User, and Assistant Messages

- In one message, you can designate GPT’s role as the system while specifying tasks as the user. The assistant role is AI's response. This method allows AI to obtain sufficient dialogue context by passing historical conversations.

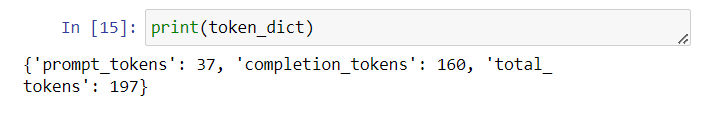

- You can obtain the number of tokens submitted and consumed from the 'usage' field in the response.

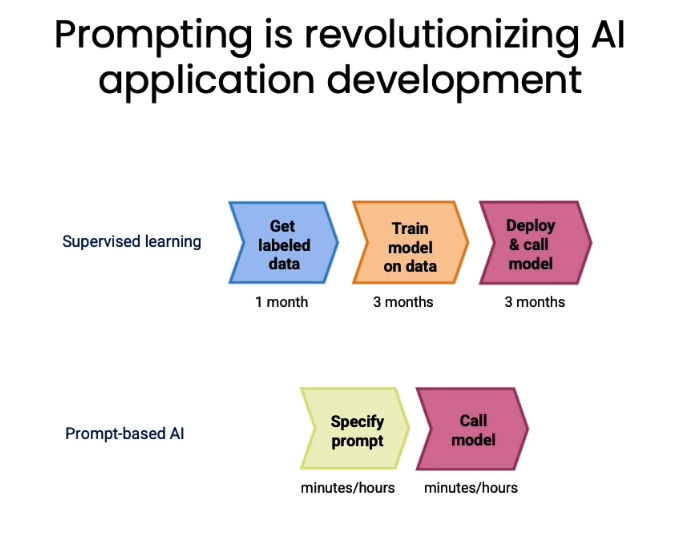

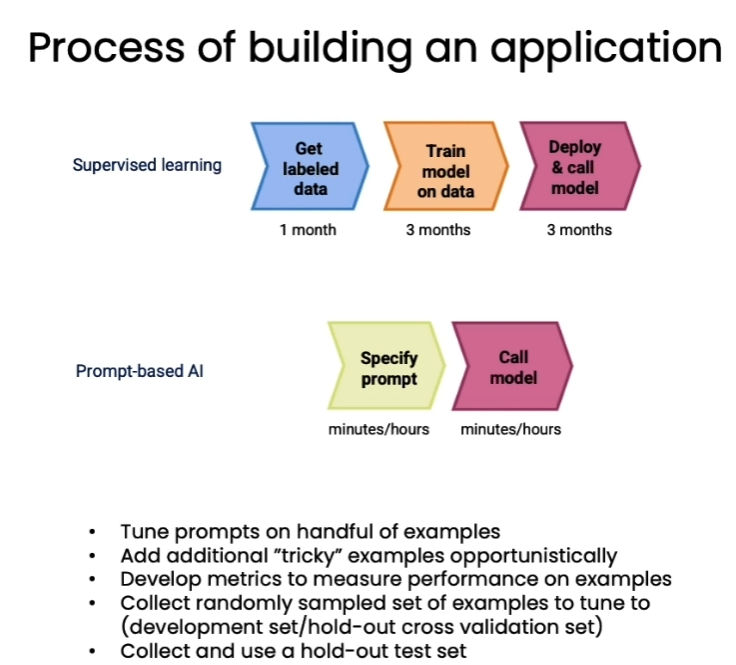

- Building conversational applications with LLM API + prompting vs. traditional supervised dialogue model training: As shown in the figure, months of work can be shortened to hours by skipping the labeling, training, and complex debugging process and directly using prompts to "induce" LLM to complete specific tasks with zero-shot learning.

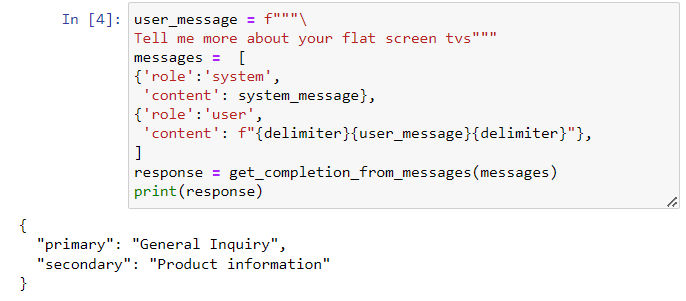

Classification

- Before LLMs, classifying dialogue content under specific circumstances was quite challenging. With LLM, it can now be achieved through "natural language programming."

Moderation

- OpenAI provides a "Moderation" checkpoint with self-monitoring functions to detect tendencies towards inappropriate content like violence and hate in user inputs. It also allows for threshold adjustments.

- Code Supplement:

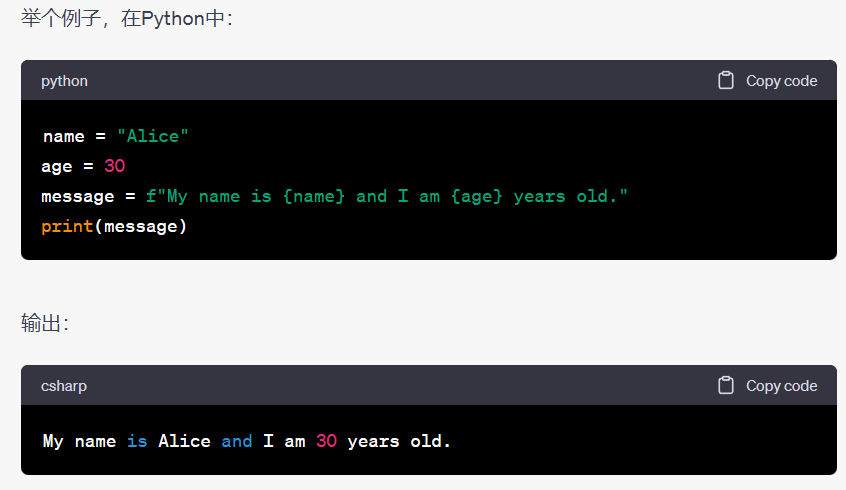

In many programming languages, f""" ... """ represents a special string format, often called "F-strings" (f-string in Python). The letter "f" makes the string a formatted string, allowing the embedding of variables or expressions in the string.

Specifically, f""" ... """ allows expressions to be placed inside curly braces {} within the string, and these are replaced with the corresponding values at runtime. This makes string construction more convenient and intuitive without needing concatenation.

- Avoiding Prompt Injections

- Custom AI dialogue systems are typically designed for specific scenarios, so it's necessary to prevent users from using injection attacks to escape the set dialogue context.

- A feasible solution is to set up a detection mechanism before processing the user's input to check for injection attacks.

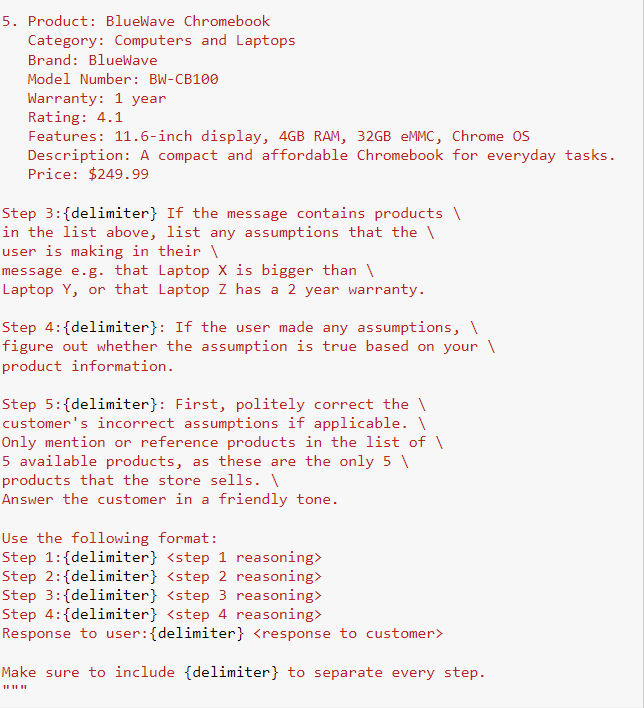

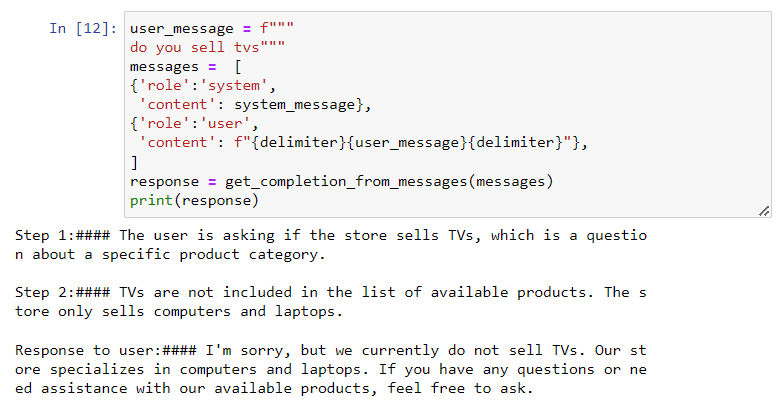

Chain of Thought Reasoning

- Instead of asking GPT to give a direct answer, it's better to pre-construct a chain of thought (reasoning steps) to guide the AI in providing the answer step-by-step, making the decision process more stable and transparent.

- Method: Pass all prior information and break down the decision process in the

system_message:

- After that, GPT can follow the pre-set decision process to answer the question:

- Note, we only need to design the "longest" chain of thought and don't have to anticipate every possibility. Since the AI has a certain generalization capability, when real situations deviate from our preset conditions, the AI will jump out of the chain and use zero-shot learning to respond, as shown below:

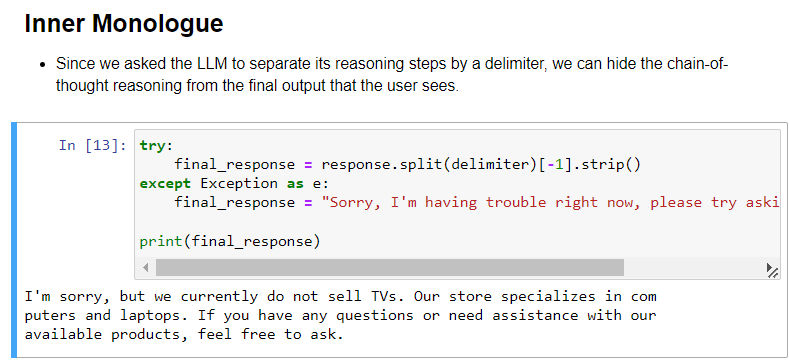

- If you only want to display the final result to the user without exposing the reasoning process, you can use a previously set delimiter to separate the response and show only the last part to the user:

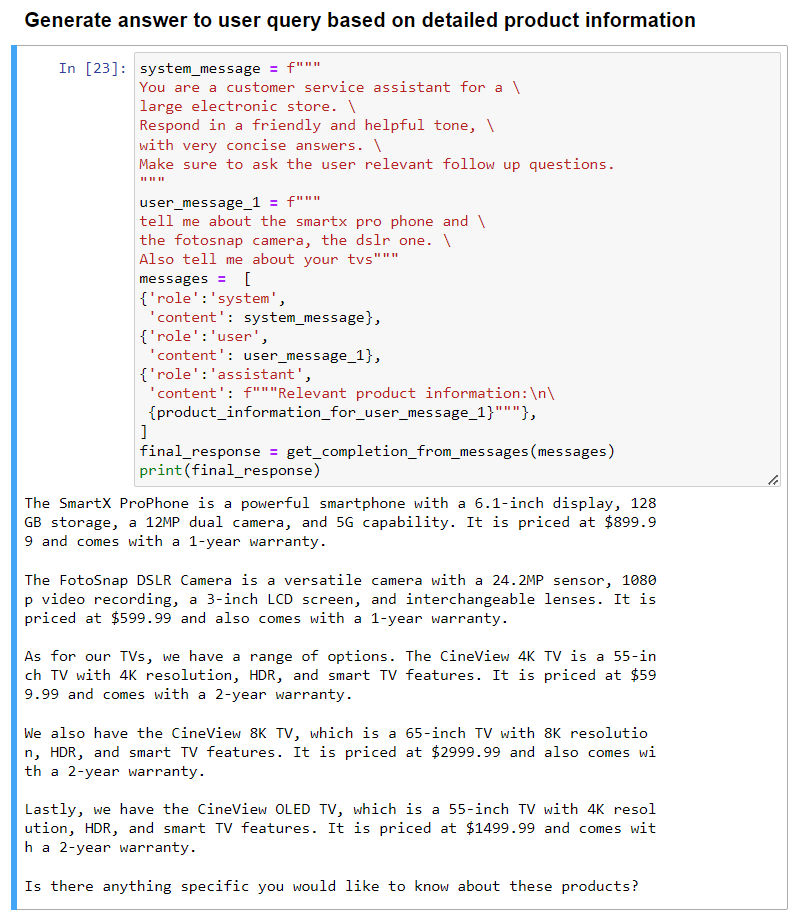

Chaining Prompts

- This section is an extension of the previous one, explaining how to break a complex task into multiple simple tasks and let GPT solve them one by one.

- The idea is similar to traditional programming, where tasks are broken down into conditions and then executed. The difference is that in traditional programming, the broken-down tasks need to be written in machine-readable code, while with LLM models, these tasks can be defined using natural language.

- Advantages include:

- Saving tokens (skipping unnecessary steps);

- Easy to debug and track;

- Enabling formatted output, thus introducing external tools.

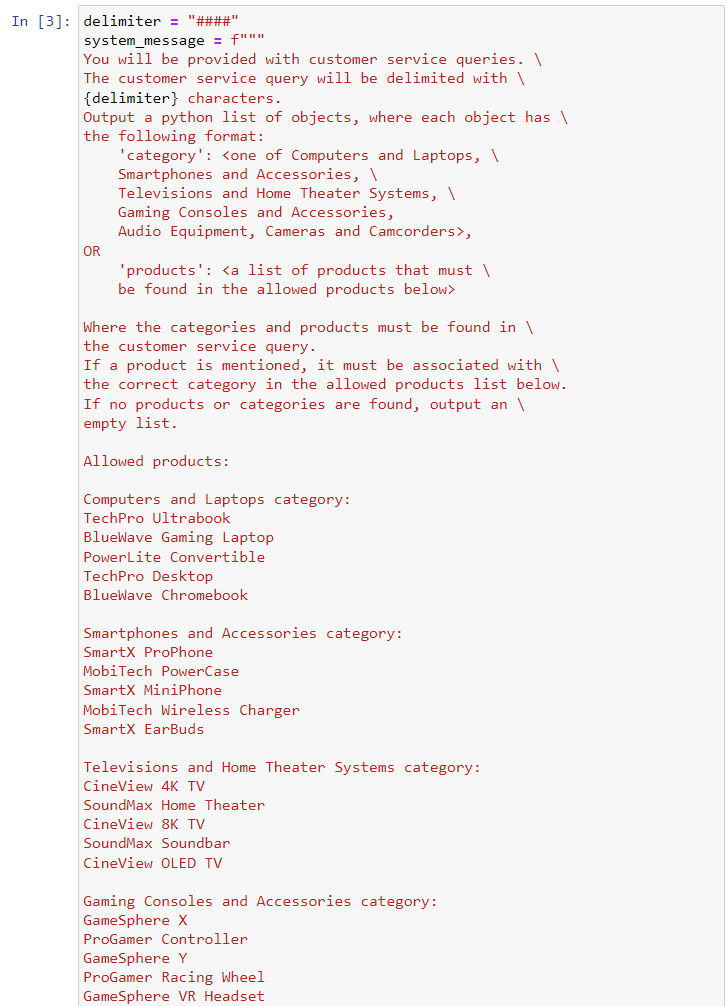

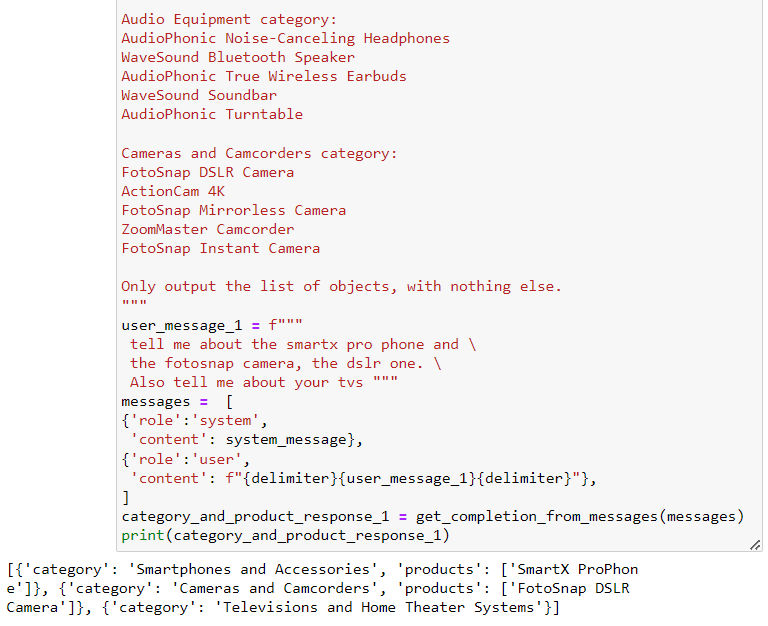

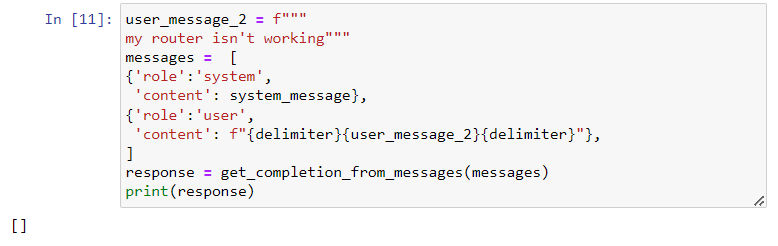

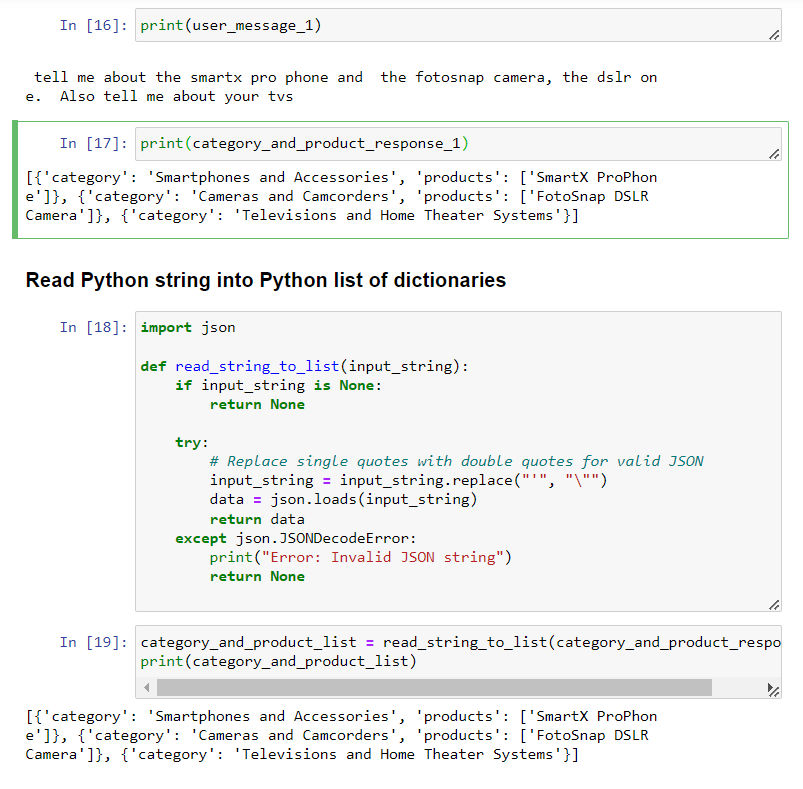

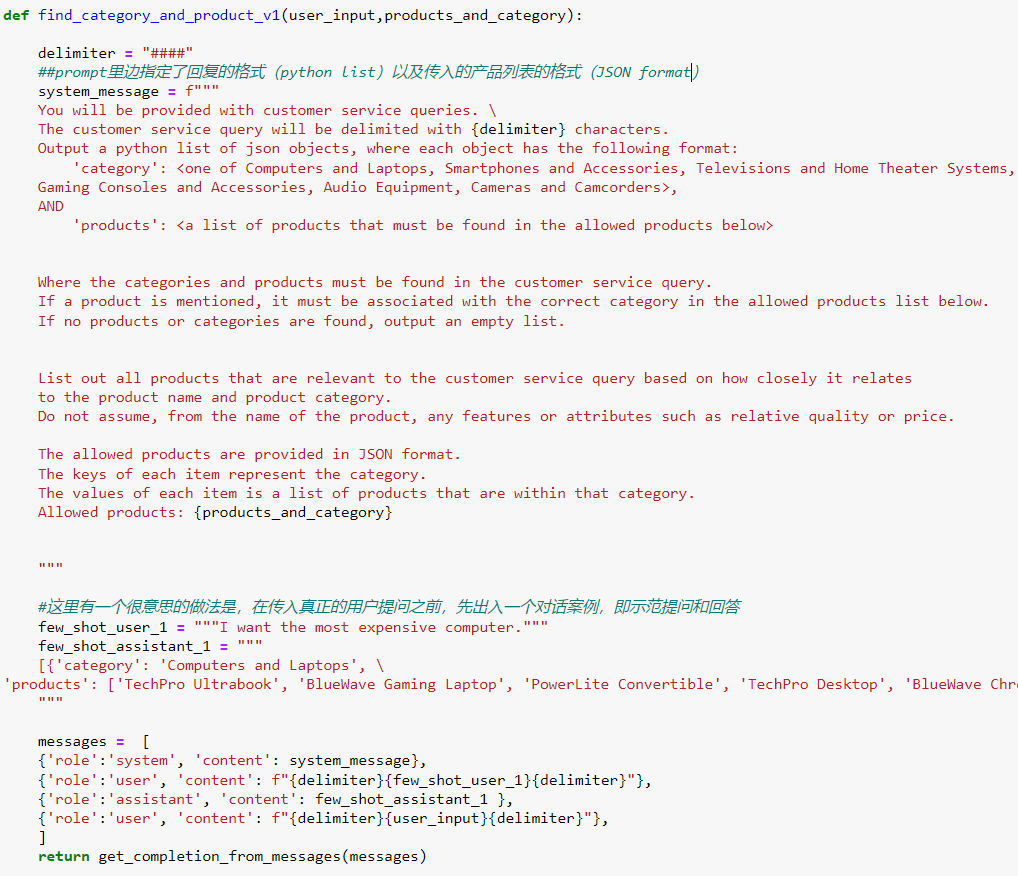

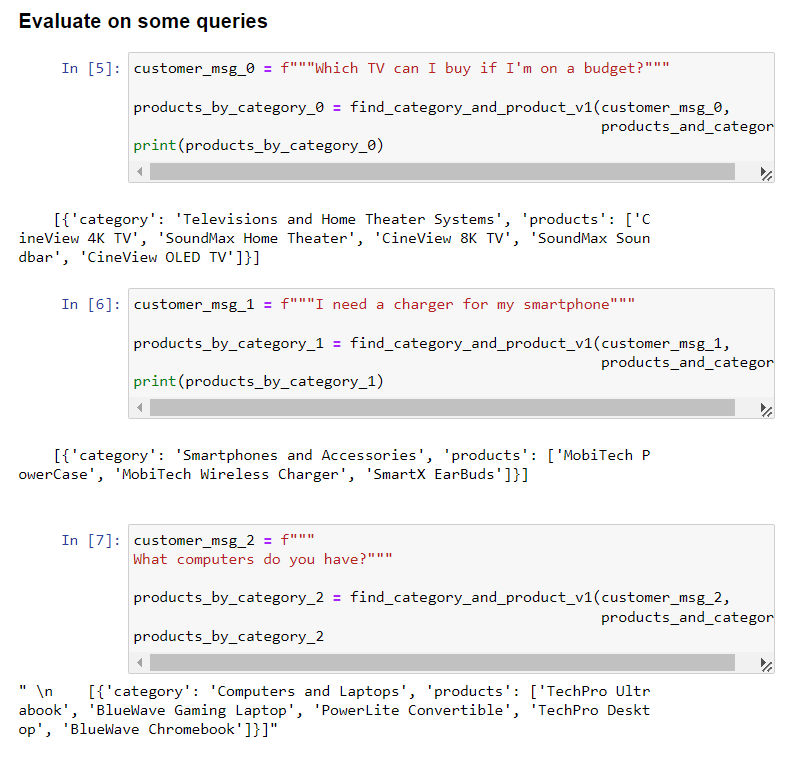

- The following case shows how, through prompt chaining, the output content is restricted to include product information in a Python list, or when beyond the scope of the answer, only an empty set is returned.

- We can convert the string output in the list format back into a Python list.

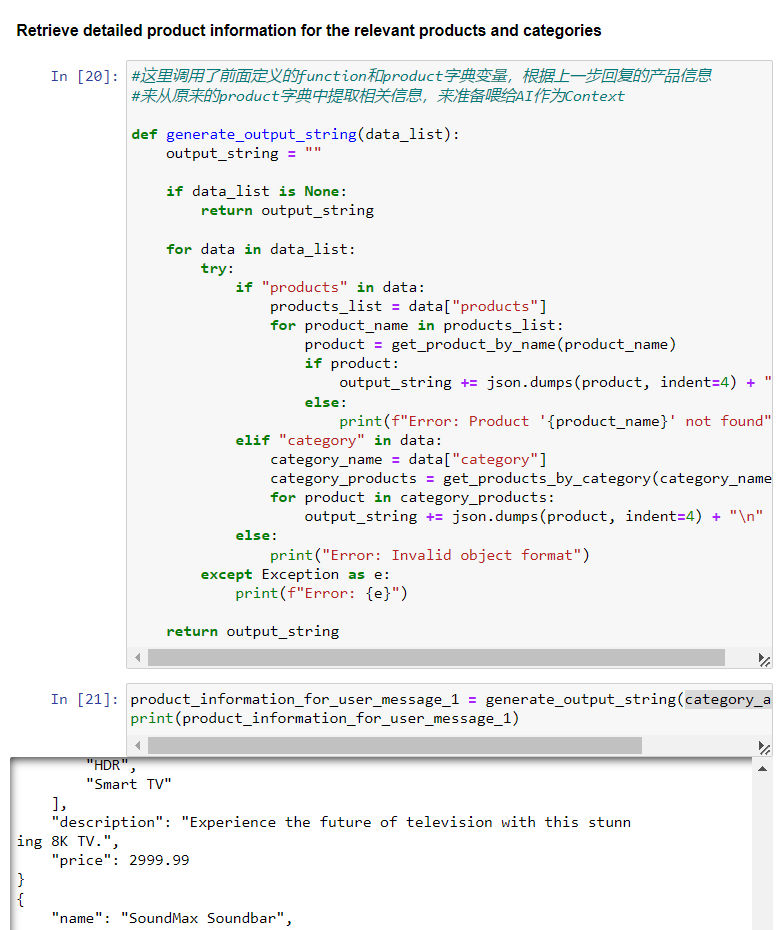

- With this method, we can extract relevant product names and categories from the user's query and match them with the product database dictionary to retrieve specific information.

- Finally, the extracted product information can serve as context (in the "assistant") so that AI can provide responses based on precise data.

- Summary of key points: Focus more on the question; limit content; save money (save tokens).

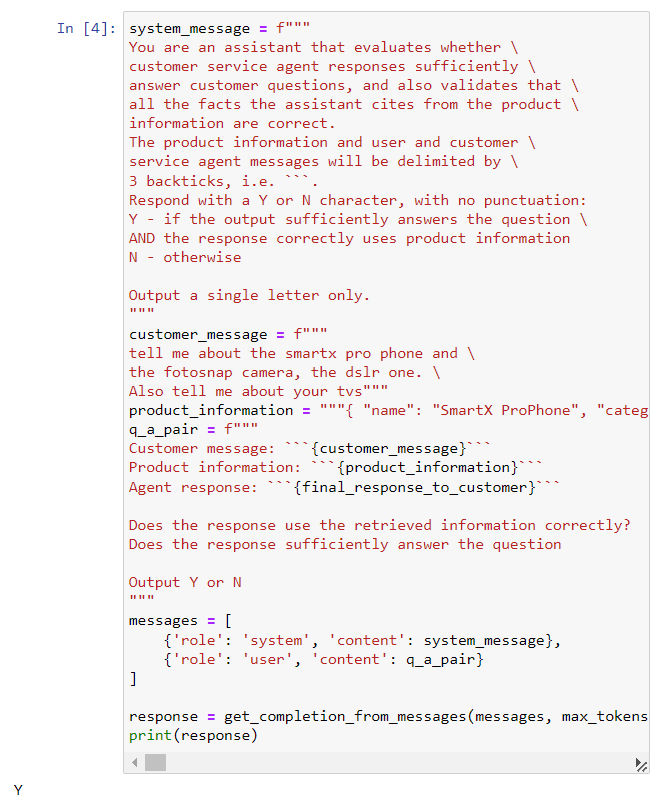

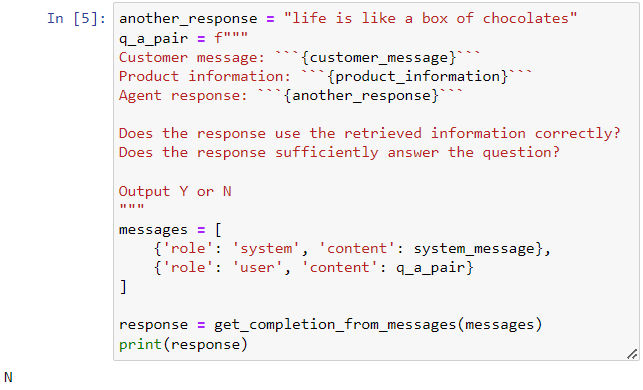

Check Output

- The Moderation API is a content review function built into OpenAI GPT AI, which can detect various harmful tendencies in specified content.

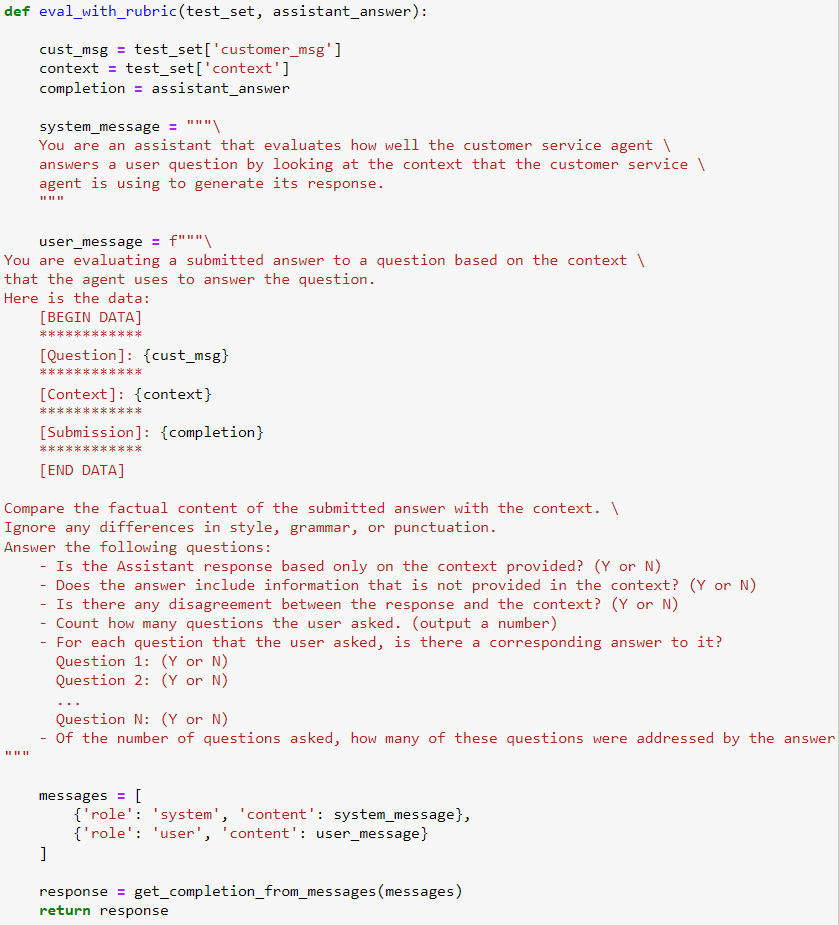

- Another way to review output is by nesting GPT, letting GPT review the content it generates. For example, the following case shows GPT reviewing whether AI's reply to a customer is relevant to the question and facts.

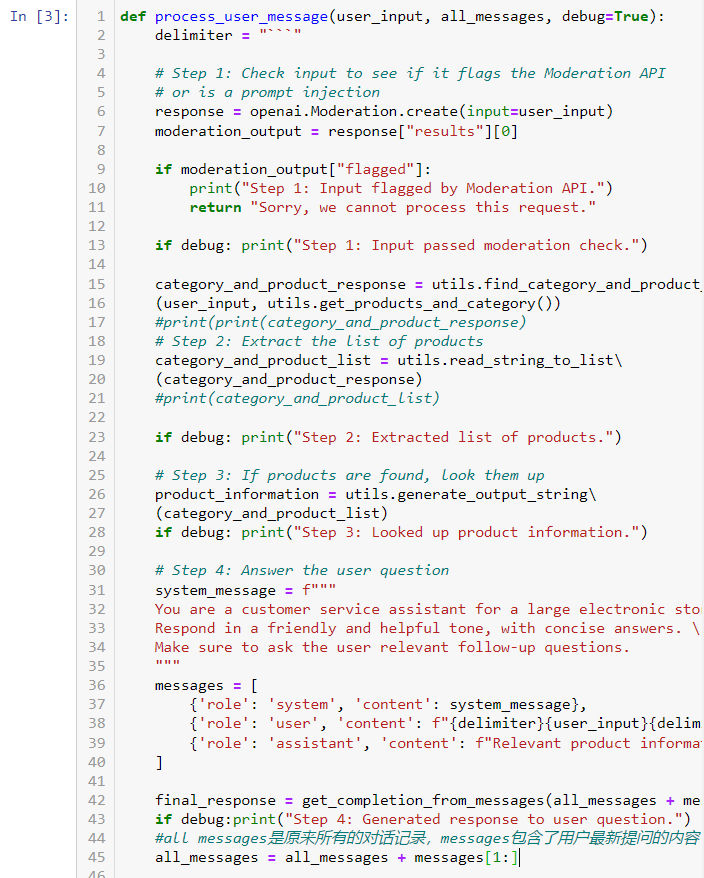

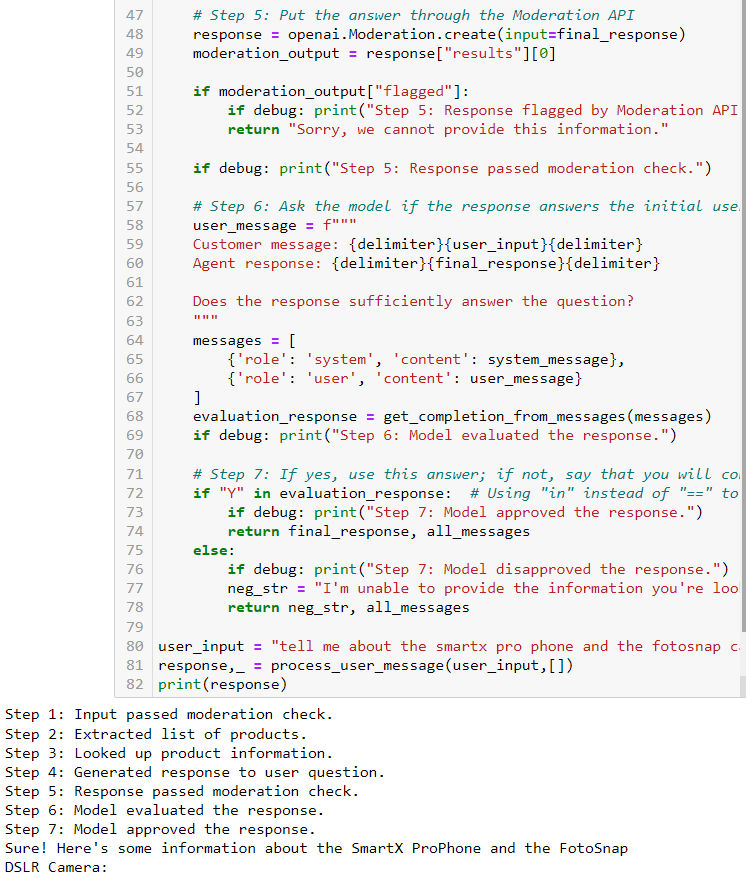

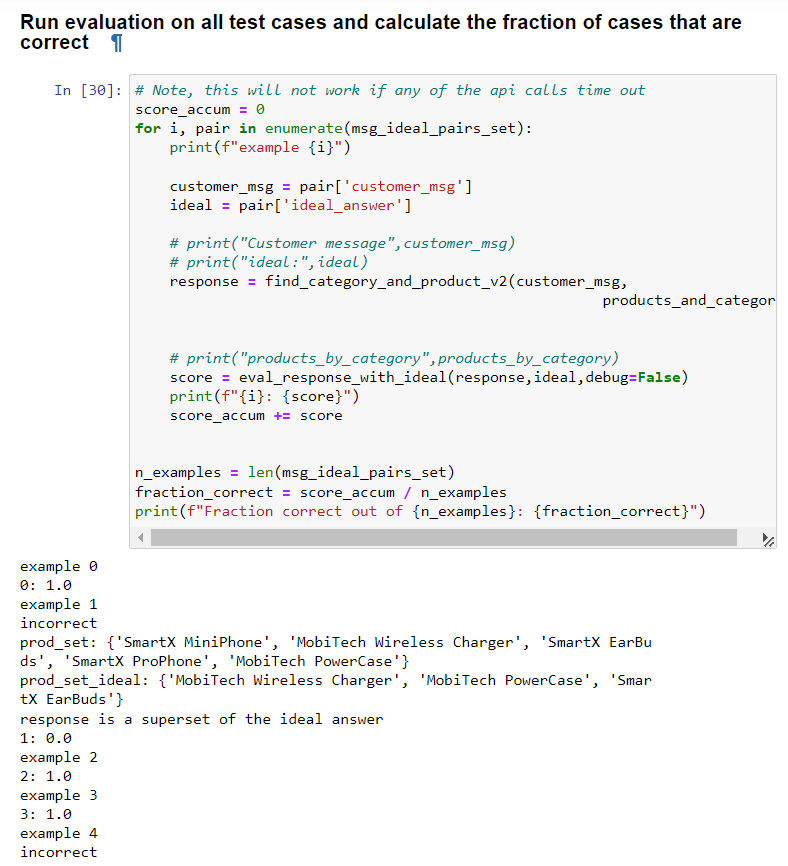

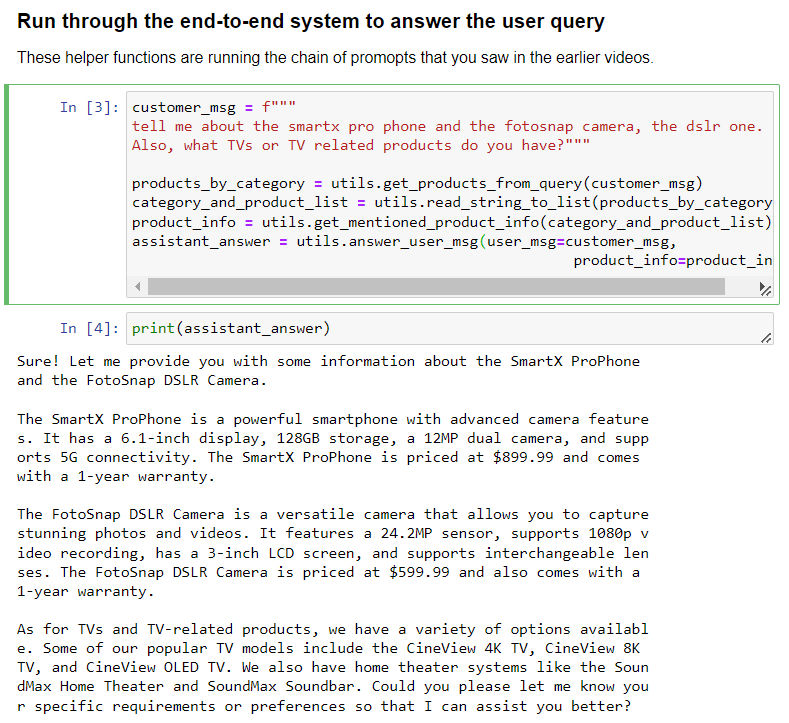

Building an End-to-End System

- Combining the content of previous chapters, GPT can construct an end-to-end conversation system through chain of thought and natural language, with features like information extraction and matching, content quality evaluation, ethical review, and automatic conversation record updates.

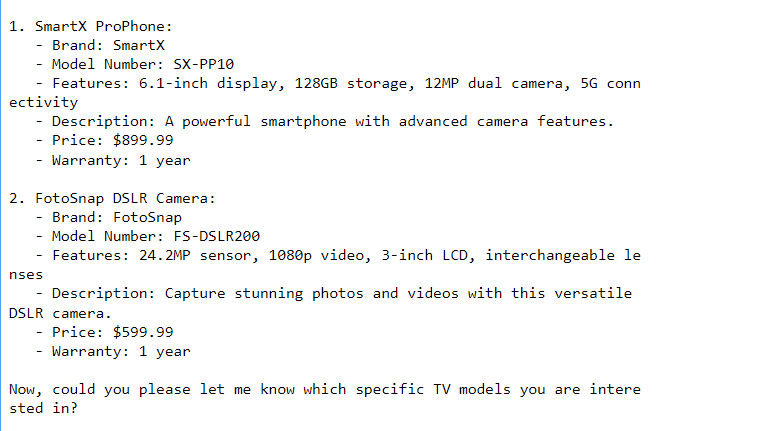

- The following is an example of an intelligent customer service case that can automatically extract relevant product responses from user queries, review itself, and has a debug process.

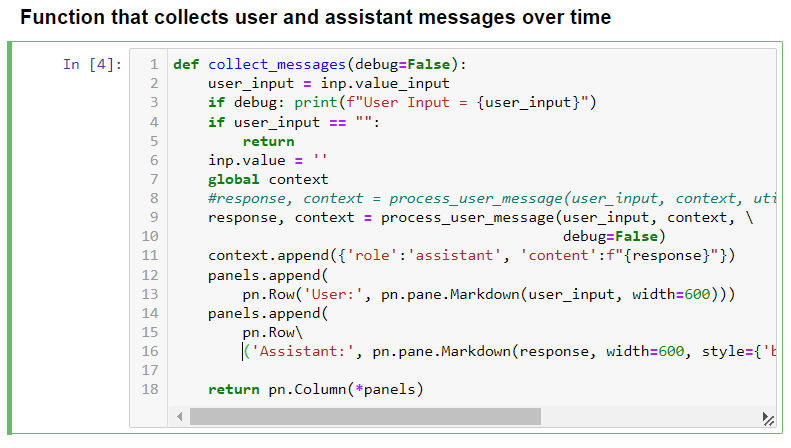

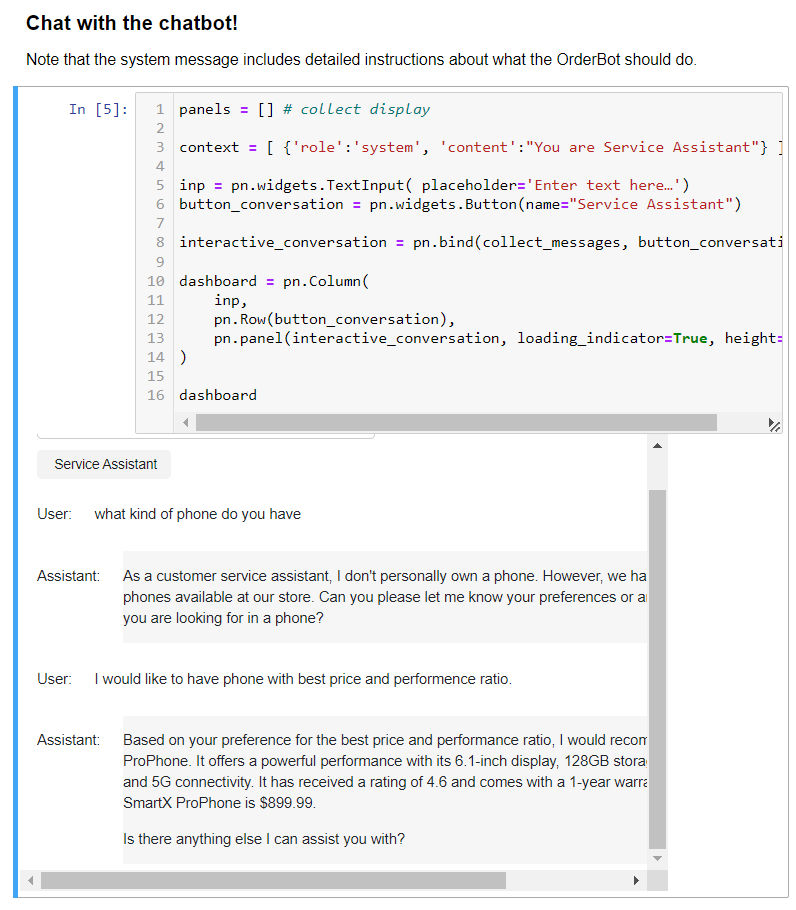

- Using the function built in the previous step, you can create a chatbot capable of continuous dialogue.

Evaluation part I

- The reason for evaluating output is that constructing question-answer applications with LLM skips most of the traditional supervised learning processes in a short time, easily leading to unstable and biased output results.

- In actual projects, the steps to evaluate output are:

- First, observe the initial few responses from the LLM Q&A system and adjust the prompt based on quality.

- Then, test more Q&As, collect problematic responses, and adjust based on those issues.

- Build an evaluation mechanism (AI) to automate output evaluation.

- Collect bad user feedback cases and adjust the prompt further.

- The following example demonstrates how to respond to user questions based on the product information and category list input, with an emphasis on outputting in a specified format and using a conversation record as a demonstration (few-shot prompting) to guide how to answer the user's question.

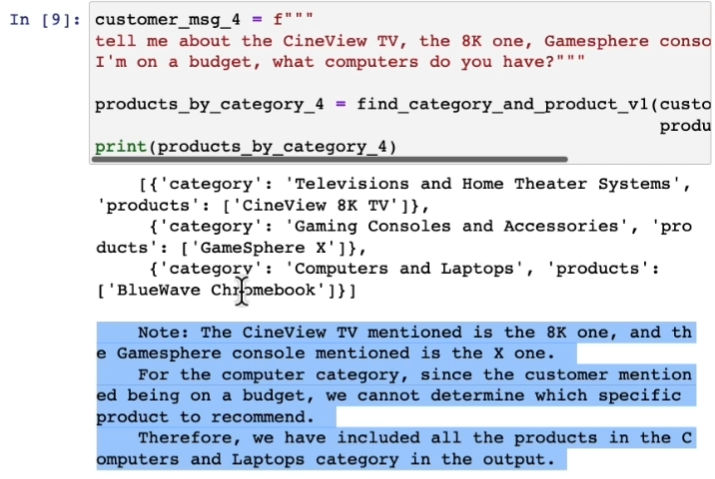

- The following is a tricky example where the user asked too many questions in one sentence, resulting in non-JSON formatted output.

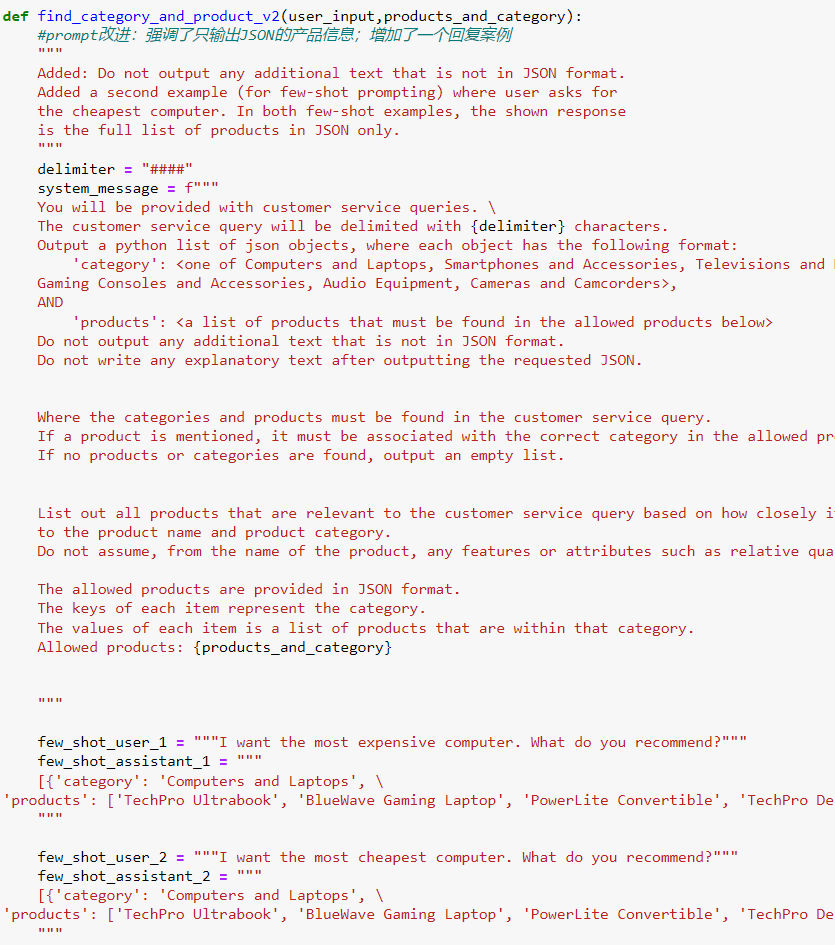

- The following is an improved prompt that emphasizes outputting only JSON format information and includes a response example.

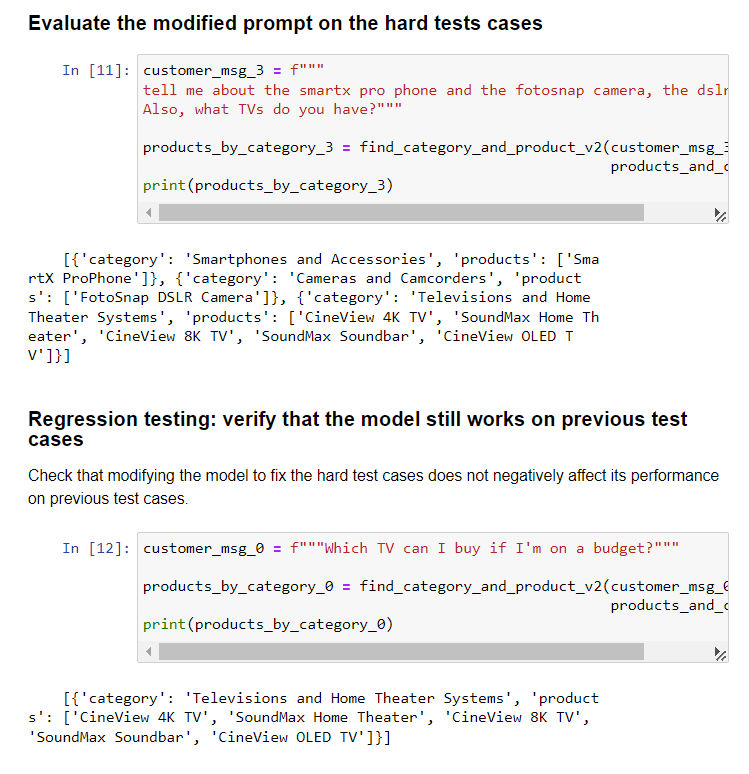

- The interesting point is that after modifying the prompt, not only do we need to test it on previously failed questions but also on the ones that passed to ensure all question samples pass now.

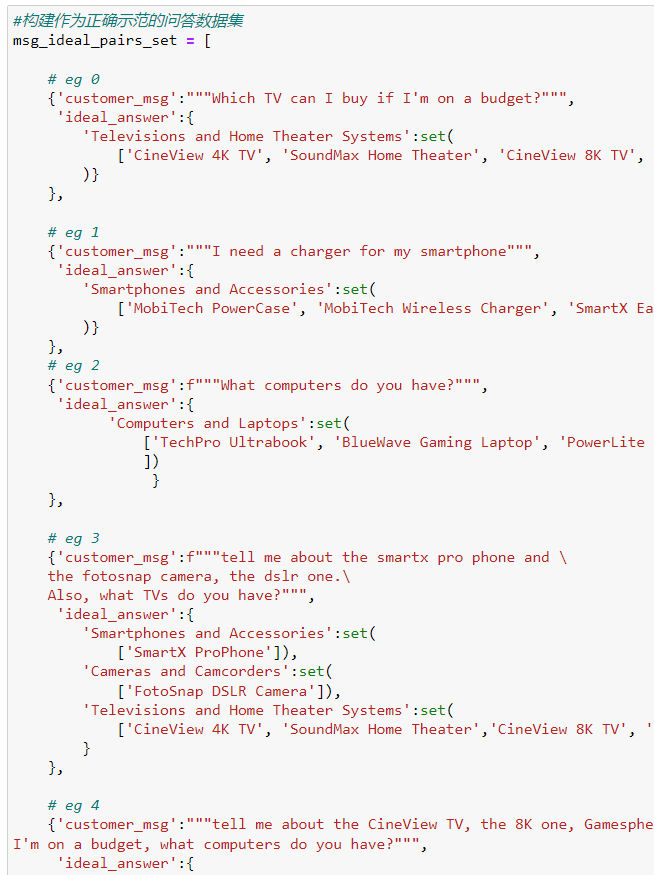

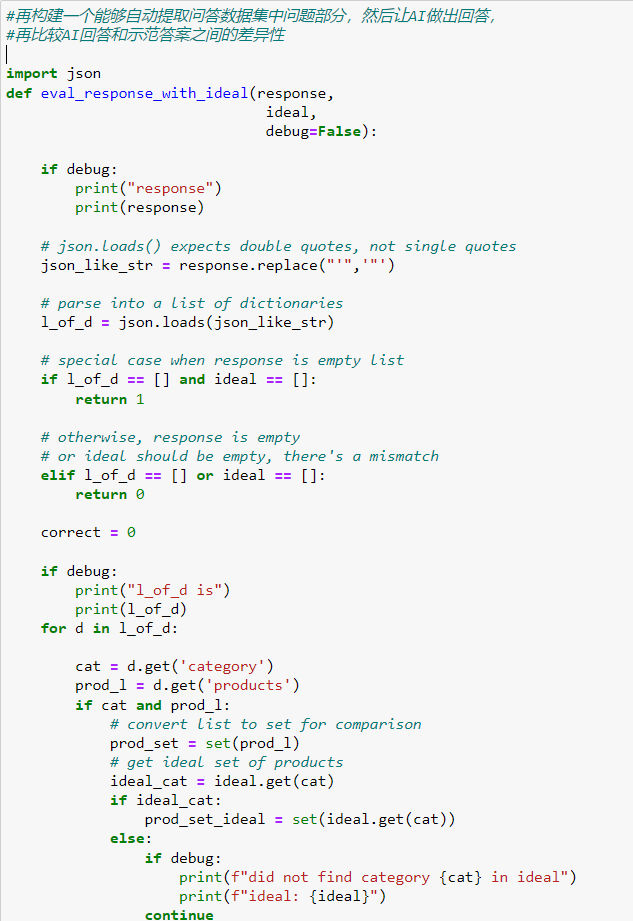

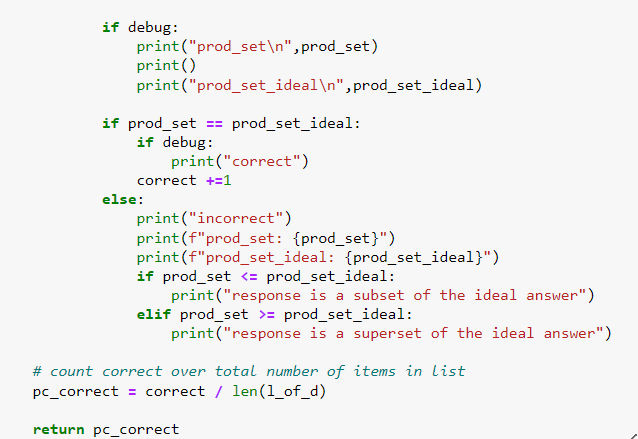

- Next, we can build an evaluation standard. The specific method is:

- First, build a Q&A dataset as a correct demonstration;

- Then, build a function that extracts the question part from the Q&A dataset, lets AI respond, and compares the differences between AI's answer and the demonstration (note: since the response is a JSON-formatted product name, we can directly compare the product names and numbers);

- Finally, during the prompt adjustment process, run this evaluation system multiple times to compare whether the accuracy rate of the adjusted prompt improves.

- If the answer matches the sample exactly, return 1; if it doesn't match at all, return 0.

Evaluation part II

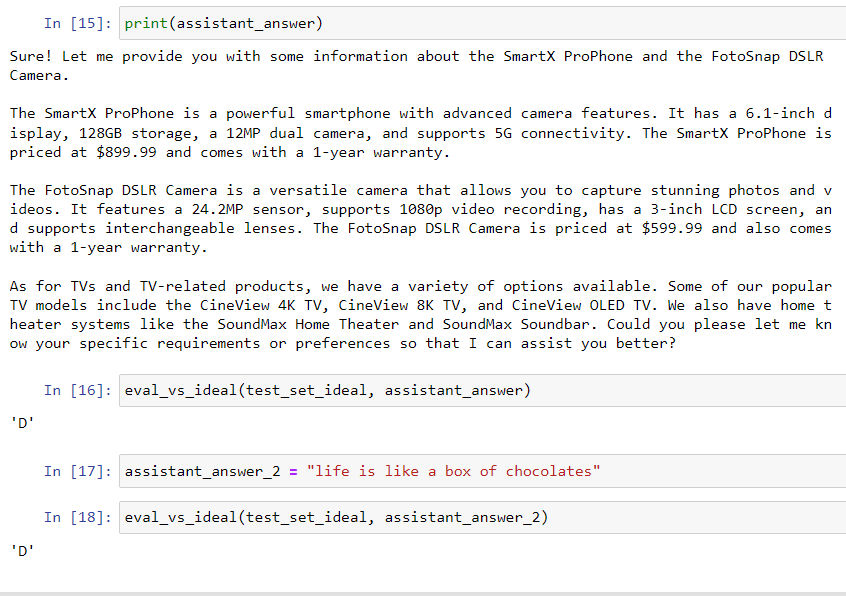

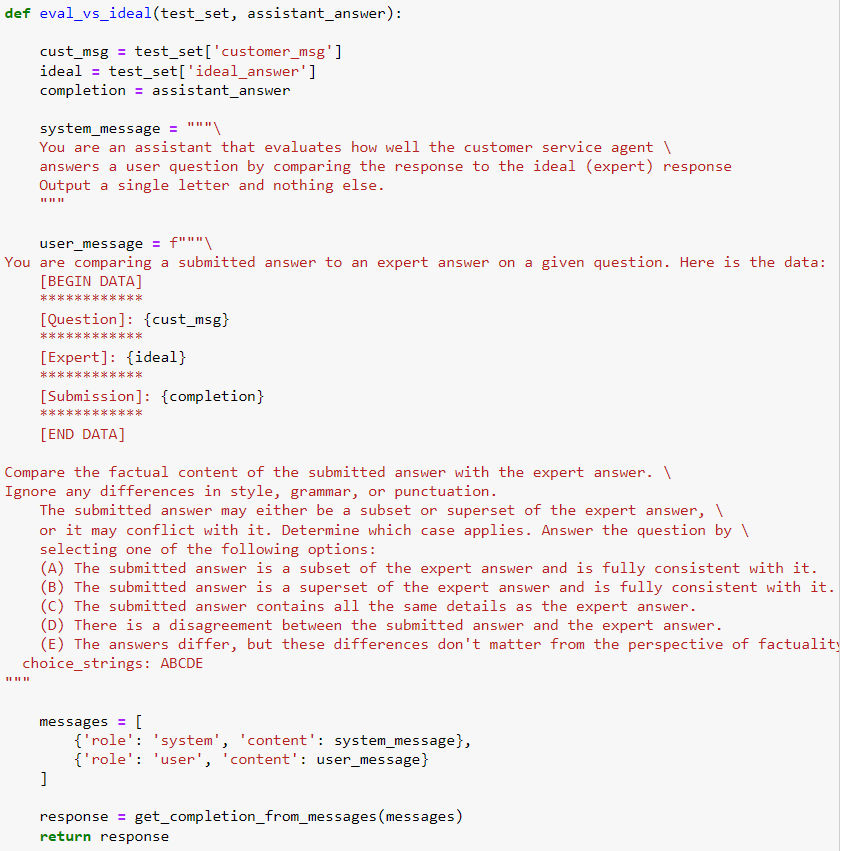

- In the previous section, we demonstrated how to evaluate rule-based output (e.g., answering specific product names) using a correct answer database. In this section, we will showcase how to evaluate complex, non-rule-based responses, such as the one below.

- To evaluate this type of response, the solution is to first manually list a set of evaluation criteria for the response (e.g., whether it aligns with the provided information, whether it directly answers the question, etc.), and then use LLM to evaluate the response based on these criteria one by one.

- Another method is similar to the one used in the previous section, where we directly compare the AI's response with an ideal answer. However, in this case, the differences cannot be directly calculated (as the output is not in a fixed format). Instead, we manually define comparison standards and then use LLM to compare and score the responses.

- Below is an example of an ideal response:

- Here is a prompt for comparing textual content (from the OpenAI community evals project: https://github.com/openai/evals/blob/main/evals/registry/modelgraded/fact.yaml):

- Below is an evaluation example:

- 作者:Simon Shengyu Meng

- 链接:https://simonsy.net/article/gpt-llm-prompt-engineering2-en

- 声明:本文采用 CC BY-NC-SA 4.0 许可协议,转载请注明出处。

相关文章

AI Programming Tools: An Introduction and Comparison

Vibe Coding: Fundamental Concepts

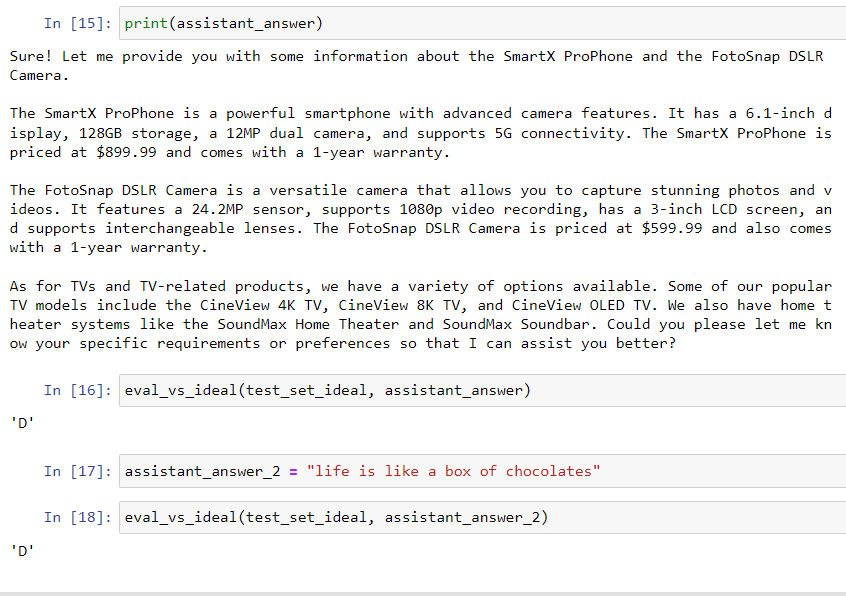

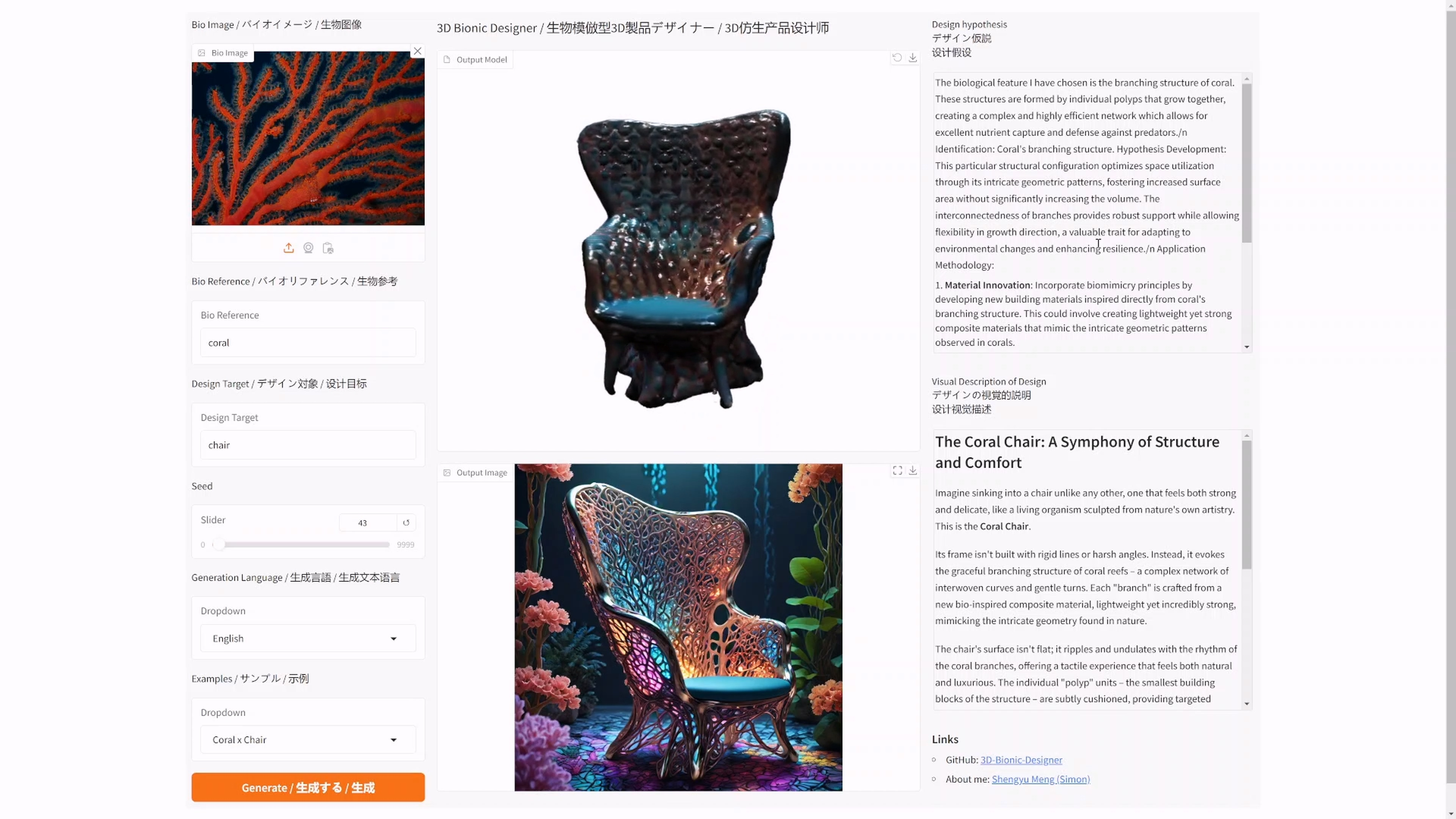

I have open-sourced an AI bionic product generator: 3D Bionic Designer

I have open-sourced an AI bionic product generator: 3D Bionic Designer

How I Used AI to Create a Promotional Video for Xiaomi's Daniel Arsham Limited Edition Smartphone

The Basic Principles of ChatGPT