December 12, 2023 • 3 min read

by Simon Meng, mp.weixin.qq.com

As a programming novice, I have built a portal to parallel space-time: GaussianSpace, a tool that lets users guide the editing of large 3D Gaussian Splatting scenes with text. 🐶

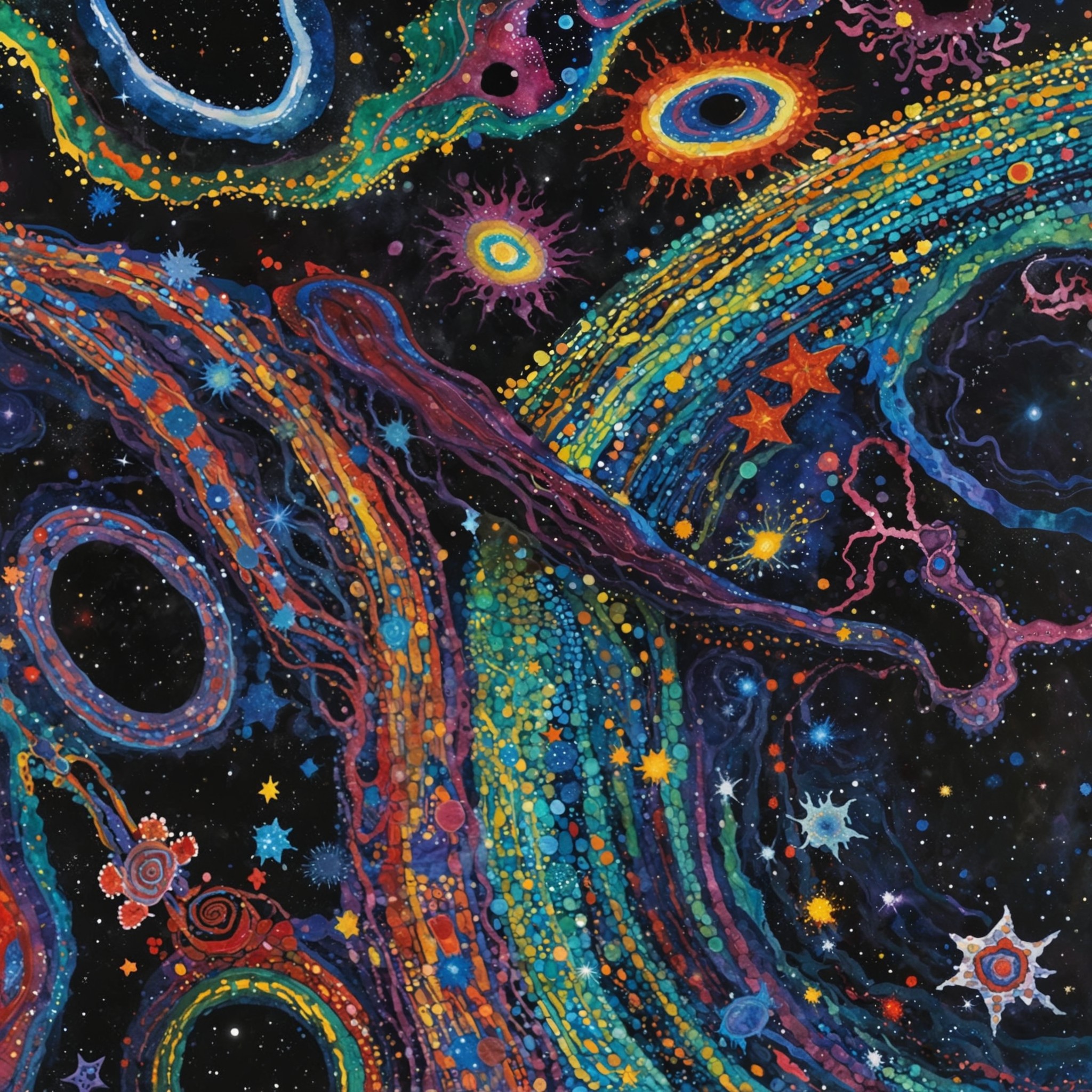

Recently, 3D Gaussian Splatting has achieved an incredible level of detail in the 3D reconstruction of real scenes. As a former architect, I could easily imagine that adding text guidance for scene-wide modifications would let us create parallel worlds 😮! I didn't want to reinvent the wheel, but after some exploring I found that existing methods for text-guided editing of 3D Gaussians are mostly based on InstructPix2Pix, which only allows for local editing 😂.

So I had no choice but to tackle it myself 🧐. Starting from the original 3D Gaussian Splatting loss function, I added a score distillation sampling (SDS) loss driven by a 2D Stable Diffusion model, and introduced an Automatic Weighted Loss method to balance the SDS loss against the real-image loss. This keeps the overall loss decreasing steadily over the iterations, so the edited Gaussian scene retains its original structural characteristics while responding to the text guidance, ultimately achieving a successful transition to the parallel space-time! 🥹
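To make the balancing idea concrete, here is a minimal sketch in plain Python. It assumes the uncertainty-style formulation that is often published under the name Automatic Weighted Loss, where each loss term gets its own learnable parameter; the post does not state which formula GaussianSpace actually uses, and the function names and loss values below are hypothetical placeholders.

```python
import math


def automatic_weighted_loss(losses, params):
    """Combine losses as sum_i 0.5 / p_i^2 * L_i + log(1 + p_i^2).

    ASSUMPTION: this uncertainty-style formula is one common choice,
    not necessarily the one used in GaussianSpace.
    """
    return sum(0.5 / (p * p) * loss + math.log(1.0 + p * p)
               for loss, p in zip(losses, params))


def effective_weights(params):
    """Multiplier applied to each loss term for the current parameters."""
    return [0.5 / (p * p) for p in params]


def param_gradients(losses, params):
    """Analytic d(total)/d(p_i); an autograd framework would normally do this."""
    return [-loss / p ** 3 + 2.0 * p / (1.0 + p * p)
            for loss, p in zip(losses, params)]


# Hypothetical per-iteration values: real-image loss and SDS loss.
image_loss, sds_loss = 0.2, 4.0
params = [1.0, 1.0]  # one learnable parameter per loss term

lr = 0.1
for _ in range(5):
    grads = param_gradients([image_loss, sds_loss], params)
    params = [p - lr * g for p, g in zip(params, grads)]

w_image, w_sds = effective_weights(params)
total = automatic_weighted_loss([image_loss, sds_loss], params)
print(f"image weight={w_image:.3f}, SDS weight={w_sds:.3f}, total={total:.3f}")
```

With these placeholder numbers, the much larger SDS term ends up with the smaller multiplier, so it cannot swamp the real-image term. In actual training the weighting parameters would presumably be optimized together with the Gaussians rather than in a separate loop like this one.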

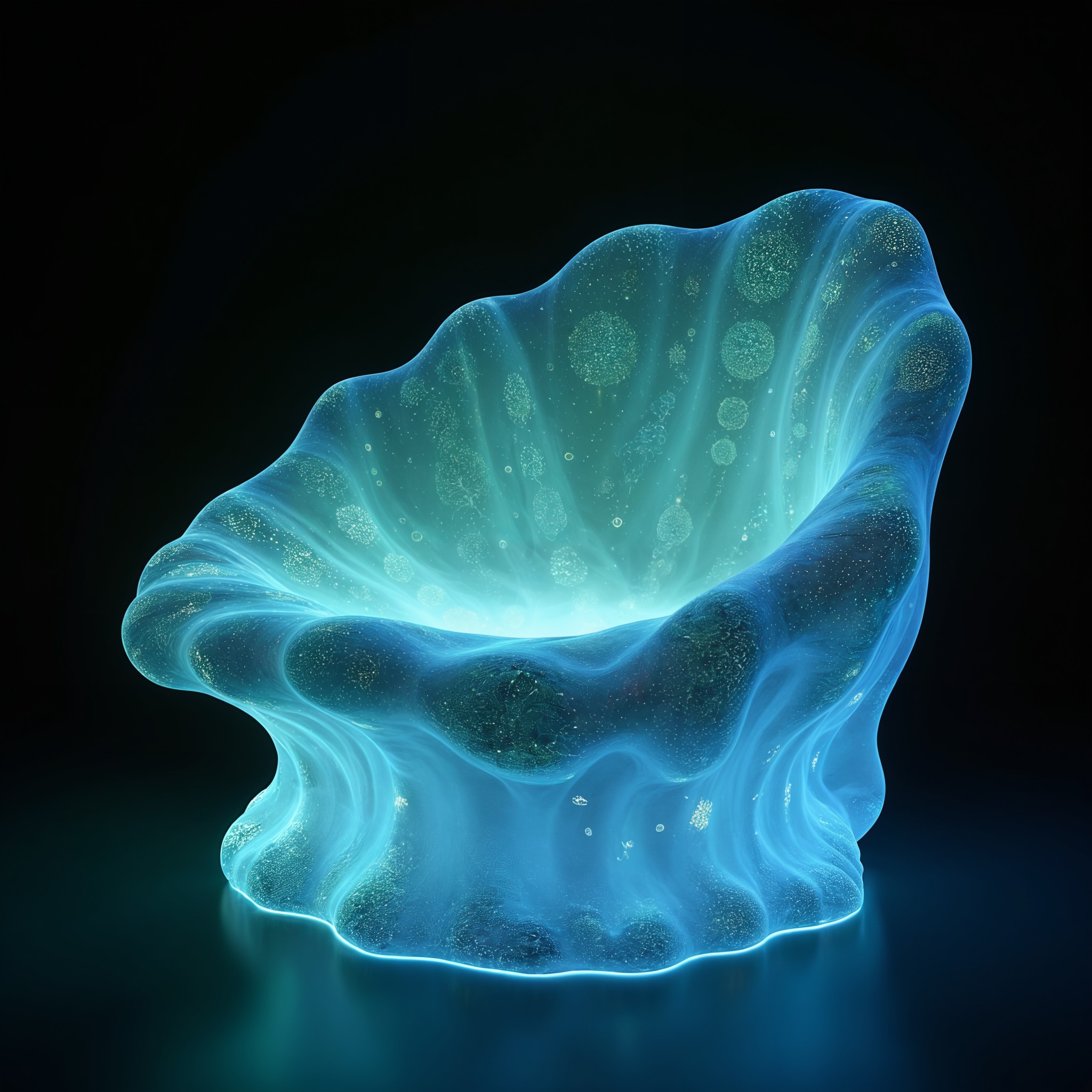

I successfully created three satisfying parallel spaces, migrating the Graz Armory Museum into the Cyber Machine Armory, the Abandoned Biological Exhibition, and the Fantasy Toy House! Note that this is not a video; it is a fully interactive 3D scene (rotate, zoom, pan)! 🫠

➡️ You can interact with the migrated 3D Gaussian parallel spaces at the following URL (please open it in Chrome; note that the web rendering is slightly lower in quality than local rendering): *https://showcase.3dmicrofeel.com/armour_museum-house.html*

➡️ More information can be found on our GitHub project page (requires a VPN): *https://gaussianspace.github.io/*

🤔 PS: This is just a first run of the technical pipeline, and many enhancements have yet to be implemented, so I should be able to improve the quality further. (I initially wanted to polish it more before posting, but the field is moving too fast right now, so I decided to share it first to stake my claim 😂)! I hope to make it available to everyone in some form when the time is right. 🤗

- Author: Simon Shengyu Meng

- Link: https://simonsy.net/article/dreamspace-en

- License: This article is licensed under CC BY-NC-SA 4.0. Please credit the source when reposting.
