date

Dec 31, 2023

type

Post

status

Published

slug

find-peaceful-in-ai-time-en

summary

This is the only non-technical article I've written this year, yet it’s the most important one, as it addresses the most crucial question in the age of AI: how to maintain self-coherence.

tags

News

Interview

Project

Technology

Philosophy

category

Knowledge

December 31, 2023 • 17 min read

By Simon Meng, mp.weixin.qq.com

Preface: This is the only non-technical article I've written this year, yet it’s the most important one, as it addresses the most crucial question in the age of AI: how to maintain self-coherence.

▌01. The Overwhelming Anxiety

As the end of 2023 approaches, many of us are feeling a sense of anxiety. This anxiety stems not only from the end of the last era of growth dividends but also from the dawn of the AI era.

This anxiety peaked for me after the end of the MiraclePlus Demo Day for the Autumn Startup Camp.

▎This anxiety, first and foremost, arises from the direct challenge that Agents (AI-powered intelligent entities) pose to humanity:

At this year’s MiraclePlus Demo Day, 34 of the 67 startup projects were building agents, covering almost everything you can imagine: programming agents, enterprise Q&A agents, agents that drive game NPCs, agents that “revive” deceased loved ones, content creation agents, trending-topic selection agents, and, even more surprisingly, agents that browse the web, watch movies, socialize with other agents, and live “lives” of their own.

These are all things that once defined what it means to be human! Now agents can do them, because they cost less than humans and the results are not much worse. In other words, agents generate less entropy when performing the same tasks. So have LLM-driven agents reached a tipping point where they prove “superior” to humans? Perhaps in the future, an agent is all we will need? Thinking about this, I felt not just anxious but a little frightened.

▎This anxiety also comes from the inability to keep up with the rapid pace of technological iterations:

Right before the demo day, I came up with what I thought was a brilliant idea: large-scale, text-guided content and style transfer for 3D Gaussian Splatting. After the most sleep-deprived stretch I’d had in a decade, I finished the technical pipeline the day before the demo day and released an online demo of the results. On demo day, it attracted quite a bit of attention, and I confidently stated that we were the first team to achieve this. However, three days later, two similar tools were released almost simultaneously, and they even shared the same name, GaussianEditor: one from Huawei and one from SenseTime. Although it later turned out that their editing features focused more on local modifications, the episode still made me marvel at how fierce the competition in technology has become.

Additionally, a year ago I started a paid subscription community on Knowledge Planet and, out of a sense of responsibility to my readers, “forced” myself to follow up every week on all significant AIGC technology updates. Over the past year, the intervals between significant technologies and tools grew shorter and shorter, and I had to spend more and more time on updates. Yet even as I worked hard to keep up the pace, the average number of views per technical update post on my Knowledge Planet dropped significantly, suggesting that even if I could keep up with the updates, my readers could not.

This seems to confirm the prediction physicist Geoffrey West makes in his book Scale: almost all teams, companies, and countries are caught in the trap of the Red Queen Effect. To stay ahead of the competition, each is forced to master the next disruptive innovation, until the interval between major innovations is eventually compressed to nearly zero: the “singularity.”

▎In the face of the coming singularity, the anxiety also arises from the alienation of human life driven by the pace of technology:

During the week I worked with the startup team, I typically slept from 2:30 am to 8:30 am, while my co-founders slept from around 4:30 am to 9:30 am. I thought we were already an absurd team, until I talked to the CEO of another MiraclePlus startup, who casually mentioned that he hadn’t gone to bed before 3 am in years. Even more absurd: despite working around the clock at high performance, my co-founders still had unfinished tasks and couldn’t keep up with technological developments. Although they seemed to enjoy it, I couldn’t help but wonder: is this a form of “domestication” of humans by business, or by technology?

Perhaps we will reach our inner singularity before the technological singularity arrives, as the value of our existence as humans is shaken: first the value of life and emotions, then the value of skills and thoughts.

▎This anxiety is not mine alone; investors feel it too:

I originally thought that perhaps only we entrepreneurs and KOLs riding the AI wave were anxious, while those with capital were watching the fire from across the river, placing their bets at ease.

But after talking to several investors, I found that they are also anxious. They fear making the wrong investments, they fear missing out, but what they fear most is selecting a flawless company after careful consideration, only to see it wiped out by a sudden technological innovation.

Speaking of which, after the demo day nearly 300 investors reached out to us, far too many to screen manually. So I used GPT-4 to build an investor-screening GPT: enter the name of an investment institution and an investor, and it automatically returns a list with the investor’s position, fund size, portfolio cases, and an overall assessment. The thought that investors screening AI projects were themselves being reverse-screened by AI (we did verify the results manually, of course!) struck me as a kind of cyber irony.

▌02. The Inescapable Choice Trap

In response to the various issues raised by technological development, Kevin Kelly once posed a question in the very title of his book What Technology Wants. As I recall, he never answered it in the end, but he did not seem opposed to technology gradually dominating everything, because in The Inevitable he noted that the greatest benefit of technological development is giving people more choices.

Like everything else, more choice has two sides: it is both a blessing and a trap.

▎First is the consumption trap. When making consumption choices (whether spending money or time), the human brain can no longer bear the cost of picking the best option from a vast number of possibilities every time. So we have passively given up our data privacy and autonomy of choice, letting the system pick the “optimal solution” for us, even though that so-called optimal solution is sometimes optimal only from the platform’s perspective, or even from the perspective of an uncontrollable black-box model.

▎Second is the learning trap. History has produced many “polymaths,” such as Leonardo da Vinci, who was an artist, engineer, biologist, and mathematician. Today, human knowledge has grown so vast that even the smartest person may not master its smallest branch in a lifetime, and this tree of knowledge is still growing at a terrifying exponential rate.

▎Finally, the creative trap. With AI’s support, we may feel almost omnipotent in terms of skills, yet forget that when everyone has roughly the same AI capabilities, we may lose our unique advantages, and even our creative drive, as our energy gets scattered. This is a problem I am facing and thinking about myself.

We are not unaware of these traps, but we don’t really have many choices. Our anxiety mirrors that of investors: we fear making the wrong choice, we fear missing out, but what we fear most is that, no matter what we do, we cannot make the optimal choice in the moment.

▌03. The Value of Humans

Eventually, my persistent anxiety came to a head on my second day back in Nanning from Beijing. What comforted me was not a new invitation from a celebrity or news of a successful funding round, but the warm sunlight, the gentle air, the familiar food, and the unhurried people: in other words, the human factors.

This soothing feeling showed me that if we want to keep a stable core in the turbulent AI era, we must discover our irreplaceable value as human beings. So, what is the value of humans in the AI era?

To answer this question, let’s first examine the flaws of AI, which I describe as “having skills but lacking the way.” Here the “way” is not an abstract concept; it refers to how the birth of AI differs from the evolution of carbon-based life in nature: AI lacks environment, motivation, origin, and context. AI is born from data, evolves through training in artificial neural networks, survives as weight information, and grows through file replication. None of this involves AI itself; it is all passively driven by humans. AI does not need to actively do anything to further its own evolution and existence, and therefore it lacks “instinct.” Yet the drive to survive and reproduce is, in fact, the foundation of our brilliant civilization. Of course, some may argue that AI can exhibit emotions similar to humans’, but this is achieved by aligning with human behavior, not through any “intrinsic needs” that AI derives on its own.

This perspective highlights the value of humans. We already know that merely chasing “skills” is doomed to be futile: in an era of rapid technological advancement, chasing the technological frontier is like cutting water with a knife. You obtain only a cross-section of swiftly flowing water, and its significance within the accelerating river of time shrinks toward zero.

Only by making choices from a human perspective and continuously seeking and forging our own “way,” rather than choosing for technical optimization, can we avoid falling into the “choice trap.” The “optimal technology” changes at an ever faster pace, while the value of being human is diverse and relatively constant.

▌04. Seeking the “Way”

So, what is the “way”? In fact, the specific meaning of the “way” varies from person to person (and this is what makes it precious), but I would like to share what feels closest to “seeking the way” for me: I feel less anxious about the AI era, and more valuable as a human, when I do the following things.

▎Find Your Mission

Please remember: Mission > Skills > Knowledge. Strive to find your mission (even if it is temporary); it will let you find your unique leverage in the ocean of AI. Let your inner voice guide you toward certain things, and then find the specific AI tools to do them; do not decide to do something just because you discovered that AI tools can perform a task quickly and cheaply. Hastily adopting that second strategy may get you outpaced within a short time by younger entrepreneurs using newer technologies.

▎Keep Improving Yourself: Be a Craftsman in the AI Era

AI makes complex information far less scarce, but all AI training is based on datasets, and every industry has secrets known only to a few. Therefore, continuously improving your professional abilities and finding exclusive insights will become your moat, supplying the information entropy that falls within the biases and blind spots of large models. At the same time, remember that truth often lies with the minority.

▎Discover the Most Specific Application Scenarios in a Multimodal Environment

Currently, most AI is unimodal (large language models, for example), and AI is indeed incapable of complete multimodality. Even a model that could simultaneously align text, images, point clouds, and audio would still only handle formats that are themselves excerpts and compressions of the real world’s full information, not the world itself. However, “the divine is present on site”: only by immersing ourselves in a world full of human experience can we understand how to deliver the scarcest emotional value to the public from every angle. As mentioned earlier, AI is water without a source and a reed without roots, and it may never be able to “actively” care for people.

▎Further Focus on Emotional Value

Among the 67 projects at this year’s MiraclePlus Demo Day, apart from those my friends and I were involved in, the one that impressed me most was a project using AI to “revive” the deceased. Not because their technology was particularly strong, but because what they aimed to do carried unique emotional value.

I suddenly realized that I am actually tired of the “cost reduction and efficiency gains” narrative. How fast is fast enough? How cheap is cheap enough? How much material is enough? Does an era that keeps accelerating to the limit really bring happiness? Isn’t the ultimate purpose of all this to deliver emotional value? So why not focus on emotions directly from the start? Or can we find things that have universal value for humanity and can only be done because of AI, rather than things AI merely lets us do faster?

Once again, it’s worth emphasizing: AI can help provide emotional value, but only humans can be its original source.

▎Get Broadly Involved and Become a Super Individual

“Screws continue to be replaced by AI, and individuals with architecture and integration capabilities are more valued.” This means we need to actively cut down on repetitive work, take on creative and unique tasks, make connections quickly, and keep seeking small “qualitative changes.”

AI is indeed more inclined toward quantitative change than qualitative change, because training large models is not easy: it involves designing and validating network architectures, cleaning data, building training hardware infrastructure, tuning hyperparameters, and more. As a result, AI’s “speed” is the speed of running in the same lane, not the speed of switching lanes quickly.

In contrast, the human brain is more flexible; we are born weary of monotony. With just a little more courage, and by leveraging AI, this may be the best era yet for everyone to innovate.

▌05. Conclusion

Looking back on this moment a few years from now, people will find that if it is not the last golden age of human civilization, it is bound to be the starting point of a new era. As Dickens wrote at the beginning of “A Tale of Two Cities”: “It was the best of times, it was the worst of times; it was the age of wisdom, it was the age of foolishness; it was the epoch of belief, it was the epoch of incredulity; it was the season of Light, it was the season of Darkness; it was the spring of hope, it was the winter of despair; we had everything before us, we had nothing before us; we were all going direct to Heaven, we were all going direct the other way.”

References:

Illustrations: GPT-4 + DALL·E 3

“Scale” by Geoffrey West

“What Technology Wants,” “Out of Control” by Kevin Kelly

MiraclePlus 2023 Autumn Roadshow: 67 projects, 51 related to large models

“The Singularity is Near: When Humans Transcend Biology,” Jizhi Club

- Author: Simon Shengyu Meng

- Link: https://simonsy.net/article/find-peaceful-in-ai-time-en

- Notice: This article is licensed under CC BY-NC-SA 4.0. Please credit the source when reposting.

相关文章

Worldline Overload {Choose to Patch |or| Forced Reboot}

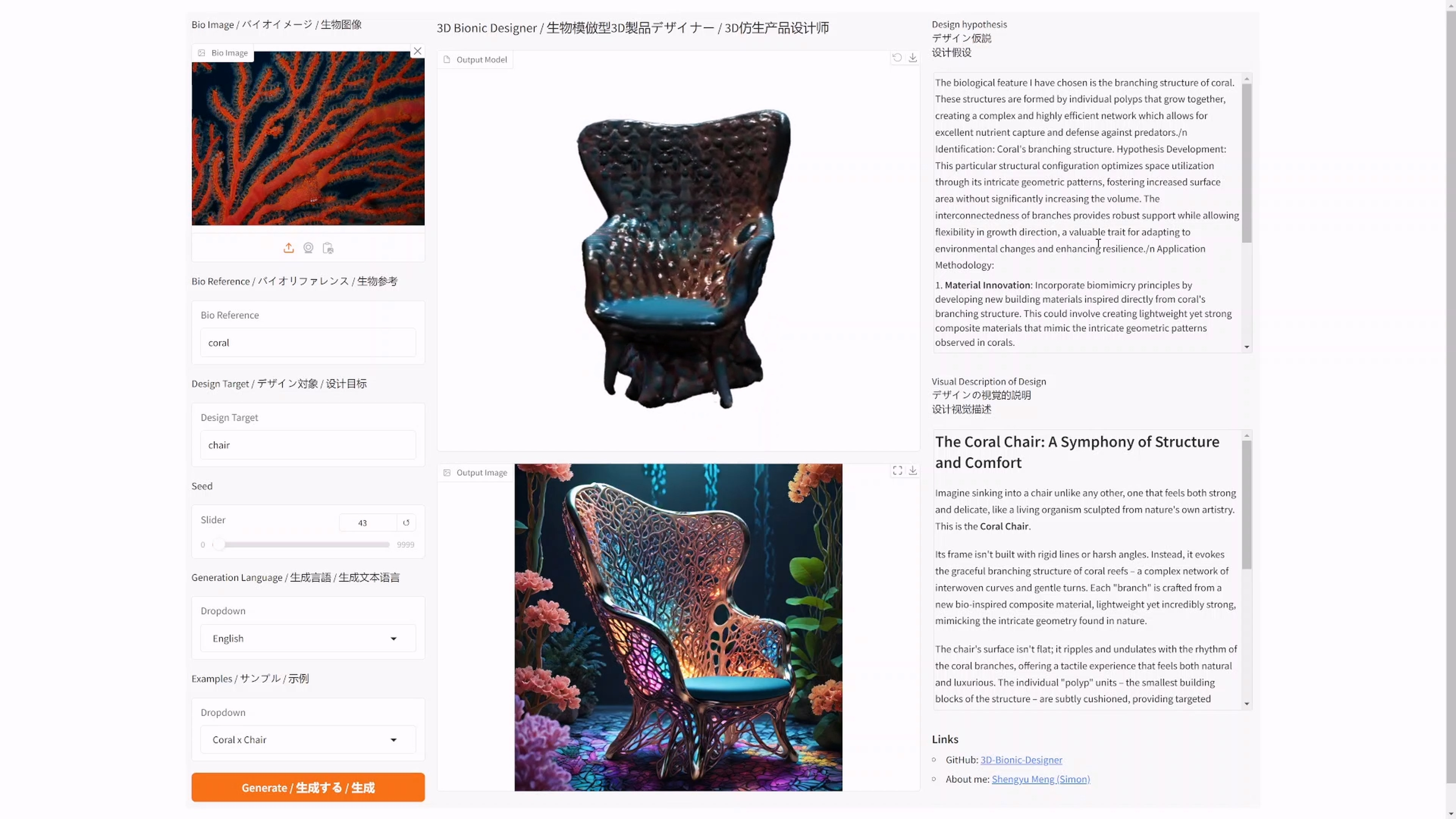

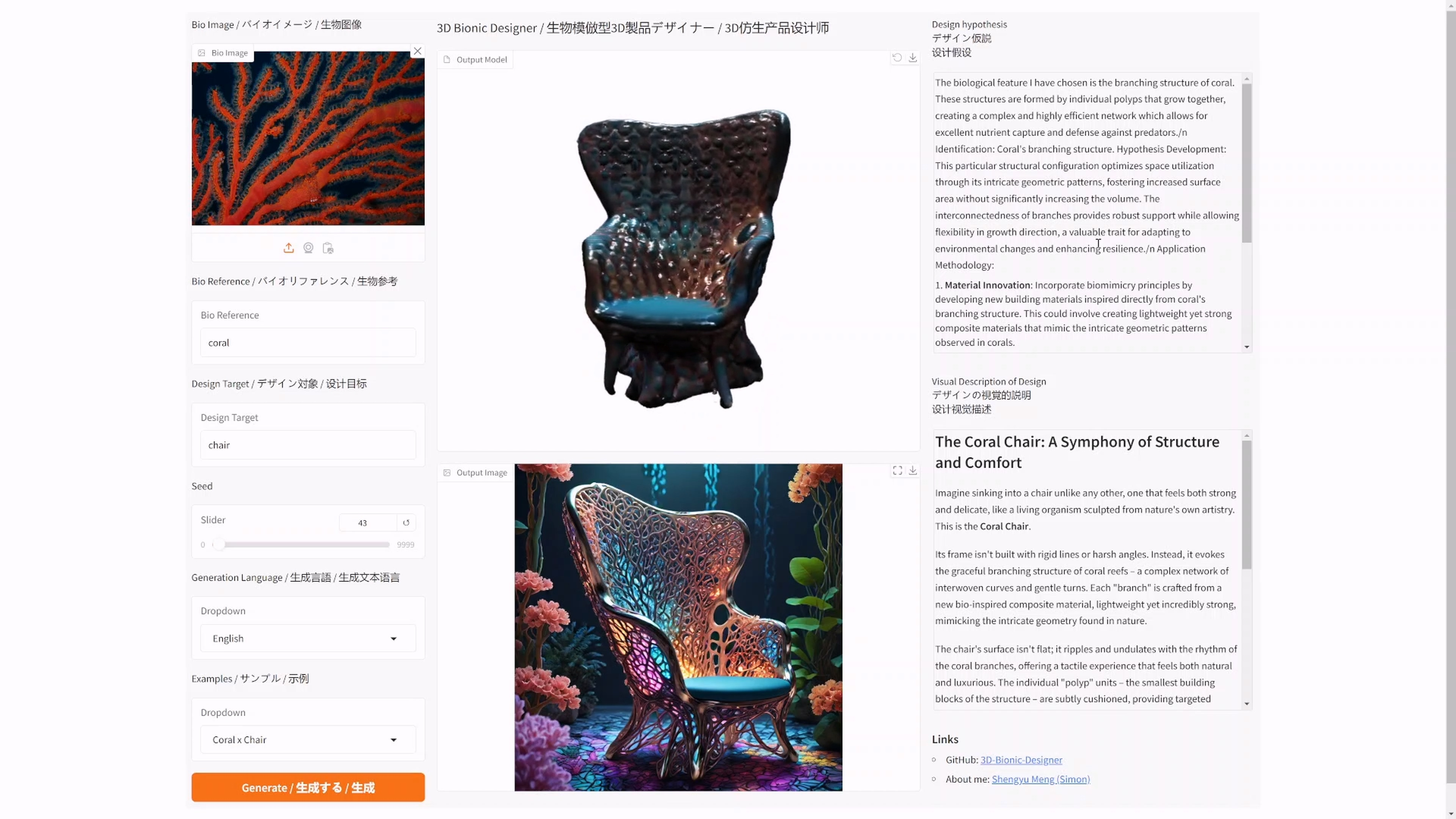

I have open-sourced an AI bionic product generator: 3D Bionic Designer

Microcosmic Universe

One and Three Objects, and An Attempt at Exhausting the Object

I have open-sourced an AI bionic product generator: 3D Bionic Designer

How I Used AI to Create a Promotional Video for Xiaomi's Daniel Arsham Limited Edition Smartphone