date

Jan 10, 2024

type

Post

status

Published

slug

4D-gen-en

summary

AI 3D generation has already become extremely competitive, and now 4D generation is trending.

tags

Project

Tutorial

Creation

Exhibition

AI tool

Technology

category

Knowledge

January 10, 2024 • 4 min read

by Simon Meng

AIGC has become so competitive that 2D, 3D, and video content are no longer enough: 4D has entered the scene 😂. Here is a quick roundup of recent 4D generation algorithms I came across, some of which are almost ready for practical use. Note: here, 4D refers both to 3D models with motion (4D models) and to videos that let you switch viewpoints during playback (4D spatial scenes).

- Animate124: Animates a single static image into a 3D video guided by a text description, going from 2D to 4D. It relies on a three-stage optimization and multiple diffusion priors.

➡️ Link: https://animate124.github.io/

- 4D-fy: Combines variational SDS (score distillation sampling) with text-to-image (T2I) models for text-driven 4D generation, using mixed gradient supervision to improve visual quality.

- Grounded 4D Content Generation (4DGen): Combines static 3D assets with monocular video sequences, giving users finer control over geometry and motion when building 4D scenes.

➡️ Link: https://vita-group.github.io/4DGen/

- DreamGaussian4D: Uses 4D Gaussian splatting to speed up content generation significantly while improving motion control and detail.

- Control4D: Lets users edit 4D portraits with text instructions, with an emphasis on high fidelity and consistent edits.

➡️ Link: https://control4darxiv.github.io/

- Consistent4D: Generates 4D objects from uncalibrated monocular video, complementing existing text-to-3D approaches.

➡️ Link: https://consistent4d.github.io/

- EasyVolcap: A PyTorch-based library for accelerating neural volumetric video research, covering volumetric video capture, reconstruction, and rendering. It provides tools that simplify the otherwise complex volumetric video workflow.

- SpacetimeGaussians: Introduces a dynamic scene representation based on spatiotemporal Gaussian splatting. It combines enhanced 3D Gaussians with feature splatting rendering, achieving high-resolution real-time rendering while keeping storage compact.

- GPS-Gaussian: Focuses on real-time reconstruction and rendering of 4D Gaussians, offering an efficient solution for novel view synthesis of humans with fast and accurate dynamic 3D rendering.

- Dynamic 3D Gaussians: Uses persistent dynamic view synthesis to move beyond the limitations of neural implicit field modeling, reconstructing dynamic objects and allowing models from different scenes to be combined.
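Several of the tools above build on Gaussians that change over time, so here is a minimal sketch of what "3D plus time" can look like as a data structure. This is not the API of any project listed here. The class `SpacetimeGaussian`, its fields, the linear motion model, and the Gaussian-shaped temporal opacity are all assumptions chosen for illustration, and real methods may use richer motion models and learned parameters.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SpacetimeGaussian:
    """Hypothetical 4D primitive: a 3D Gaussian whose center and opacity depend on time."""
    center: Vec3      # position at time t0
    velocity: Vec3    # linear motion term (an assumption for this sketch)
    opacity: float    # peak opacity, reached at time t0
    t0: float         # time at which the Gaussian is most visible
    t_sigma: float    # temporal extent (standard deviation along the time axis)

    def center_at(self, t: float) -> Vec3:
        # Move the center linearly away from its position at t0.
        dt = t - self.t0
        return tuple(c + v * dt for c, v in zip(self.center, self.velocity))

    def opacity_at(self, t: float) -> float:
        # Fade the Gaussian in and out with a 1D Gaussian falloff in time.
        dt = t - self.t0
        return self.opacity * math.exp(-0.5 * (dt / self.t_sigma) ** 2)


def visible_at(gaussians: List[SpacetimeGaussian], t: float,
               threshold: float = 0.01) -> List[Tuple[Vec3, float]]:
    """Return (center, opacity) pairs for Gaussians whose opacity exceeds threshold at time t."""
    frame = []
    for g in gaussians:
        alpha = g.opacity_at(t)
        if alpha > threshold:
            frame.append((g.center_at(t), alpha))
    return frame
```

The idea is that rendering a frame at time `t` reduces to evaluating every primitive at `t` and then treating the result as an ordinary static set of 3D Gaussians, which is one reason this family of representations can play back and switch viewpoints in real time.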

- Author: Simon Shengyu Meng

- Link: https://simonsy.net/article/4D-gen-en

- License: This article is licensed under CC BY-NC-SA 4.0. Please credit the source when reposting.

相关文章

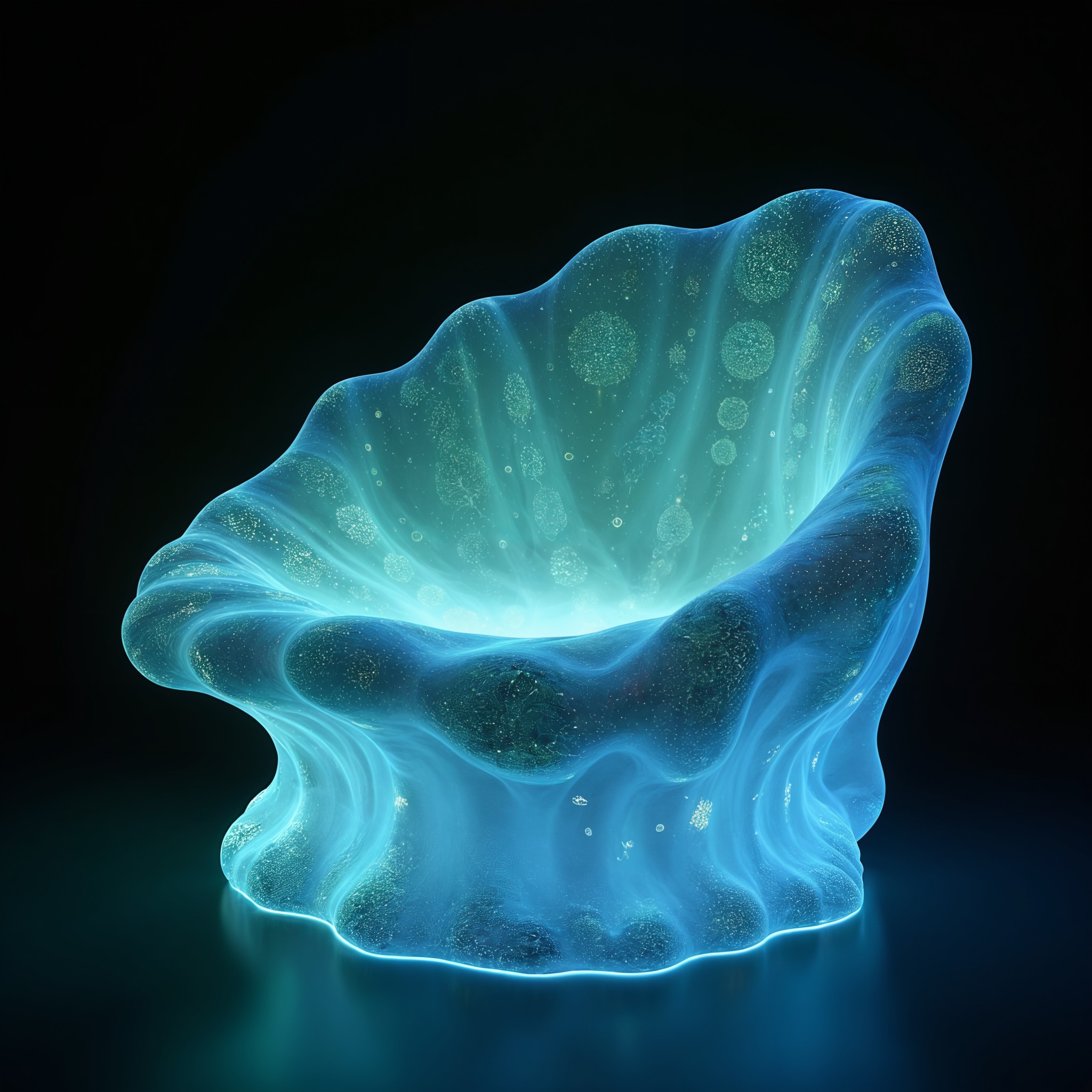

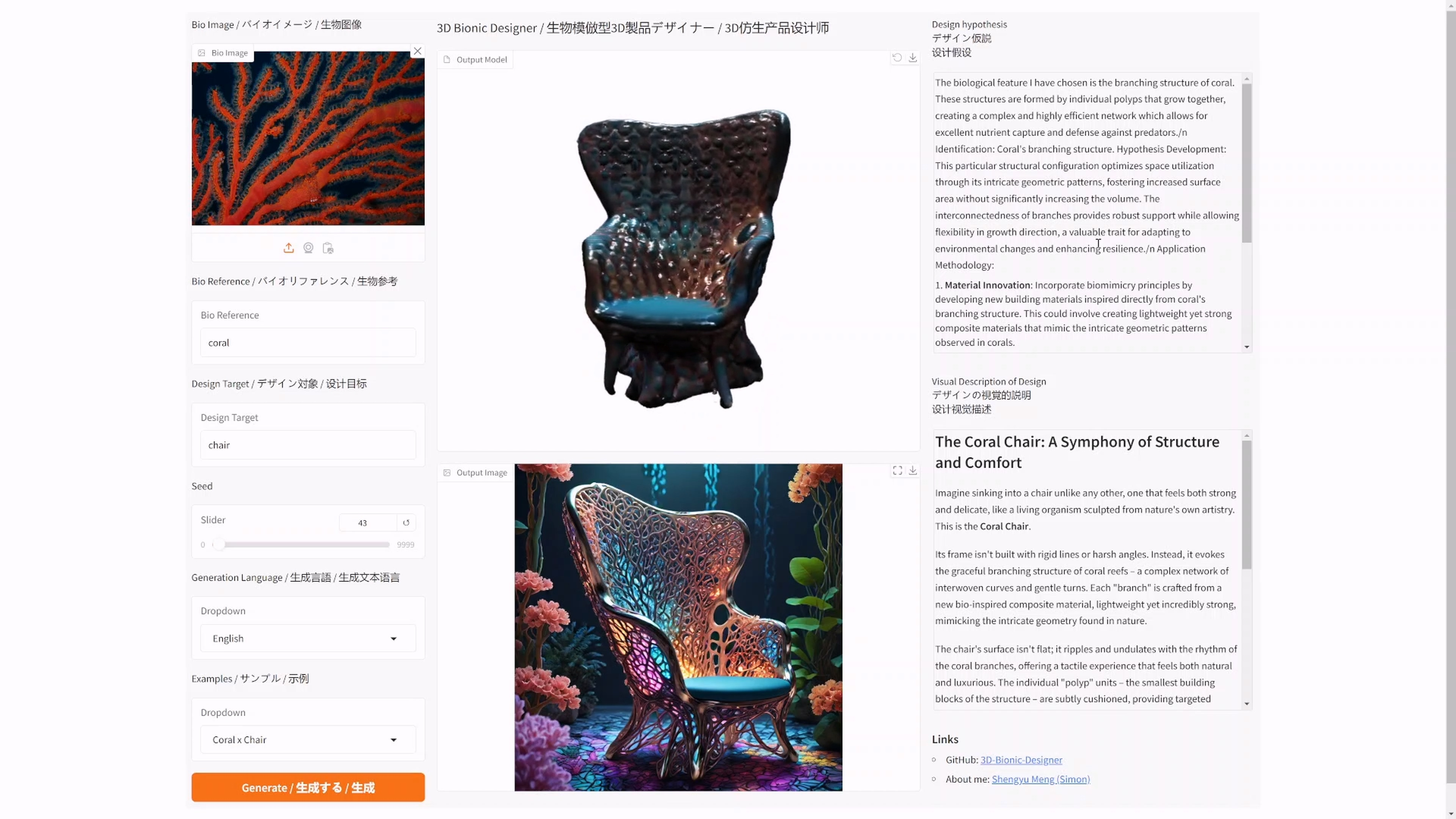

I have open-sourced an AI bionic product generator: 3D Bionic Designer

I have open-sourced an AI bionic product generator: 3D Bionic Designer

How I Used AI to Create a Promotional Video for Xiaomi's Daniel Arsham Limited Edition Smartphone

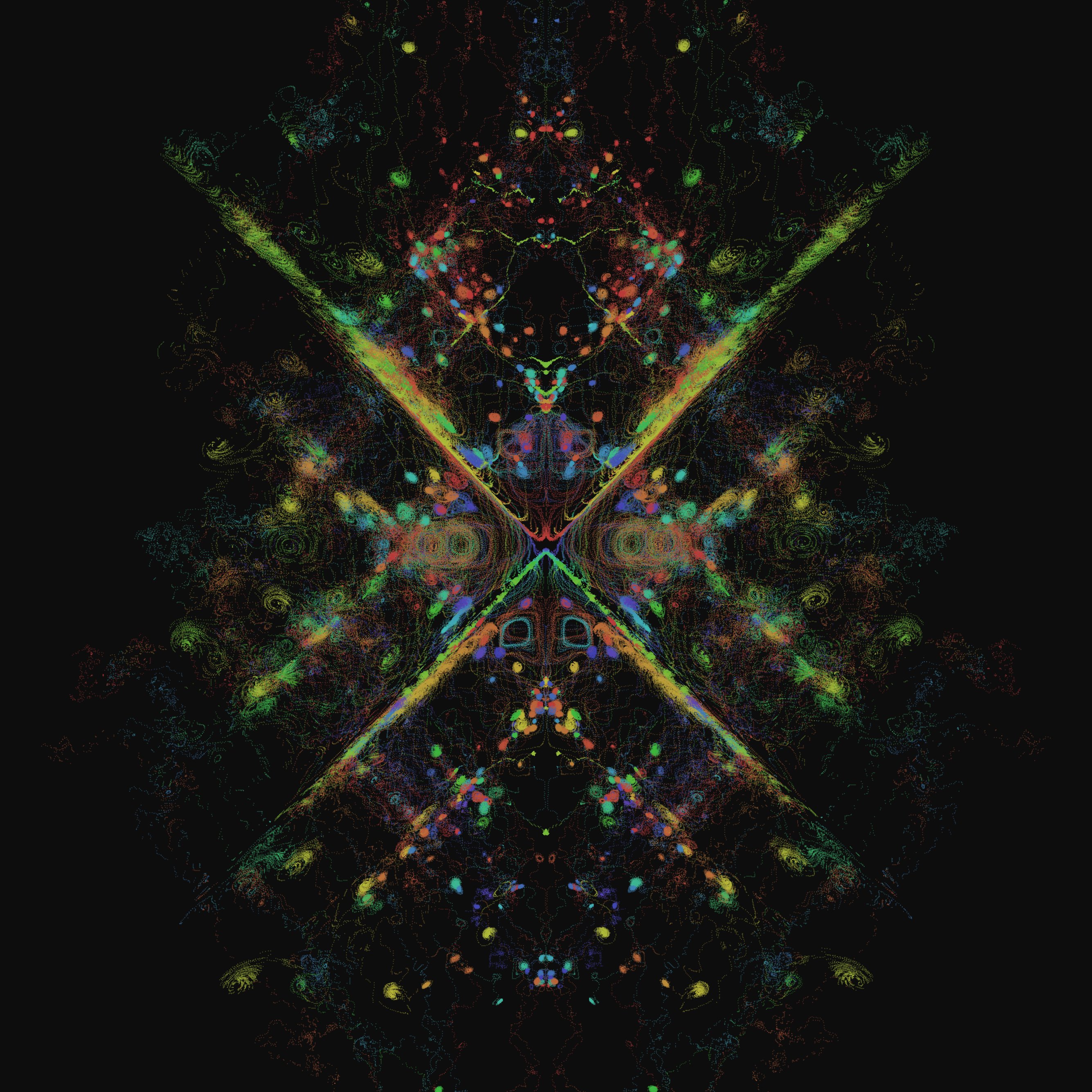

Works Series - Dimensional Recasting

The 2022 Venice - Metaverse Art Annual Exhibition: How Nature Inspires Design

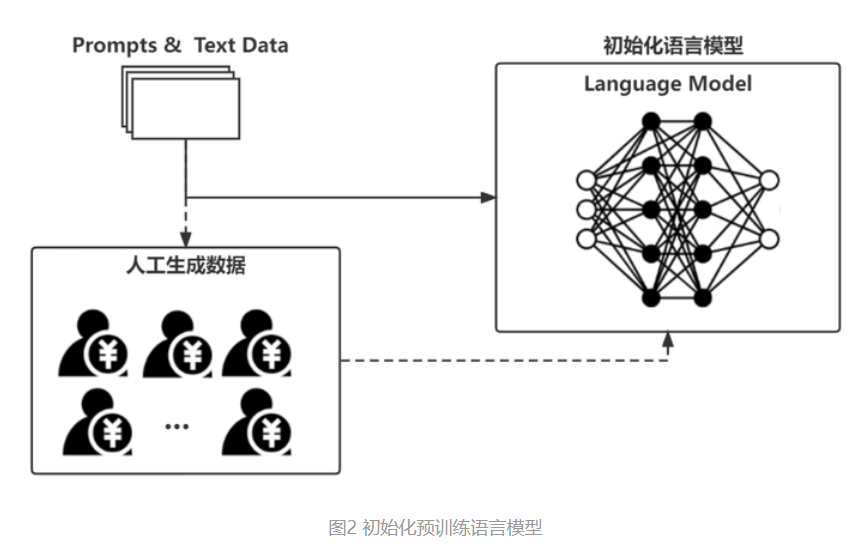

The Basic Principles of ChatGPT