date

Sep 5, 2023

summary

Close Reading of the Stanford AI Town Paper: Generative Agents: Interactive Simulacra of Human Behavior

tags

News

Programming

Technology

Tutorial

category

Knowledge

September 5, 2023 • 8 min read

Architectural Facade Perpetual Motion Machine and Controller — Original Paper Explanation

by Simon Meng, mp.weixin.qq.com

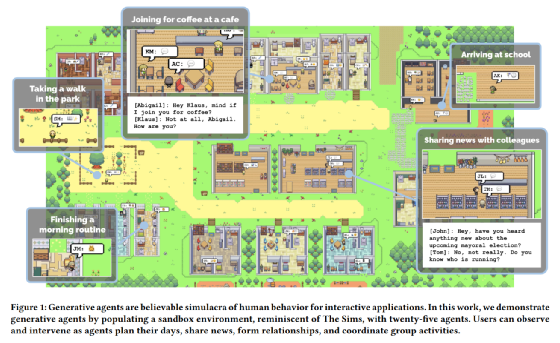

When I first saw the Stanford AI Town paper, "Generative Agents: Interactive Simulacra of Human Behavior" (Park et al., 2023), I was genuinely stunned 🥹. The idea that 25 intelligent agents could be driven by prompt magic alone to simulate a mini-Westworld is incredible. So when the code was released a few days ago, I read through it alongside the paper and came away both surprised and with a much clearer picture of how it works. Here I'd like to share my notes and answer some questions you might find interesting. You can also download the original paper with my annotations at the end! 🤗

My Summary:

Stanford University has shown that, without modifying the large language model (the experiment used GPT-3.5), an external memory database and a game map, combined with clever prompt decomposition, are enough for the Agents (NPCs/intelligent agents) in the AI Town to exhibit social behaviors similar to those of real humans (time planning, action decision-making, participation in social activities, etc.). This does not prove that models like GPT possess "consciousness": many of the Agents' "choices" are made under strict constraints, and every time an Agent acts it is simply responding to a streamlined prompt. It does demonstrate, however, that the capabilities of LLMs (large language models) can be transferred to complex tasks, including "social" interactions, and it provides a foundational framework for running social experiments based on language rather than quantification.

Answering Common Questions

How do Agents store and retrieve long-term memory?

- Memory Storage Method: Stored in an external database in JSON format.

- How Memory is Retrieved: When recalling memories, three scores are considered: Recency, Importance, and Relevance.

  - Recency: Memories accessed closer to the current time score higher.

  - Importance: Higher-entropy content (content that does not overlap with other memories, non-routine behavior) counts as more important. In the paper, this score comes from asking the LLM to rate each memory on a scale of 1 to 10.

  - Relevance: Measures the semantic similarity between the embedding of the current situation (for example, the ongoing dialogue) and the embeddings of stored memories.

- Memory Storage Format: The basic storage unit in the memory stream is a "node." Each node contains a natural language description plus timestamps for when it was created and when it was last retrieved. Details can be found in the nodes.json file in each character's folder. Examples of the node format are as follows:

  - Below is an event node:

  - Below is a thought (planning) node:
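As a rough illustration of the storage and retrieval ideas above (not the project's actual schema or code), the sketch below models a memory node and ranks nodes by the sum of normalized recency, importance, and relevance. The field names, the `decay` value, the equal weighting, and the 2-D toy "embeddings" are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryNode:
    """One entry in the memory stream (field names are hypothetical)."""
    description: str       # natural language description
    created: float         # sandbox time of creation, in hours
    last_accessed: float   # sandbox time of last retrieval, in hours
    importance: float      # assumed to be pre-scored on a 1-10 scale
    embedding: list        # assumed to come from a text-embedding model


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values):
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]


def retrieve(nodes, query_embedding, now, top_k=3, decay=0.995):
    """Return the top_k nodes ranked by recency + importance + relevance.

    `decay` is an assumed per-hour exponential decay factor.
    """
    recency = min_max([decay ** (now - n.last_accessed) for n in nodes])
    importance = min_max([n.importance for n in nodes])
    relevance = min_max(
        [cosine_similarity(n.embedding, query_embedding) for n in nodes]
    )
    scores = [r + i + v for r, i, v in zip(recency, importance, relevance)]
    order = sorted(range(len(nodes)), key=lambda k: scores[k], reverse=True)
    selected = [nodes[k] for k in order[:top_k]]
    for node in selected:
        node.last_accessed = now  # retrieval refreshes the recency timestamp
    return selected


# Toy data: 2-D vectors stand in for real embeddings.
memories = [
    MemoryNode("brushed teeth", 98.0, 99.0, 1, [1.0, 0.0]),
    MemoryNode("was invited to a party", 50.0, 50.0, 8, [0.0, 1.0]),
    MemoryNode("ate breakfast", 10.0, 10.0, 2, [1.0, 0.0]),
]
top = retrieve(memories, query_embedding=[0.0, 1.0], now=100.0, top_k=2)
print([m.description for m in top])
```

In this toy run the party invitation ranks first because it is both important and relevant to the query, while the very recent but mundane "brushed teeth" memory comes second on recency alone.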

How do Agents locate and move in the map?

- Route Calculation: Initially, I thought GPT could fully understand spatial coordinates, so that Agents could specify target coordinates directly in their output. I was mistaken 😂. In fact, Agents only name the destination in their output, such as "cafe," and the actual movement path on screen is computed and rendered automatically by the sandbox game engine.

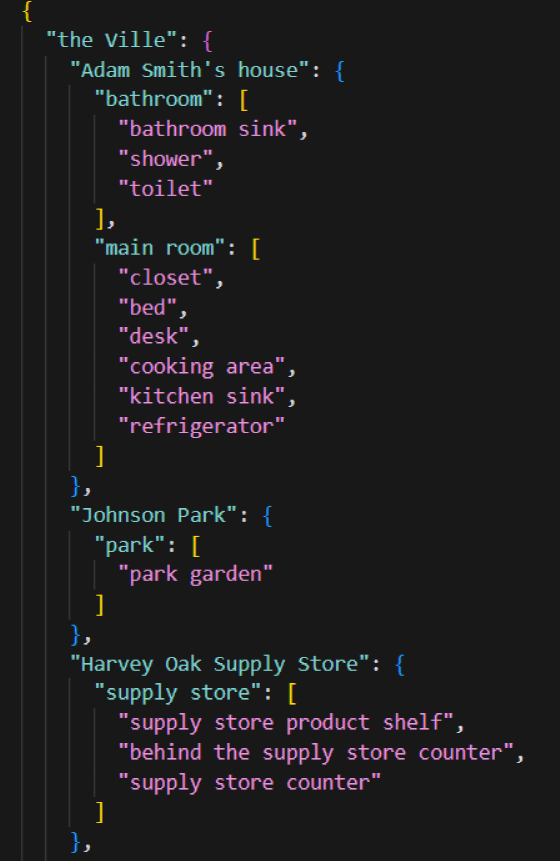

- Map Storage: The map information is stored in a JSON file using a tree structure, as shown in the image below:

- Map Information Converted to Prompt: When an agent needs to decide whether to move to another area, it is told its current location, the areas contained in that location, and the areas it can move to, followed by a dialogue scenario in which the agent makes its choice, as shown below:
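As a rough illustration of the two ideas in this section (not the paper's actual map data, prompt, or engine code), the sketch below stores a tiny map as a tree, renders the part around the agent as prompt text, and resolves a named destination to a tile path. The area names, the prompt wording, the `DESTINATION_TILES` lookup, and the use of breadth-first search as the pathfinder are all assumptions made for the example.

```python
from collections import deque

# Hypothetical map tree: world -> areas -> sub-areas.
WORLD = {
    "town": {
        "cafe": ["counter", "seating area"],
        "house": ["kitchen", "bedroom"],
        "park": ["garden"],
    }
}


def describe_location(world, world_name, area_name):
    """Render the tree around the agent's current area as prompt text."""
    areas = world[world_name]
    contained = ", ".join(areas[area_name])
    reachable = ", ".join(name for name in areas if name != area_name)
    return (
        f"You are currently in the {area_name}, which contains: {contained}.\n"
        f"Other areas you can go to: {reachable}.\n"
        "Which area should you go to next? Answer with an area name."
    )


def shortest_path(grid, start, goal):
    """Breadth-first search on a tile grid; '#' marks a blocked tile."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            path = []
            while current is not None:  # walk back to the start tile
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and nxt not in came_from:
                came_from[nxt] = current
                queue.append(nxt)
    return None  # goal is unreachable


# The agent only answers with a name; the engine maps it to a tile.
GRID = [
    "..#",
    ".#.",
    "...",
]
DESTINATION_TILES = {"cafe": (1, 2)}  # hypothetical name-to-tile lookup

print(describe_location(WORLD, "town", "house"))
print(shortest_path(GRID, (0, 0), DESTINATION_TILES["cafe"]))
```

The point of the split is that the language model never sees coordinates: it picks among names rendered from the tree, and a conventional pathfinder handles the geometry.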

How do Agents interact with other Agents and the environment?

- The environment itself can be slightly altered by the agents' actions: for example, a restroom can be occupied, a kitchen stove can be turned on or off, and pipes can leak.

- Interactions between agents are determined by the sandbox game engine. When the distance between two agents on the map falls below a certain threshold, there is a high probability that a dialogue is triggered, and the dialogue itself is carried out in natural language.
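A minimal sketch of these two mechanisms, under stated assumptions: the threshold of 3 tiles, the 0.8 probability, and both function names are made up for illustration and are not taken from the project's code.

```python
import math
import random


def maybe_start_dialogue(pos_a, pos_b, threshold=3.0, probability=0.8, rng=random):
    """Return True if two agents are closer than `threshold` tiles
    and a random draw below `probability` succeeds."""
    close_enough = math.dist(pos_a, pos_b) < threshold
    return close_enough and rng.random() < probability


def set_object_state(world_state, obj, new_state):
    """Return a copy of the world state with one object's state changed,
    e.g. an agent turning the stove on."""
    updated = dict(world_state)
    updated[obj] = new_state
    return updated


state = {"stove": "off", "restroom": "vacant"}
state = set_object_state(state, "stove", "on")
print(state)
print(maybe_start_dialogue((2, 2), (3, 3)))  # True or False, depending on the draw
```

Passing a seeded `random.Random` instance as `rng` makes the trigger reproducible, which is handy when replaying a simulation.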

How do Agents make decisions?

- Observation: Observing includes both perceiving and storing what was perceived in memory. Observed content generally includes the agent's own thoughts, the behaviors of other agents that overlap with it in space and time on the map, and the states of non-agent objects on the map. Here are some examples of observable content:

- Planning: A plan describes a sequence of activities the agent intends to undertake, including the location and the start and end times of each. Plans are also stored in long-term memory, so they can be retrieved together with other memories and modified. Plans are generated recursively, from broad to detailed: first, the LLM is given a character description and an initial time frame and asked to fill in a plan hour by hour; then each hour is fed back to the LLM recursively to fill in its details. Here's an example of driving the LLM to plan for an agent:

- Reflection and Reaction: Agents perceive the world and store memories, periodically distilling them into higher-level reflections, and they use these memories to decide whether to stick to their original plans or react. Below is a prompt case illustrating how an agent simulates its reaction when encountering another agent:
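The broad-to-detailed planning step can be sketched as a small recursive function. This is an illustration under stated assumptions, not the project's code or prompts: `decompose`, `fake_llm`, the minutes-since-midnight time format, and the 60-minute stopping size are all hypothetical, and `fake_llm` simply halves a block where a real system would call the language model.

```python
def decompose(task, start, end, llm, max_minutes=60):
    """Recursively split a (task, start, end) block until every block
    is at most `max_minutes` long. Times are minutes since midnight."""
    if end - start <= max_minutes:
        return [(start, end, task)]
    plan = []
    for sub_task, sub_start, sub_end in llm(task, start, end):
        if sub_end - sub_start >= end - start:
            # The model made no progress on this block: keep it as a leaf
            # instead of recursing forever.
            plan.append((sub_start, sub_end, sub_task))
        else:
            plan.extend(decompose(sub_task, sub_start, sub_end, llm, max_minutes))
    return plan


def fake_llm(task, start, end):
    """Stand-in for a real model call: splits a block into two halves."""
    mid = (start + end) // 2
    return [(f"{task} (part 1)", start, mid), (f"{task} (part 2)", mid, end)]


day_plan = decompose("work on painting", 9 * 60, 13 * 60, fake_llm)
for start, end, task in day_plan:
    print(f"{start // 60:02d}:{start % 60:02d}-{end // 60:02d}:{end % 60:02d}  {task}")
```

Keeping the model call behind a plain callable means the recursion can be exercised with a stub first and wired to a real LLM later.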

- Author: Simon Shengyu Meng

- Link: https://simonsy.net/article/ai-town-en

- License: This article is licensed under CC BY-NC-SA 4.0. Please credit the source when reposting.
